
Merge, Dedup & Transform Datasets


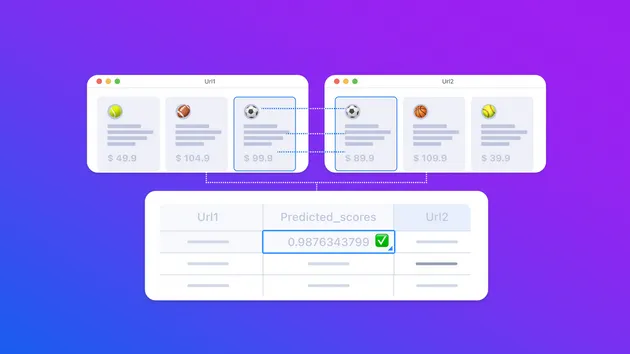

The ultimate dataset processor. Extremely fast merging, deduplications & transformations all in a single run.

De-duplicate dataset error

Closed

While running the task, I keep getting this error:

2024-05-01T09:22:59.977Z ACTOR: Pulling Docker image of build BzYf2ui1QKTRzHNQI from repository.
2024-05-01T09:23:02.120Z ACTOR: Creating Docker container.
2024-05-01T09:23:02.325Z ACTOR: Starting Docker container.
2024-05-01T09:23:04.501Z INFO System info {"apifyVersion":"2.2.0","apifyClientVersion":"2.0.4","osType":"Linux","nodeVersion":"v16.20.2"}
2024-05-01T09:23:04.604Z My input:
2024-05-01T09:23:04.607Z {
2024-05-01T09:23:04.610Z   datasetIds: [ 'Id07DYkDaLOduqIsZ' ],
2024-05-01T09:23:04.612Z   fields: [
2024-05-01T09:23:04.615Z     'categoryName',
2024-05-01T09:23:04.618Z     'title',
2024-05-01T09:23:04.620Z     'address',
2024-05-01T09:23:04.622Z     'phone',
2024-05-01T09:23:04.624Z     'website',
2024-05-01T09:23:04.627Z     'totalScore',
2024-05-01T09:23:04.629Z     'reviewsCount',
2024-05-01T09:23:04.631Z     'openingHours'
2024-05-01T09:23:04.633Z   ],
2024-05-01T09:23:04.640Z   fieldsToLoad: [
2024-05-01T09:23:04.642Z     'categoryName',
2024-05-01T09:23:04.645Z     'title',
2024-05-01T09:23:04.650Z     'address',
2024-05-01T09:23:04.652Z     'phone',
2024-05-01T09:23:04.654Z     'website',
2024-05-01T09:23:04.656Z     'totalscore',
2024-05-01T09:23:04.658Z     'reviewsCount',
2024-05-01T09:23:04.665Z     'openingHours'
2024-05-01T09:23:04.667Z   ],
2024-05-01T09:23:04.670Z   mode: 'dedup-as-loading',
2024-05-01T09:23:04.699Z   output: 'unique-items',
2024-05-01T09:23:04.702Z   outputDatasetId: 'tennis-apeldoorn',
2024-05-01T09:23:04.705Z   postDedupTransformFunction: 'async (items, { Apify }) => {\n return items;\n}',
2024-05-01T09:23:04.707Z   preDedupTransformFunction: 'async (items, { Apify }) => {\n return items;\n}',
2024-05-01T09:23:04.709Z   verboseLog: false,
2024-05-01T09:23:04.712Z   outputTo: 'dataset',
2024-05-01T09:23:04.714Z   parallelLoads: 10,
2024-05-01T09:23:04.716Z   parallelPushes: 5,
2024-05-01T09:23:04.719Z   uploadBatchSize: 500,
2024-05-01T09:23:04.722Z   batchSizeLoad: 50000
2024-05-01T09:23:04.724Z }
2024-05-01T09:23:04.726Z WARN Limiting parallel pushes to 1 because dedup as loading is already pushing in parallel by default
2024-05-01T09:23:04.728Z WARN For dedup-as-loading mode, batchSizeLoad must equal uploadBatchSize. Setting batch size to 500
2024-05-01T09:23:04.731Z INFO Output dataset ID was not provided, will use or create named dataset: tennis-apeldoorn with ID: RL3oVAamyCvg2c3Ev
2024-05-01T09:23:05.249Z INFO Loading deduplicating set, currently contains 0 unique keys (already deduplicated items)
2024-05-01T09:23:05.344Z INFO Dataset LTqc9Efgem6XXVf8r has 709 items
2024-05-01T09:23:05.347Z INFO Number of requests to do: 2
2024-05-01T09:23:05.612Z INFO Items loaded from dataset LTqc9Efgem6XXVf8r: 209, offset: 500, total loaded from dataset LTqc9Efgem6XXVf8r: 209, total loaded: 209
2024-05-01T09:23:05.615Z INFO [Batch-LTqc9Efgem6XXVf8r-500]: Loaded: 209, Total unique: 5
2024-05-01T09:23:05.618Z ERROR
2024-05-01T09:23:05.621Z TypeError: Cannot read properties of undefined (reading '500')
2024-05-01T09:23:05.623Z     at processFn (/usr/src/app/src/dedup-as-loading.js:64:40)
2024-05-01T09:23:05.625Z     at processTicksAndRejections (node:internal/process/task_queues:96:5)
2024-05-01T09:23:05.628Z     at async bluebird.map.concurrency (/usr/src/app/src/loader.js:240:13)

Hello,

Thanks for the report. This happened because you accidentally passed a run ID instead of a dataset ID in `datasetIds`. I have updated the Actor so that it handles run IDs as well now.
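For anyone who wants to guard against the same mix-up in their own code, here is a minimal sketch of accepting either kind of ID. It is not the Actor's actual fix. `resolveDatasetId` and `fakeClient` are hypothetical names, and the sketch assumes a client shaped like `ApifyClient` from the `apify-client` package, where `client.dataset(id).get()` and `client.run(id).get()` resolve to `undefined` when nothing is found. Which of the two IDs from the log is the run and which is its dataset is also an assumption, inferred from the reply.

```javascript
// Sketch only: accept either a dataset ID or a run ID and return a dataset ID.
// `client` is assumed to behave like ApifyClient from the `apify-client` package.
async function resolveDatasetId(client, id) {
    // First try the ID as a dataset ID.
    const dataset = await client.dataset(id).get();
    if (dataset) return dataset.id;

    // Otherwise try it as a run ID and fall back to the run's default dataset.
    const run = await client.run(id).get();
    if (run && run.defaultDatasetId) return run.defaultDatasetId;

    throw new Error(`"${id}" is neither a dataset ID nor a run ID`);
}

// Hypothetical in-memory stand-in for the client, so the sketch runs offline.
// The IDs come from the log; their run/dataset relationship is assumed.
const fakeClient = {
    dataset: (id) => ({
        get: async () => (id === 'LTqc9Efgem6XXVf8r' ? { id } : undefined),
    }),
    run: (id) => ({
        get: async () => (id === 'Id07DYkDaLOduqIsZ'
            ? { id, defaultDatasetId: 'LTqc9Efgem6XXVf8r' }
            : undefined),
    }),
};

// Passing the run ID yields the dataset ID that the loader reported in the log.
resolveDatasetId(fakeClient, 'Id07DYkDaLOduqIsZ')
    .then((datasetId) => console.log(datasetId));
```

With a real client you would pass `new ApifyClient({ token })` in place of `fakeClient` and resolve every entry of `datasetIds` before starting the run.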

- 251 monthly users

- 96.8% runs succeeded

- 0.78 days response time

- Created in Apr 2020

- Modified 18 days ago

Lukáš Křivka
