No credit card required

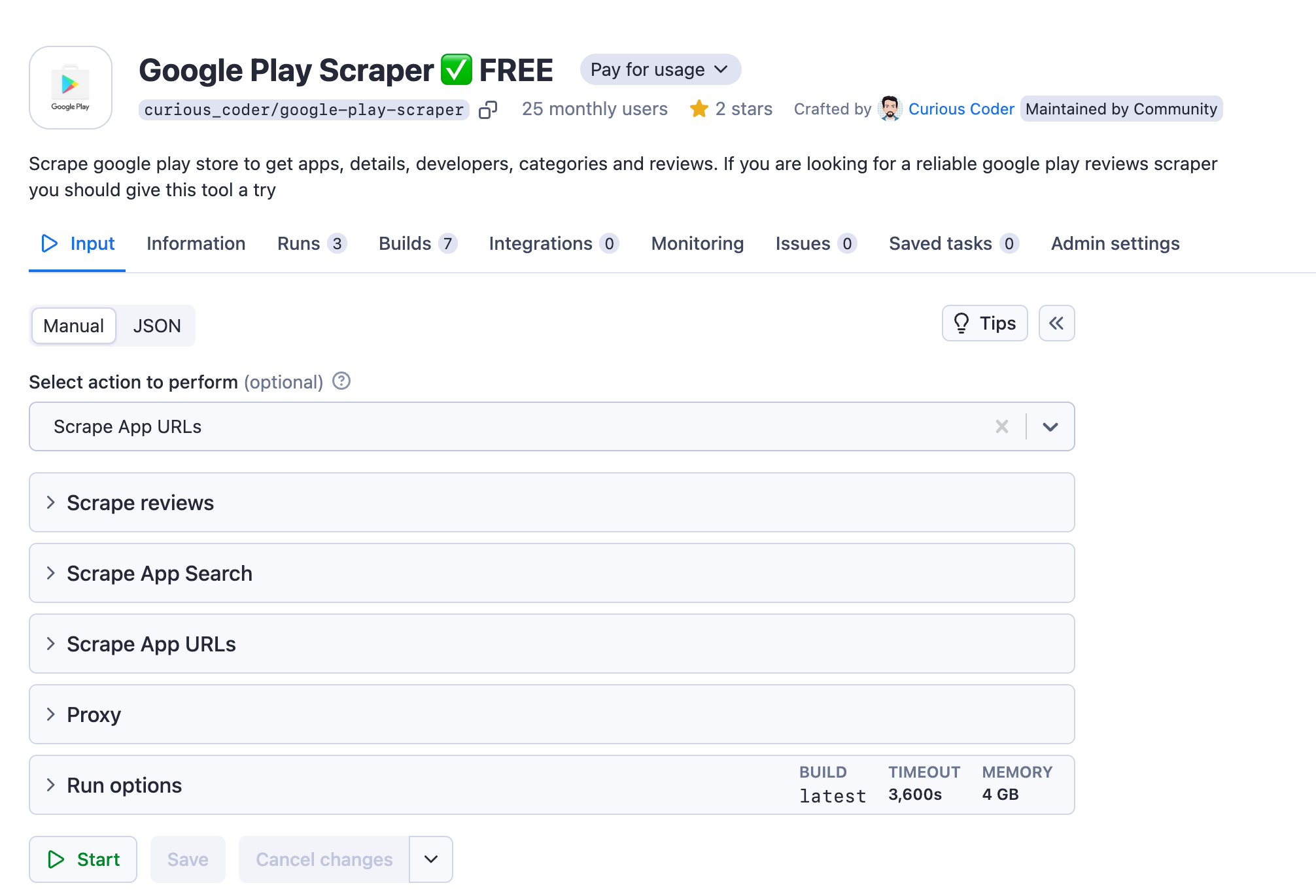

Google Play Scraper ✅ FREE

Scrape the Google Play Store to get apps, details, developers, categories, and reviews. If you are looking for a reliable Google Play reviews scraper, give this tool a try.

👾 What is Google Play Scraper?

The Google Play Scraper is designed to scrape Google Play Store apps, reviews, and developer data based on your search query or URL.

🔭 Extract Google Play data by keywords, specific URLs or App IDs

🧚‍♀️ Extract official app details, popularity, user reviews, and developer information in one go

👽 Extract app data from various categories on Google Play Store including Games, Apps, and Kids section

☄️ Get more than 3,000 results for free

⬇️ Download Google Play data in Excel, CSV, JSON, and other formats

🕹 Why scrape data from Google Play Store?

Google Play has millions of apps and is a great source of data for market research. Here are some interesting uses for it:

- Track app performance and reviews

- Monitor competitors and their updates

- Identify market trends and user preferences

- Analyze developer activity and app features

- Research user sentiment and feedback

📱 What app data can Google Play Scraper extract?

Google Play Scraper extracts details from apps and app reviews such as:

| 📱 App title and app ID | 📝 App description | ⭐ App score |
| --- | --- | --- |
| 📈 App ratings and star histogram | ⬇️ Number of installs | 🔗 App URL |
| 💵 Pricing details | 📸 Screenshot and video URLs | 🐲 Game genre and categories |
| 🌐 Developer website | 📍 Developer address | 📧 Developer email |
| 📅 Release date | 🆕 Recent changes | 🔐 Privacy policy |
| 👤 Reviewer's user name and image | ⭐ Review score | 🗓️ Review date |
| 🔗 Review URL | 📝 Review text | 📦 App version |
| 👍 Number of votes | 🎮 Criteria ratings | 💬 Reply text and date |

🔧 How to scrape app data from Google Play Store?

You can scrape details on all apps that match your query or a given URL. Just follow these steps to get your data in a few minutes.

- Find Google Play Scraper on Apify Store and click the "Try for free" button.

- Enter your search query and specify the number of apps you want to scrape.

- Click "Start" and wait for the app or reviews data to be extracted.

- Preview and download your dataset in JSON, XML, CSV, Excel, or HTML, or export it via API.
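For the "export it via API" step, here is a minimal sketch of building a download link for a finished run's dataset. It assumes the public Apify API dataset items endpoint with its `format` query parameter; `YOUR_DATASET_ID` is a placeholder, and the helper name `dataset_export_url` is made up for illustration.

```python
from urllib.parse import quote, urlencode

API_BASE = "https://api.apify.com/v2"
# Formats named in the step above; "xlsx" is the value used for Excel.
EXPORT_FORMATS = {"json", "xml", "csv", "xlsx", "html"}


def dataset_export_url(dataset_id, fmt="json", token=None):
    """Build a URL that downloads a dataset's items in the given format."""
    if fmt not in EXPORT_FORMATS:
        raise ValueError(f"Unsupported format: {fmt}")
    params = {"format": fmt}
    if token:
        # Only needed when the dataset is not publicly readable.
        params["token"] = token
    return f"{API_BASE}/datasets/{quote(dataset_id, safe='')}/items?{urlencode(params)}"


# YOUR_DATASET_ID is a placeholder; copy the real ID from the run's Storage tab.
print(dataset_export_url("YOUR_DATASET_ID", "csv"))
```

The resulting link can be opened in a browser or fetched with any HTTP client.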

⭐️ How to scrape reviews data from Google Play Store?

You can scrape all reviews that match the app ID. Just follow these steps to get your data in a few minutes.

- Find Google Play Scraper on Apify Store and click the "Try for free" button.

- Enter app IDs and specify the number of reviews you want to scrape.

- If you don't have app IDs ready, first extract them with the same Google Play Scraper, then use the `appId` values from the output as the new input for reviews.

- Click "Start" and wait for the app or reviews data to be extracted.

- Preview and download your dataset in JSON, XML, CSV, Excel, or HTML, or export it via API.

💸 Is this Google Play Store API free?

Yes. Apify provides you with $5 free usage credits every month on the Apify Free plan, so with Google Play Scraper you can scrape thousands of results for free within those limits. The number of free results depends on the complexity of your input: URLs, reviews, or keywords.

⬇️ Input

To scrape app details, provide search keywords or app URLs as input. To scrape app reviews, provide Google Play app IDs and the number of reviews to scrape.

You can input data by filling out fields, using JSON, or programmatically via an API. For a full explanation of input in JSON, see the input tab.

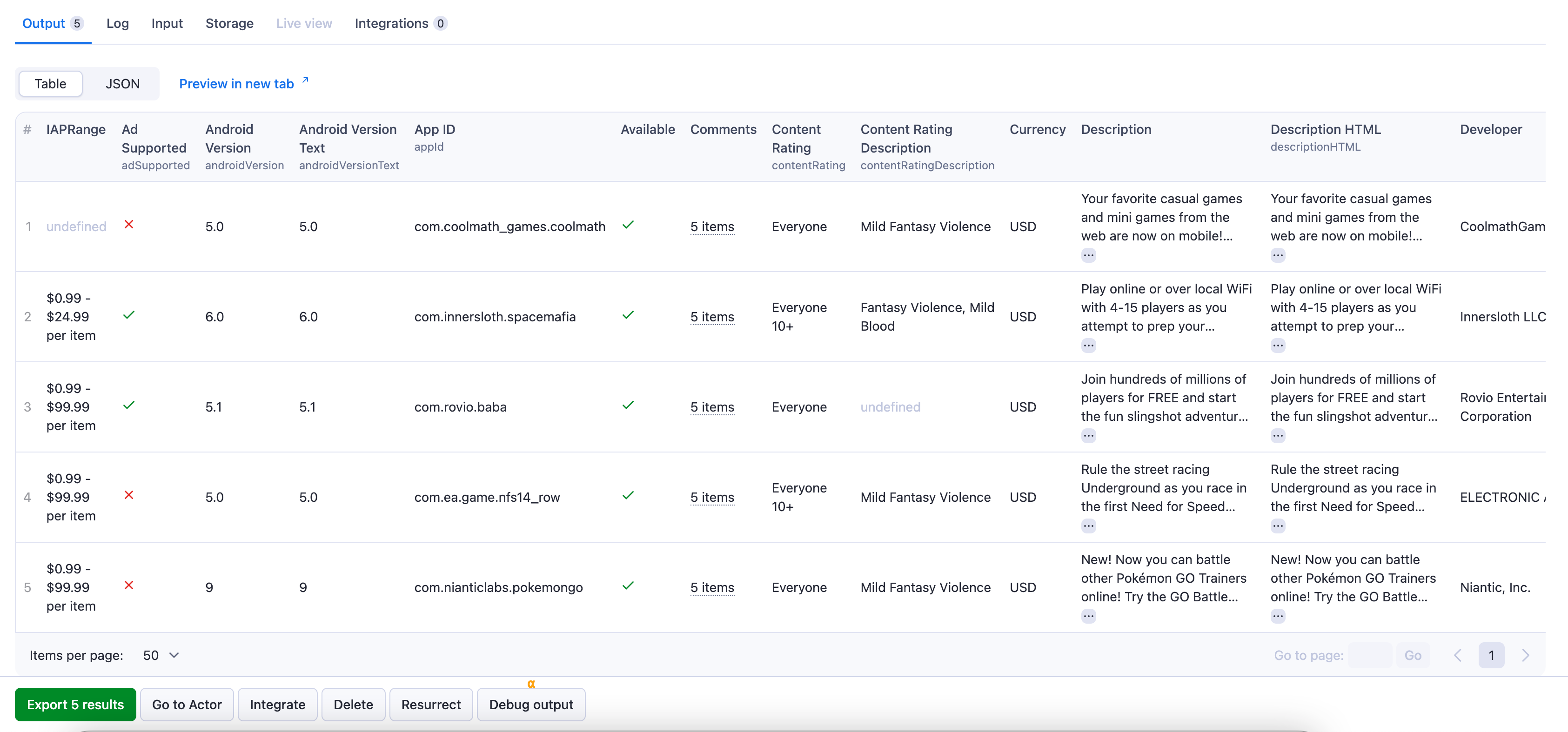

⬆️ Output sample

The results will be wrapped into a dataset which you can find in the Output tab. You can preview all the fields in the Storage tab and choose the format in which to export the Google Play Store data you've extracted: JSON, CSV, Excel, or HTML table. Below are two sample dataset items in JSON:

📲 App details

```json
{
  "title": "Coolmath Games Fun Mini Games",
  "description": "Your favorite casual games and mini games from the web are now on mobile! Coolmath Games is full of fun casual games, mini games, trivia & brain-training puzzles for everyone. Play fun logic, math and thinking games today!\n\nIf you love the Coolmath Games website, you’ll love the official app with hundreds of our favorite math, logic, casual, trivia, thinking and strategy mini games made especially for mobile phones and tablets! Play all sorts of fun mini games, trivia and educational games for hours – Coolmath Games has a fun mini game for you!\n\nWith new casual mini games added every week, you’ll never run out of new gaming challenges. Coolmath Games is kid-friendly because there is never violence, empty action, or inappropriate language – just a wide range of fun casual games and strategy puzzles! Fun starts with mini games!\n\nPlay our most popular logic, casual games, math and strategy mini games including:\n\n* Bob the Robber: Play this fun classic game and use logic to help Bob expose the real crook!\n* Block the Pig: The fun casual strategy game where you place walls to prevent the little snorter from escaping!\n* IQ Ball: Use your brain to solve climbing and grabbing puzzles in as few moves as possible. \n* Checkers: A strategy classic! Play against the computer or challenge other players to an online multiplayer game!\n* Parking Fury: Solve fun parking puzzles as you drive and play with realistic steering and pedal controls. \n* 2048: Match and multiply your way through this fun logic puzzle to 2048.\n* Toy Defense: A classic tower defense game. Find the best strategy to protect your base!\n\nWith game categories like Fun Physics, Path Planning, Quick Reaction, Drawing and Match 3, Coolmath Games lets you train your brain in all sorts of ways. Recently named one of the internet’s all-time favorite websites by Popular Mechanics, Coolmath Games brings your favorite educational mini games, logic, math and learning games to your mobile devices.\n\nCoolmath Games has mini games, casual games and trivia for hours of fun and learning!",
  "descriptionHTML": "Your favorite casual games and mini games from the web are now on mobile! Coolmath Games is full of fun casual games, mini games, trivia & brain-training puzzles for everyone. Play fun logic, math and thinking games today!<br><br>If you love the Coolmath Games website, you’ll love the official app with hundreds of our favorite math, logic, casual, trivia, thinking and strategy mini games made especially for mobile phones and tablets! Play all sorts of fun mini games, trivia and educational games for hours – Coolmath Games has a fun mini game for you!<br><br>With new casual mini games added every week, you’ll never run out of new gaming challenges. Coolmath Games is kid-friendly because there is never violence, empty action, or inappropriate language – just a wide range of fun casual games and strategy puzzles! Fun starts with mini games!<br><br>Play our most popular logic, casual games, math and strategy mini games including:<br><br>* Bob the Robber: Play this fun classic game and use logic to help Bob expose the real crook!<br>* Block the Pig: The fun casual strategy game where you place walls to prevent the little snorter from escaping!<br>* IQ Ball: Use your brain to solve climbing and grabbing puzzles in as few moves as possible. <br>* Checkers: A strategy classic! Play against the computer or challenge other players to an online multiplayer game!<br>* Parking Fury: Solve fun parking puzzles as you drive and play with realistic steering and pedal controls. <br>* 2048: Match and multiply your way through this fun logic puzzle to 2048.<br>* Toy Defense: A classic tower defense game. Find the best strategy to protect your base!<br><br>With game categories like Fun Physics, Path Planning, Quick Reaction, Drawing and Match 3, Coolmath Games lets you train your brain in all sorts of ways. Recently named one of the internet’s all-time favorite websites by Popular Mechanics, Coolmath Games brings your favorite educational mini games, logic, math and learning games to your mobile devices.<br><br>Coolmath Games has mini games, casual games and trivia for hours of fun and learning!",
  "summary": "Play strategy, logic & learning games for a fun mental workout!",
  "installs": "1,000,000+",
  "minInstalls": 1000000,
  "maxInstalls": 1369210,
  "score": 3.576,
  "scoreText": "3.6",
  "ratings": 5015,
  "reviews": 1477,
  "histogram": {
    "1": 1256,
    "2": 279,
    "3": 400,
    "4": 466,
    "5": 2606
  },
  "price": 0,
  "free": true,
  "currency": "USD",
  "priceText": "Free",
  "available": true,
  "offersIAP": false,
  "androidVersion": "5.0",
  "androidVersionText": "5.0",
  "androidMaxVersion": "VARY",
  "developer": "CoolmathGames.com",
  "developerId": "7876604119083871959",
  "developerEmail": "mobile@coolmath.com",
  "developerWebsite": "https://coolmathgames.com",
  "developerAddress": "122 East 42nd Street\nSuite 1611\nNew York, NY 10168",
  "privacyPolicy": "https://www.coolmathgames.com/app-privacy-policy",
  "developerInternalID": "7876604119083871959",
  "genre": "Arcade",
  "genreId": "GAME_ARCADE",
  "categories": [
    {
      "name": "Casual",
      "id": "GAME_CASUAL"
    },
    {
      "name": "Minigames",
      "id": null
    },
    {
      "name": "Multiplayer",
      "id": null
    },
    {
      "name": "Competitive multiplayer",
      "id": null
    },
    {
      "name": "Single player",
      "id": null
    },
    {
      "name": "Stylized",
      "id": null
    },
    {
      "name": "Offline",
      "id": null
    }
  ],
  "icon": "https://play-lh.googleusercontent.com/GOSj_Oh_taPXIomBMiiTRNM1Mg3LP9qKJ4gRIuCRV2jQ0oa9VUIC2SHAiY8GL09j6ts",
  "headerImage": "https://play-lh.googleusercontent.com/iPKMWbRxgfaUTqYSezs2FgBQKRlJaSyCIR3aCkxHh2t6o28Z7zQOsn5v2CIsm11yoGU",
  "screenshots": [
    "https://play-lh.googleusercontent.com/ZzFGj_MgQ2_LoXc0XleZzTiKLTktYve5IxgLUcmLJIXdFBRRojMVTH052SNfkVI1dJA8",
    "https://play-lh.googleusercontent.com/OkJFfEddiKGa8mbgvcqNCIYpZQKwlhDgFs4KTZaykFdZz7Z0yUdVjNmAtM9Gmxy1ubZf",
    "https://play-lh.googleusercontent.com/OIZTR-_YD-P1Z2pW4draBCIg7nbHrw7pUEMszM892Er_u2UpBu7H-7028hGXg_ndyTXB",
    "https://play-lh.googleusercontent.com/hjRM__CGvcsuzzKzaz9SXwzHmpF6Yb6tR16350M8RD21iIbTihKR4YfZ333MGvAOVKfA",
    "https://play-lh.googleusercontent.com/wkKOflrpH835tjgDWyn3KApglNSJiCbRTMkK9AEasLRJfG33HnaaegD3kKQRU5Dheg",
    "https://play-lh.googleusercontent.com/Bq56xjAcMbBd8VFvVfmnO52wkGJs1FpwTBEw6L-8WjNBmkQ0CxVZ3LUpmhMPjz-ksBY",
    "https://play-lh.googleusercontent.com/rZjjdGivAnBWcEylFadbB_0vJ8B1zQJqfNdFEnQlE33ERWnXpoOPRu72H8SGUF5b-r0",
    "https://play-lh.googleusercontent.com/wteh08ZsZI6FqUN3E6yYV4b-22yqlKFynLmP3EzFUpw6O5GNmwjQZMi4y88mHqZCNmw",
    "https://play-lh.googleusercontent.com/lJ0DkN9QW3c9Xn7-XimJsGHC7K7Cl-VWd0fCIuN1r3k2oSBrx9fze9LCUAgYT0LRJ5b3",
    "https://play-lh.googleusercontent.com/2J8AJqZADwSbkcPlmUqMO5RAlX2WEZ16wcqlGoVqWPmyYUQ_f4pmMYnW0dau0AsU5i0",
    "https://play-lh.googleusercontent.com/6r9DmlE6ZNxe6BiPzWj8sZSk0ZBbLvOgIZLXz37YiInWpGBP2WVeA-AM4TIhxHi33A",
    "https://play-lh.googleusercontent.com/fZVMzsmiFjbPimBm6kFhJC5CvKkN_FpyGUa3CbCJqZiw_culyVNYYMef5wTMtK4OZsE",
    "https://play-lh.googleusercontent.com/g40qsYIcIkgDoZWJwqk-VfkvFY2kGt2-LzxogzWQrQVOQC0CYLal5TTjIREr564fzaA",
    "https://play-lh.googleusercontent.com/9MN3Ui-lcNt9DFvyA4huzG2HEQr1bSnGyshDAdcMLRIdfI0I4hvlc0qcx8P4e1tAbWk",
    "https://play-lh.googleusercontent.com/L3Vu-CIWuWhYCrxmRkMwSw1_yHGU0WfB3uJCHw9SQFFaQ5lwOiLKaJuR8Lvno9ERdwYX",
    "https://play-lh.googleusercontent.com/q5hJFrDC6_kQlsscXNG8S8MlLBcy5zd7oT3AzolXm-FR7fZYiUq-s-7pOTwvwF3cnMDU",
    "https://play-lh.googleusercontent.com/-v9zOOaOlCbuPOhgwX_lnbjZAkY7_Vjm7hRyDhLcy2M6v4pXsjKIg3epL0pauUYPS58",
    "https://play-lh.googleusercontent.com/lrXfhIS4uvxeLaIbi_cRt-HXZ16qYb9ukGSSQiaFPkDIKb6sW1NBUSagO_Q1ewjk4TuR",
    "https://play-lh.googleusercontent.com/-lWEH7KWcTzZZ9AkCK3ZPkj8e1tw55-pXHu0rU9kyElXLTHEcw1piqY0_Ny2TrSCde0",
    "https://play-lh.googleusercontent.com/eqMTHwdlDauIuFVqDeuLRaz88BPhUtxww87Od1v6FXneifijs-KQ-ebSq15xasc1uhU",
    "https://play-lh.googleusercontent.com/J9wreRc-4B_u4ZVV_JzQaX4y-DP3XT1FdogWOyMORN74RCuodiEZ0LOkyAcD9IX9",
    "https://play-lh.googleusercontent.com/n6QpslWQX3F9T41ijaN-m6_nrxxzgxUg2j6a_dvBnvDzg6IdEO1HNVGAGN8XXQktHg",
    "https://play-lh.googleusercontent.com/GxFWf_Dtc2qZBib6sUFRLnq0giEiHlz2UHAeteH5tXaR6axEAQoCh9IRXFSQY4AZ0Z4",
    "https://play-lh.googleusercontent.com/pEGVCsAmhqnYiZov5vQpxzRRCBzdh_ATOlx9m_wXweOzskRTz2w9P_cpMAVhqA5QcQ"
  ],
  "video": "https://www.youtube.com/embed/sHdqJFdEbzg?ps=play&vq=large&rel=0&autohide=1&showinfo=0",
  "videoImage": "https://play-lh.googleusercontent.com/iPKMWbRxgfaUTqYSezs2FgBQKRlJaSyCIR3aCkxHh2t6o28Z7zQOsn5v2CIsm11yoGU",
  "previewVideo": "https://play-games.googleusercontent.com/vp/mp4/1280x720/sHdqJFdEbzg.mp4",
  "contentRating": "Everyone",
  "contentRatingDescription": "Mild Fantasy Violence",
  "adSupported": false,
  "released": "Sep 23, 2019",
  "updated": 1709126357000,
  "version": "1.4.3",
  "recentChanges": "Bug Fixes and Improvements.",
  "comments": [
    null
  ],
  "preregister": false,
  "earlyAccessEnabled": false,
  "isAvailableInPlayPass": false,
  "appId": "com.coolmath_games.coolmath",
  "url": "https://play.google.com/store/apps/details?id=com.coolmath_games.coolmath&hl=en&gl=us"
}
```

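As a small illustration of working with an app details item, the sketch below recomputes the average rating from the `histogram` field and converts `updated` to a date. The values are copied from the sample above. Treating `updated` as milliseconds since the Unix epoch is an assumption based on the size of the number, and the helper names are made up for this example.

```python
from datetime import datetime, timezone

# A trimmed copy of the sample item above (only the fields used here).
app = {
    "score": 3.576,
    "histogram": {"1": 1256, "2": 279, "3": 400, "4": 466, "5": 2606},
    "updated": 1709126357000,
}


def mean_from_histogram(histogram):
    """Weighted mean of star ratings; keys are star counts stored as strings."""
    total = sum(histogram.values())
    if total == 0:
        return None
    weighted = sum(int(stars) * count for stars, count in histogram.items())
    return weighted / total


def updated_as_datetime(updated_ms):
    """Assumes the value is milliseconds since the Unix epoch (UTC)."""
    return datetime.fromtimestamp(updated_ms / 1000, tz=timezone.utc)


mean = mean_from_histogram(app["histogram"])
updated = updated_as_datetime(app["updated"])
print(f"histogram mean: {mean:.3f}, reported score: {app['score']}")
print(f"last updated: {updated.date().isoformat()}")
```

The recomputed mean lands very close to the reported `score`, which is a quick sanity check that the histogram and score describe the same set of ratings.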
⭐️ App review

```json
{
  "id": "0e5ec468-f8a3-4d3d-8b1f-24b1cbb5b309",
  "userName": "Logan Landman",
  "userImage": "https://play-lh.googleusercontent.com/a-/AD_cMMQLM0vtsmrJyz4OzNBTa55rUPIX66-XZ0p0ljkhdBBD2Ic",
  "date": "2023-07-03T20:58:35.428Z",
  "score": 5,
  "scoreText": "5",
  "url": "https://play.google.com/store/apps/details?id=com.rockstargames.gtasa&reviewId=0e5ec468-f8a3-4d3d-8b1f-24b1cbb5b309",
  "title": null,
  "text": "Legitametly great port for what it is. Many people complain about the downgrades, and yes these ports do somewhat have a reputation with the Definitive Editions, but the downgrades are minor and are honestly not much of a problem and really should've been expected. This port is great and perfect for on-the-go, controls can be a bit weird and frustrating but once you get used to them it's not much of a problem. Highly recommended.",
  "replyDate": null,
  "replyText": null,
  "version": "2.11.32",
  "thumbsUp": 132,
  "criterias": [
    {
      "criteria": "vaf_games_genre_shooting",
      "rating": 1
    },
    {
      "criteria": "vaf_games_massively_multi_player",
      "rating": 2
    },
    {
      "criteria": "vaf_games_subject_bmx",
      "rating": 3
    }
  ]
}
```
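Review items can be post-processed in a similar way. The sketch below parses the `date` field and lists reviews that have no developer reply yet, most upvoted first. The first record reuses values from the sample review; the other two are hypothetical rows added only to make the sorting visible, and the helper names are illustrative.

```python
from datetime import datetime, timezone


def parse_review_date(value):
    """Parse timestamps shaped like the sample: 2023-07-03T20:58:35.428Z."""
    parsed = datetime.strptime(value, "%Y-%m-%dT%H:%M:%S.%fZ")
    return parsed.replace(tzinfo=timezone.utc)


def unanswered_reviews(reviews):
    """Reviews without a developer reply, sorted by thumbsUp (highest first)."""
    pending = [r for r in reviews if not r.get("replyText")]
    return sorted(pending, key=lambda r: r.get("thumbsUp") or 0, reverse=True)


reviews = [
    # Values taken from the sample review above.
    {"id": "0e5ec468-f8a3-4d3d-8b1f-24b1cbb5b309", "date": "2023-07-03T20:58:35.428Z",
     "score": 5, "thumbsUp": 132, "replyText": None},
    # Hypothetical rows, not real scraper output.
    {"id": "hypothetical-1", "date": "2023-07-04T08:00:00.000Z",
     "score": 2, "thumbsUp": 3, "replyText": "Thanks for the feedback!"},
    {"id": "hypothetical-2", "date": "2023-07-05T09:30:00.000Z",
     "score": 1, "thumbsUp": 250, "replyText": None},
]

for review in unanswered_reviews(reviews):
    day = parse_review_date(review["date"]).date().isoformat()
    print(review["id"], day, review["thumbsUp"])
```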

📱 Want more tools for scraping games or app data?

Apify Store is always expanding with newer, more reliable and more versatile scrapers, contributed either by Apify or the Community. Feel free to explore some of the following web scrapers from the gaming category:

| 🎮 Steam Store Scraper | 🕹️ Playstation Store Scraper |
| --- | --- |
| 💬 Steam Reviews Scraper | 📊 App Store Scraper |
| 📱 Facebook Games Scraper | 📺 Twitch Recent Video Scraper |

❓FAQ

Does Google Play Store have an API?

No, Google Play doesn't have an official API for retrieving data on games, reviews, and developers. While there are developer-facing APIs such as the Google Play Developer API, they don't provide access to this app data.

This is why alternative solutions like Google Play Scraper exist. A scraper is essentially an API of its own if it allows exporting scraped data via an API.

Can I scrape Google Play app reviews if I don't have app IDs?

Yes. Just use Google Play Scraper in two runs. First, scrape app details using search or URL input. The output from this run will contain app IDs in the `appId` field. Then, collect the app IDs from that output and use them as the input for the second run. Done!
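The hand-off between the two runs can be sketched as follows. The only field relied on is `appId`, which appears in the app details sample; the function name and the extra rows marked hypothetical are illustrative. How the IDs are then passed to the second run depends on the Actor's input schema, so check the input tab for the exact field names.

```python
def collect_app_ids(app_items):
    """Return unique appId values in first-seen order, skipping items without one."""
    seen = set()
    app_ids = []
    for item in app_items:
        app_id = item.get("appId")
        if app_id and app_id not in seen:
            seen.add(app_id)
            app_ids.append(app_id)
    return app_ids


# Items as they might come back from the first (app details) run.
first_run_items = [
    {"title": "Coolmath Games Fun Mini Games", "appId": "com.coolmath_games.coolmath"},
    {"title": "Duplicate entry (hypothetical)", "appId": "com.coolmath_games.coolmath"},
    {"title": "Item without an ID (hypothetical)"},
    {"title": "Another app (hypothetical row)", "appId": "com.rockstargames.gtasa"},
]

print(collect_app_ids(first_run_items))
# ['com.coolmath_games.coolmath', 'com.rockstargames.gtasa']
```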

Can I integrate Google Play Scraper with other apps?

Yes. This Google Play Scraper can be connected with almost any cloud service or web app thanks to integrations on the Apify platform. You can integrate your Google Play data with Zapier, Slack, Make, Airbyte, GitHub, Google Drive, LangChain and more.

You can also use webhooks to carry out an action whenever an event occurs, e.g., get a notification whenever Google Play Scraper successfully finishes a run.

Can I use Google Play Scraper as its own Google Play Store API?

Yes, you can use the Apify API to access Google Play Scraper programmatically. The API allows you to manage, schedule, and run Apify Actors, access datasets, monitor performance, get results, create and update Actor versions, and more.

To access the API using Node.js or Python, you can use the apify-client package from NPM or PyPI. There are also API endpoints available for extracting Google Play data without a client. For detailed information and code examples, see the API tab or refer to the Apify API documentation.
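Here is a minimal Python sketch of that workflow using the `apify-client` package from PyPI. The environment variable names (`APIFY_TOKEN`, `APIFY_ACTOR_ID`, `APIFY_INPUT_FILE`) and the helper names are assumptions made for this example. The Actor ID and the input JSON are deliberately not hard-coded: copy both from the Actor's page (API and input tabs), since the exact input field names are not listed here.

```python
import json
import os


def load_run_input(text):
    """Parse Actor input copied from the input tab; it must be a JSON object."""
    data = json.loads(text)
    if not isinstance(data, dict):
        raise ValueError("Actor input must be a JSON object")
    return data


def run_and_fetch(actor_id, run_input, token):
    """Start the Actor, wait for it to finish, and return its dataset items."""
    # Imported lazily so load_run_input works without the package installed.
    from apify_client import ApifyClient  # pip install apify-client

    client = ApifyClient(token)
    run = client.actor(actor_id).call(run_input=run_input)
    if run is None:
        raise RuntimeError("Actor run did not finish")
    return list(client.dataset(run["defaultDatasetId"]).iterate_items())


if __name__ == "__main__":
    # Hypothetical variable names; adapt them to your own setup.
    token = os.environ.get("APIFY_TOKEN")
    actor_id = os.environ.get("APIFY_ACTOR_ID")
    input_path = os.environ.get("APIFY_INPUT_FILE")
    if token and actor_id and input_path:
        with open(input_path, encoding="utf-8") as handle:
            run_input = load_run_input(handle.read())
        for item in run_and_fetch(actor_id, run_input, token):
            # App items carry "appId"; review items carry "id".
            print(item.get("appId") or item.get("id"))
    else:
        print("Set APIFY_TOKEN, APIFY_ACTOR_ID and APIFY_INPUT_FILE to run this sketch.")
```

Keeping the input in a separate JSON file means the same script can serve both the app details run and the reviews run.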

Is it legal to scrape data from Google Play?

Note that personal data such as names is protected by GDPR in the European Union and by other regulations around the world. You should not scrape personal data unless you have a legitimate reason to do so. If you're unsure whether your reason is legitimate, consult your lawyers. We also recommend that you read our blog post: Is web scraping legal?

Your feedback

We’re always working on improving the performance of our Actors. So if you’ve got any technical feedback for Google Play Scraper or simply found a bug, please create an issue on the Actor’s Issues tab.

- Created in Jul 2023

Curious Coder
