Google Search Scraper For Live App

Pricing: Pay per usage



A simplified version of Google Search Scraper optimized for fast response times. Don't use it unless you really need a sub-5-second response, because it is less flexible and less reliable.


Rating: 1.0 (1 rating)

Developer: Lukáš Křivka

Maintained by Community

Actor stats: 1 bookmarked, 30 total users, 0 monthly active users, last modified 9 months ago


Google Search for Live apps

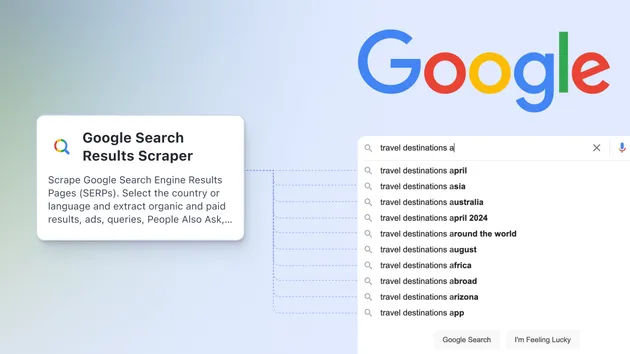

This is a simplified version of Google Search Results Scraper.

It uses the same parser, but it is optimized for the fastest possible response. The average response time should be around 4 seconds.

Don't use this actor unless you need a sub-5-second response time. For everything else, use Google Search Scraper.

Advantages compared to Google Search Results Scraper

- Faster response time

Disadvantages compared to Google Search Results Scraper

- The actor has to run continuously, so it consumes compute units for the whole time it is running

- It uses Residential proxies, which are more expensive than the dedicated Google SERP proxy

- Fewer settings and options
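To put the compute-unit cost of a continuously running actor into numbers: on Apify, one compute unit (CU) corresponds to 1 GB of memory running for 1 hour, so an always-on run uses roughly memory in GB × hours. The minimal sketch below, built around a hypothetical helper `compute_units`, does that arithmetic for the 512 MB and 1 GB memory sizes suggested under How to use. The price per CU depends on your Apify plan and is deliberately left out.

```python
def compute_units(memory_mb: int, hours: float) -> float:
    """Compute units used by a run: 1 CU = 1 GB (1024 MB) of memory for 1 hour."""
    return memory_mb / 1024 * hours


# An always-on run at the suggested memory sizes.
print(compute_units(512, 24))        # 12.0 CU per day at 512 MB
print(compute_units(1024, 24))       # 24.0 CU per day at 1 GB
print(compute_units(1024, 24 * 30))  # 720.0 CU for 30 days at 1 GB
```

Doubling the memory to handle higher loads doubles the CU consumption for the same uptime, which is why aborting the run when it is not needed matters.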

How to use

- Start the actor. 512 MB or 1 GB of memory should be enough; increase the memory if you need to handle higher loads.

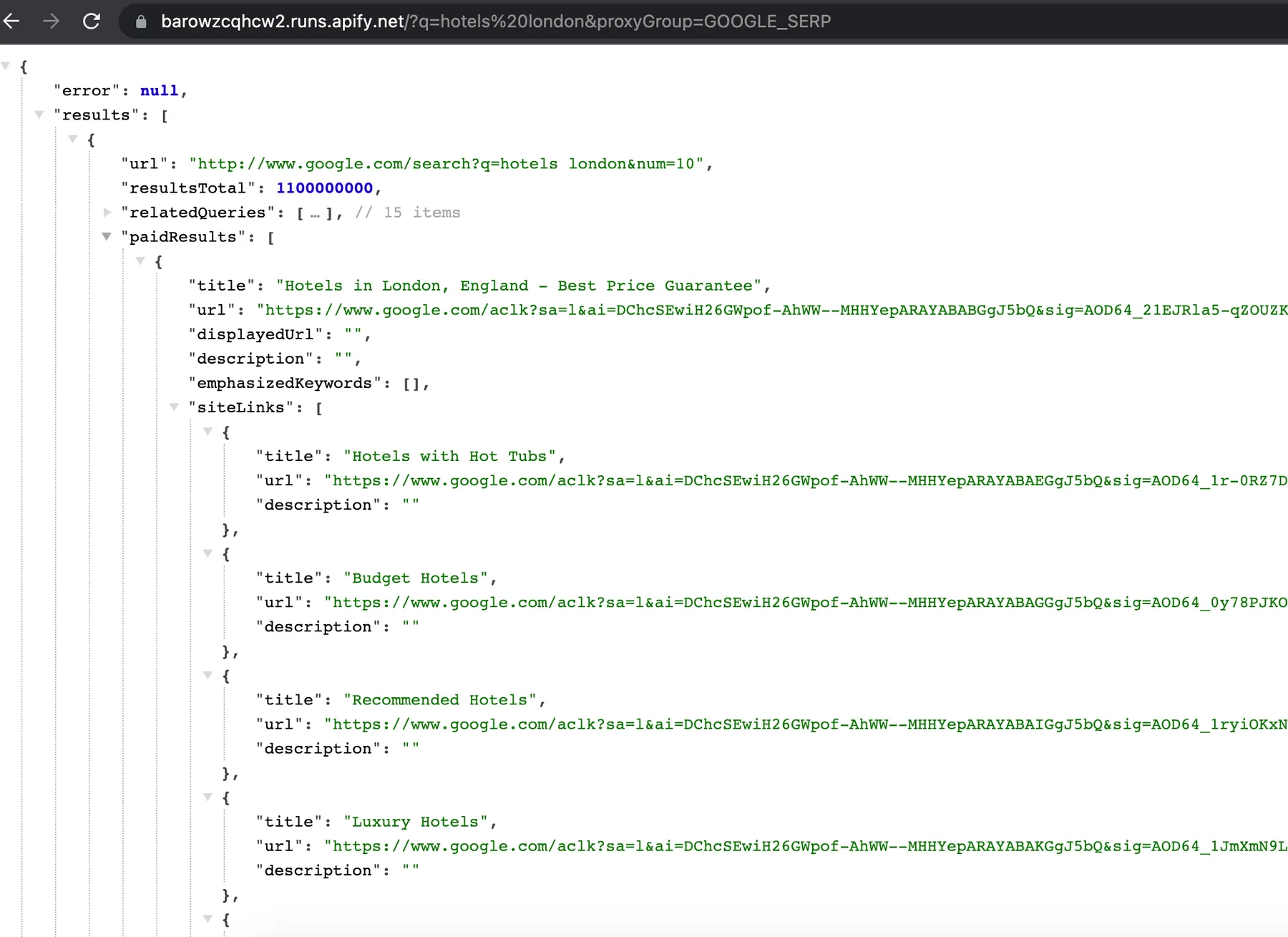

- Obtain the Container URL of the running actor, which now acts as the server for your requests. It looks something like https://0zxzjbqdzxvu.runs.apify.net. You can find it on the run page or in the log.

- Use this URL in your browser or app by adding query parameters, e.g. https://0zxzjbqdzxvu.runs.apify.net/?q=hotels+new+york

- Don't forget to abort the actor run once you no longer need it. You can always resurrect the run later, which keeps the same Container URL.
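The steps above can also be scripted. The following is a minimal Python sketch using only the standard library, not the author's own client. It assumes the Apify API v2 endpoints for running an actor (`POST /v2/acts/{actorId}/runs`), aborting a run (`POST /v2/actor-runs/{runId}/abort`) and resurrecting it (`POST /v2/actor-runs/{runId}/resurrect`), and that the returned run object exposes the Container URL in a `containerUrl` field. The actor ID and token are placeholders. The live actor's response is returned as raw text because its format is not described here, and you may need to wait a few seconds after starting the run before the server accepts requests.

```python
import json
import urllib.parse
import urllib.request

API_BASE = "https://api.apify.com/v2"


def api_request(path: str, token: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated POST request to the Apify API."""
    return urllib.request.Request(
        API_BASE + path,
        data=b"{}",  # empty JSON body; assumes the actor needs no input
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def call_api(path: str, token: str) -> dict:
    """Send the request and return the "data" object from the JSON response."""
    with urllib.request.urlopen(api_request(path, token), timeout=60) as resp:
        return json.load(resp)["data"]


def start_run(actor_id: str, token: str, memory_mb: int = 1024) -> dict:
    """Step 1: start the actor with the given memory; returns the run object."""
    # actor_id is a placeholder such as "<username>~<actor-name>".
    return call_api(f"/acts/{actor_id}/runs?memory={memory_mb}", token)


def search_url(container_url: str, query: str, **params: str) -> str:
    """Step 3: add the query parameters to the Container URL."""
    qs = urllib.parse.urlencode({"q": query, **params})
    return f"{container_url.rstrip('/')}/?{qs}"


def search(container_url: str, query: str, timeout: float = 10.0, **params: str) -> str:
    """Send one search request to the live actor and return the raw response body."""
    with urllib.request.urlopen(search_url(container_url, query, **params), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def abort_run(run_id: str, token: str) -> dict:
    """Step 4: abort the run once you no longer need it."""
    return call_api(f"/actor-runs/{run_id}/abort", token)


def resurrect_run(run_id: str, token: str) -> dict:
    """Resurrect the same run later to keep the same Container URL."""
    return call_api(f"/actor-runs/{run_id}/resurrect", token)


# Example flow (placeholders, not executed here):
# run = start_run("<username>~<actor-name>", "<APIFY_TOKEN>", memory_mb=1024)
# container_url = run["containerUrl"]  # step 2; field name assumed
# print(search(container_url, "hotels new york", num="20"))
# abort_run(run["id"], "<APIFY_TOKEN>")
```

`search_url("https://0zxzjbqdzxvu.runs.apify.net", "hotels new york")` reproduces the example URL from step 3, so the same helper works for pasting into a browser.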

Query parameters

- q: search query

- num: number of results to return (default 10, max 100)

- proxyGroup: Apify proxy group to use (default RESIDENTIAL). GOOGLE_SERP can be used but has variable speed (2-10 seconds). Datacenter proxies are the fastest but are often blocked.

- saveHtml: save the full HTML of the search results page (default false)

- saveHtmlToKeyValueStore: save the full HTML of the search results page to the default key-value store (default false). Useful for quick debugging.
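To build request URLs from code rather than by hand, here is a minimal Python sketch with a hypothetical helper `build_query` (not part of the actor). It encodes the parameters listed above with `urllib.parse.urlencode` and only sends values that differ from the documented defaults. The lower bound of 1 for `num` and the encoding of boolean flags as the string `true` are assumptions.

```python
from urllib.parse import urlencode


def build_query(
    q: str,
    num: int = 10,
    proxy_group: str = "RESIDENTIAL",
    save_html: bool = False,
    save_html_to_key_value_store: bool = False,
) -> str:
    """Encode the live actor's query parameters, omitting documented defaults."""
    if not q:
        raise ValueError("q (the search query) is required")
    if not 1 <= num <= 100:  # documented max is 100; the minimum of 1 is assumed
        raise ValueError("num must be between 1 and 100")
    params: dict[str, str] = {"q": q}
    if num != 10:
        params["num"] = str(num)
    if proxy_group != "RESIDENTIAL":
        params["proxyGroup"] = proxy_group
    # Assumption: the actor reads boolean flags as the string "true".
    if save_html:
        params["saveHtml"] = "true"
    if save_html_to_key_value_store:
        params["saveHtmlToKeyValueStore"] = "true"
    return urlencode(params)


print("https://0zxzjbqdzxvu.runs.apify.net/?" + build_query("hotels new york", num=20))
# https://0zxzjbqdzxvu.runs.apify.net/?q=hotels+new+york&num=20
```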

For simple testing, you can paste the URL into your browser.