Wikipedia API

This Wikipedia API gives you programmatic access to Wikipedia (and Fandom) data, with formatting and batch-processing options that the official Wikipedia API may not offer. Get data on article titles, page content, URLs, categories, revision data, and more. You can try the Wikipedia API for free, no credit card required.


Integrate Wikipedia API

Access the Wikipedia API using Python, JavaScript, CLI, cURL, OpenAPI, or MCP. Choose your preferred option and start extracting Wikipedia data in minutes.

Python


```python
from apify_client import ApifyClient

# Initialize the ApifyClient with your Apify API token
# Replace '<YOUR_API_TOKEN>' with your token.
client = ApifyClient("<YOUR_API_TOKEN>")

# Prepare the Actor input
run_input = {
    "pages": [
        "https://en.wikipedia.org/wiki/JavaScript",
        "https://terraria.fandom.com/wiki/Bosses",
    ]
}

# Run the Actor and wait for it to finish
run = client.actor("jupri/wiki-scraper").call(run_input=run_input)

# Fetch and print Actor results from the run's dataset (if there are any)
print("💾 Check your data here: https://console.apify.com/storage/datasets/" + run["defaultDatasetId"])
for item in client.dataset(run["defaultDatasetId"]).iterate_items():
    print(item)

# 📚 Want to learn more 📖? Go to → https://docs.apify.com/api/client/python/docs/quick-start
```

Get data with Wikipedia API

Extract MediaWiki data by providing Wikipedia or Fandom URLs or search terms. The MediaWiki Scraper returns structured JSON data with article titles, full page content, URLs, categories, and revision information.

Input

```json
{
  "pages": [
    "https://en.wikipedia.org/wiki/JavaScript",
    "https://terraria.fandom.com/wiki/Bosses"
  ]
}
```

Output (sample record for the Wikipedia article "Chocolate")

```json
{
  "title": "Chocolate",
  "lastmod": "2025-07-22T22:18:00.000Z",
  "sections": [
    {
      "content": [
        {
          "text": "For other uses, see Chocolate (disambiguation).",
          "type": "note",
          "links": ["..."]
        },
        "...",
        {
          "text": "Chocolate is a food made from roasted and ground cocoa beans...",
          "type": "paragraph",
          "links": [
            {
              "pos": 49,
              "href": "https://en.wikipedia.org/wiki/Cocoa_bean",
              "text": "cocoa beans",
              "title": "Cocoa bean"
            },
            "..."
          ]
        }
      ]
    },
    "..."
  ],
  "languages": [
    {
      "href": "https://af.wikipedia.org/wiki/Sjokolade",
      "lang": "af",
      "title": "Sjokolade",
      "autonym": "Afrikaans",
      "localName": "Afrikaans"
    },
    "..."
  ],
  "categories": [
    {
      "text": "Chocolate",
      "links": ["..."]
    },
    "..."
  ],
  "description": "Food produced from cacao seeds",
  "numLanguages": 154
}
```

01. Sign up for an Apify account

Creating an account is quick and free, with no credit card required. Your account gives you access to more than 5,000 scrapers and APIs.

02. Get your Apify API token

Go to settings in the Apify console and navigate to the “API & Integrations” tab. There, create a new token and save it for later.
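Rather than pasting the token into your source code, you can keep it in an environment variable and read it at startup. A minimal sketch, assuming a variable named `APIFY_TOKEN`; both that name and the helper `load_apify_token` are our own illustrative choices, not something the platform requires:

```python
import os


def load_apify_token(var_name: str = "APIFY_TOKEN") -> str:
    """Return the Apify API token stored in an environment variable.

    The default variable name APIFY_TOKEN is an assumption; use whatever
    name your deployment or secrets manager provides.
    """
    token = os.environ.get(var_name, "").strip()
    if not token:
        # Fail early with a clear message instead of sending an empty token.
        raise RuntimeError(f"Set the {var_name} environment variable to your Apify API token")
    return token
```

You would then pass `load_apify_token()` to `ApifyClient(...)` in place of the literal `"<YOUR_API_TOKEN>"` string.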

03. Integrate Wikipedia API

Open the Wikipedia API page and click the API dropdown menu in the top-right corner. The dropdown lists API clients, API endpoints, and more.
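If you prefer calling an HTTP endpoint directly over installing a client library, the sketch below posts the Actor input to the Apify API v2 `run-sync-get-dataset-items` endpoint using only the Python standard library. It assumes that endpoint path, the `username~actor-name` form of the Actor ID in URLs, and Bearer-token authentication; compare against the exact URL listed under API endpoints in the dropdown before relying on it. The helper names `run_sync_url` and `run_actor_sync` are hypothetical.

```python
import json
import urllib.parse
import urllib.request

API_BASE = "https://api.apify.com/v2"  # assumed Apify API v2 base URL


def run_sync_url(actor_id: str) -> str:
    """Build the run-sync-get-dataset-items URL for an Actor.

    In API paths, the "username/actor-name" separator is written as "~".
    """
    path_id = urllib.parse.quote(actor_id.replace("/", "~"), safe="~")
    return f"{API_BASE}/acts/{path_id}/run-sync-get-dataset-items"


def run_actor_sync(actor_id: str, token: str, run_input: dict, timeout: float = 300.0) -> list:
    """POST the input, block until the run finishes, and return its dataset items."""
    request = urllib.request.Request(
        run_sync_url(actor_id),
        data=json.dumps(run_input).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # keeps the token out of the URL
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `run_actor_sync("jupri/wiki-scraper", token, {"pages": ["https://en.wikipedia.org/wiki/JavaScript"]})` would return the scraped records as a list. A synchronous call blocks until the run ends and may time out on large inputs, so for long runs the client-based `call()` approach in the Python example is likely the safer choice.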

04. Get your Wikipedia data via API

You can now call the API and retrieve the Wikipedia data you need as structured JSON.
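Each record follows the shape of the sample output: `sections` hold `content` blocks that carry a `type`, `text`, and `links`. Assuming that shape, and skipping any entry that is not an object (such as the `"..."` placeholders in the sample), a minimal sketch for pulling out paragraph text and linked article titles might look like this. `paragraph_blocks` and `summarize` are hypothetical helper names.

```python
from typing import Any, Dict, Iterator


def paragraph_blocks(item: Dict[str, Any]) -> Iterator[Dict[str, Any]]:
    """Yield every content block of type "paragraph" in one article record."""
    for section in item.get("sections", []):
        if not isinstance(section, dict):
            continue  # skip non-object entries
        for block in section.get("content", []):
            if isinstance(block, dict) and block.get("type") == "paragraph":
                yield block


def summarize(item: Dict[str, Any]) -> Dict[str, Any]:
    """Reduce a record to its title, paragraph texts, and linked article titles."""
    paragraphs = [block.get("text", "") for block in paragraph_blocks(item)]
    links = [
        link["title"]
        for block in paragraph_blocks(item)
        for link in block.get("links", [])
        if isinstance(link, dict) and "title" in link
    ]
    return {"title": item.get("title"), "paragraphs": paragraphs, "links": links}
```

You could apply it to each record from the Python example, e.g. `for item in client.dataset(run["defaultDatasetId"]).iterate_items(): print(summarize(item))`.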

Why use Apify?

Never get blocked

Every plan (free included) comes with Apify Proxy, which helps you avoid blocking and gives you access to geo-specific content.

Customers love us

We truly care about our users' satisfaction, and thanks to that, we're one of the best-rated data extraction platforms on both G2 and Capterra.

Monitor your runs

With our latest monitoring features, you always have immediate access to valuable insights on the status of your web scraping tasks.

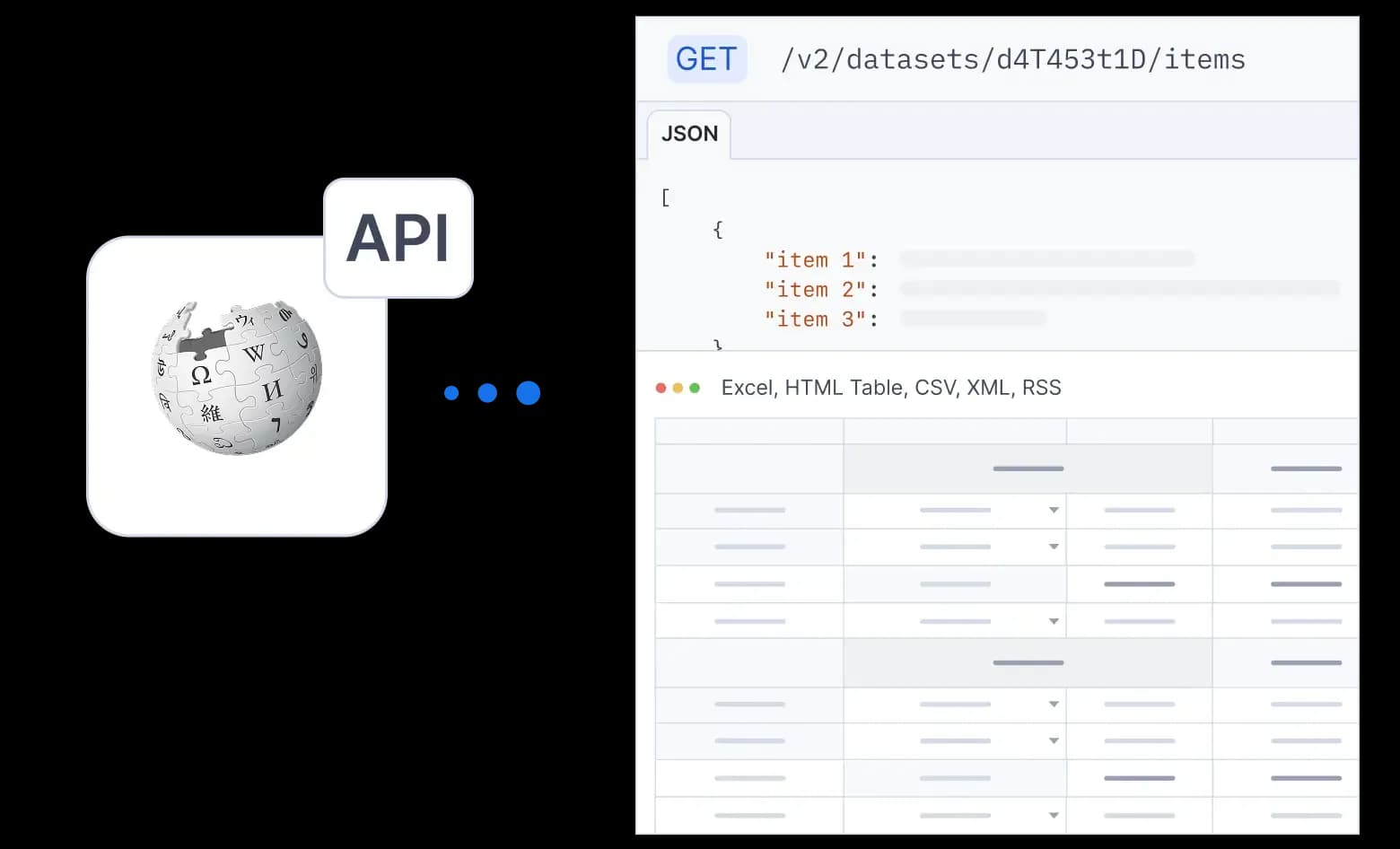

Export to various formats

Your datasets can be exported to any format that suits your data workflow, including Excel, CSV, JSON, XML, HTML table, JSONL, and RSS.
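The same exports can be fetched programmatically. The sketch below builds a download URL for a dataset's items in a chosen format; it assumes the Apify API v2 `datasets/{datasetId}/items` endpoint with a `format` query parameter and the format names in `EXPORT_FORMATS` (with `xlsx` standing in for Excel). Verify both against the Apify API reference; `dataset_export_url` is a hypothetical helper.

```python
from urllib.parse import quote, urlencode

# Assumed format names for the dataset items endpoint; "xlsx" is Excel.
EXPORT_FORMATS = {"json", "jsonl", "csv", "xlsx", "xml", "html", "rss"}


def dataset_export_url(dataset_id: str, fmt: str = "json") -> str:
    """Return a download URL for a dataset's items in the requested format."""
    fmt = fmt.lower()
    if fmt not in EXPORT_FORMATS:
        raise ValueError(f"unsupported format {fmt!r}; expected one of {sorted(EXPORT_FORMATS)}")
    query = urlencode({"format": fmt})
    return f"https://api.apify.com/v2/datasets/{quote(dataset_id, safe='')}/items?{query}"
```

For example, `dataset_export_url(run["defaultDatasetId"], "csv")` would give a CSV link for the run in the Python example. Depending on the dataset's access settings, you may also need to send your API token with the request.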

Integrate Apify into your workflow

You can integrate your Apify runs with platforms such as Zapier, Make, Keboola, Google Drive, or GitHub. Connect with practically any cloud service or web app.

Large developer community

Apify is built by developers, so you'll be in good hands if you have any technical questions. Our Discord server is always here to help!

Get AI-ready Wikipedia data via API

Connect to hundreds of apps right away using ready-made integrations, or set up your own with webhooks and our API.
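When a webhook fires, your endpoint receives a JSON payload describing the event. Assuming Apify's default payload template (an `eventType` field such as `ACTOR.RUN.SUCCEEDED` and a `resource` object holding the run, including its `defaultDatasetId`), a receiver could pick out the dataset to fetch as sketched below. The function name is hypothetical, and the payload shape should be checked against the template configured on your webhook.

```python
import json
from typing import Optional


def dataset_id_from_webhook(body: bytes) -> Optional[str]:
    """Return the run's dataset ID for a succeeded-run webhook, otherwise None.

    Assumes the default payload shape: "eventType" plus a "resource"
    object containing the run, including "defaultDatasetId".
    """
    payload = json.loads(body.decode("utf-8"))
    if payload.get("eventType") != "ACTOR.RUN.SUCCEEDED":
        return None  # ignore failed, aborted, or other events
    return payload.get("resource", {}).get("defaultDatasetId")
```

Your web framework's request handler would pass the raw request body to this function and, when it returns an ID, fetch that dataset's items.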

Does Wikipedia have an official API?

Yes, Wikipedia provides an official API at https://en.wikipedia.org/api/rest_v1/ for accessing articles and metadata. However, the MediaWiki Scraper offers additional functionality: it extracts both Wikipedia and Fandom content and adds formatting options and batch processing capabilities that may not be available through the standard API.
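For comparison, here is a minimal sketch of calling the official REST API directly, assuming its `page/summary/{title}` endpoint. Wikimedia asks API clients to identify themselves with a descriptive `User-Agent` header, so the sketch requires one. `summary_url` and `fetch_summary` are hypothetical helper names.

```python
import json
import urllib.parse
import urllib.request

REST_BASE = "https://en.wikipedia.org/api/rest_v1"


def summary_url(title: str) -> str:
    """Build the REST v1 page-summary URL for an English Wikipedia article."""
    # Titles use underscores for spaces; everything else is percent-encoded.
    encoded = urllib.parse.quote(title.replace(" ", "_"), safe="")
    return f"{REST_BASE}/page/summary/{encoded}"


def fetch_summary(title: str, user_agent: str, timeout: float = 30.0) -> dict:
    """Fetch one article summary as a dict, sending the required User-Agent."""
    request = urllib.request.Request(summary_url(title), headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `fetch_summary("Chocolate", "MyWikiTool/0.1 (you@example.com)")` would return Wikipedia's own summary object (including a short `extract`), one article per request, which is where the scraper's batch processing can save work.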

Can I try the MediaWiki Scraper for free?

Yes, you can try the MediaWiki Scraper for free on Apify. New users receive free platform credits to test the Actor. After that, it's available for $30.00/month plus usage-based pricing, depending on the amount of data extracted and the compute resources consumed.

What data can I extract with the MediaWiki Scraper?

The MediaWiki Scraper can extract article titles, full page content, URLs, categories, revision history, last modification dates, infobox data, images, links, references, headers, tables, and lists. It works with both Wikipedia and Fandom wikis and returns structured JSON output.

Is it legal to scrape Wikipedia?

Yes, it's legal to scrape Wikipedia data. Wikipedia content is available under Creative Commons licenses, and Wikipedia encourages reuse. Fandom content follows similar open licensing policies. However, you should always respect each platform's terms of service, keep request rates reasonable, and attribute the content as the respective licenses require.
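One simple way to keep request rates reasonable is to enforce a minimum delay between consecutive requests. The sketch below is a generic throttle, not part of the MediaWiki Scraper or of Apify; the interval is your choice, and the one-second value in the usage note is an arbitrary example rather than a documented limit. Injecting `clock` and `sleep` keeps the class easy to test.

```python
import time
from typing import Callable, Optional


class Throttle:
    """Enforce a minimum interval, in seconds, between consecutive requests."""

    def __init__(
        self,
        min_interval: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None  # time of the previous request

    def wait(self) -> None:
        """Sleep just long enough that calls are at least min_interval apart."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

Create one instance, e.g. `throttle = Throttle(1.0)`, and call `throttle.wait()` before each request you send.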

How do I get started?

Getting started is simple: 1) Sign up for a free Apify account, 2) Navigate to the MediaWiki Scraper Actor page, 3) Configure your input parameters with Wikipedia or Fandom URLs or search terms, 4) Run the Actor and download your structured JSON results. You can also integrate it via API for automated data extraction workflows.