GPT Scraper

Try for free

Pay $9.00 for 1,000 pages

View all Actors

GPT Scraper

drobnikj/gpt-scraper

Try for free

Pay $9.00 for 1,000 pages

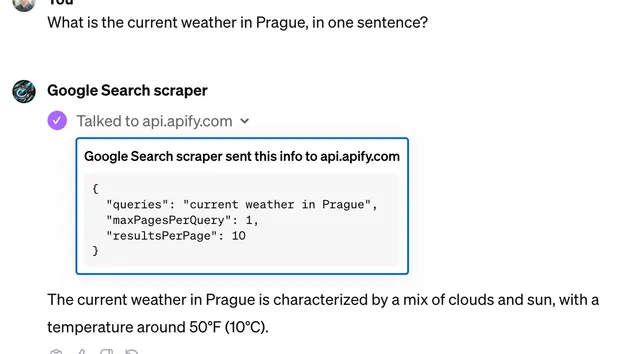

Extract data from any website and feed it into GPT via the OpenAI API. Use ChatGPT to proofread content, analyze sentiment, summarize reviews, extract contact details, and much more.

Do you want to learn more about this Actor?

Get a demoThe code examples below show how to run the Actor and get its results. To run the code, you need to have an Apify account. Replace <YOUR_API_TOKEN> in the code with your API token, which you can find under Settings > Integrations in Apify Console. Learn more

1from apify_client import ApifyClient

2

3# Initialize the ApifyClient with your Apify API token

4client = ApifyClient("<YOUR_API_TOKEN>")

5

6# Prepare the Actor input

7run_input = {

8 "startUrls": [{ "url": "https://news.ycombinator.com/" }],

9 "instructions": """Gets the post with the most points from the page and returns it as JSON in this format:

10postTitle

11postUrl

12pointsCount""",

13 "includeUrlGlobs": [],

14 "excludeUrlGlobs": [],

15 "linkSelector": "a[href]",

16 "initialCookies": [],

17 "proxyConfiguration": { "useApifyProxy": True },

18 "targetSelector": "",

19 "removeElementsCssSelector": "script, style, noscript, path, svg, xlink",

20 "schema": {

21 "type": "object",

22 "properties": {

23 "title": {

24 "type": "string",

25 "description": "Page title",

26 },

27 "description": {

28 "type": "string",

29 "description": "Page description",

30 },

31 },

32 "required": [

33 "title",

34 "description",

35 ],

36 },

37 "schemaDescription": "",

38}

39

40# Run the Actor and wait for it to finish

41run = client.actor("drobnikj/gpt-scraper").call(run_input=run_input)

42

43# Fetch and print Actor results from the run's dataset (if there are any)

44print("💾 Check your data here: https://console.apify.com/storage/datasets/" + run["defaultDatasetId"])

45for item in client.dataset(run["defaultDatasetId"]).iterate_items():

46 print(item)

47

48# 📚 Want to learn more 📖? Go to → https://docs.apify.com/api/client/python/docs/quick-startDeveloper

Maintained by Apify

Actor metrics

- 221 monthly users

- 45 stars

- 99.0% runs succeeded

- 1.8 days response time

- Created in Mar 2023

- Modified 27 days ago

Categories

Jakub Drobník

Jakub Drobník