7 days trial then $40.00/month - No credit card required now

Facebook Comments Scraper

7 days trial then $40.00/month - No credit card required now

Extract data from hundreds of Facebook comments from one or multiple Facebook posts. Get comment text, timestamp, likes count and basic commenter info. Download the data in JSON, CSV, Excel and use it in apps, spreadsheets, and reports.

What is Facebook Comments Scraper?

It's a simple and powerful tool that allows you to extract data from Facebook comments. To get that data, just insert the post URL of a post, video, picture, or reel and click the "Save & Start" button.

What Facebook comments data can I extract?

With this Facebook API, you will be able to extract the following data from Facebook:

| 📝 Post text | 🔗 Post URL |

| 💬 Comment text | 🧛 Basic commentator info |

| 🕐 Timestamp | 👍 Likes count |

Why scrape Facebook comments?

🤬 Analyze social media and identify hot spots of misinformation or hate speech

🔎 Conduct market research or analysis

🤺 Monitor competition

🤔 Track brand sentiment and shifts in customer engagement

How do I use Facebook Comments Scraper?

Facebook Comments Scraper was designed to be easy to start with even if you've never extracted data from the web before. Here's how you can scrape Facebook data with this tool:

- Create a free Apify account using your email.

- Open Facebook Comments Scraper.

- Add one or more Facebook post URLs to scrape its comments.

- Click "Start" and wait for the data to be extracted.

- Download your data in JSON, XML, CSV, Excel, or HTML.

If you need guidance on how to run the scraper, you can follow our step-by-step tutorial 🔗

Input

The input for Facebook Comments Scraper should be URLs of Facebook posts you want to scrape comments from. Click on the input tab for a full explanation of input in JSON.

1{ 2 "persistCookiesPerSession": false, 3 "proxy": { 4 "useApifyProxy": true, 5 "apifyProxyGroups": [ 6 "RESIDENTIAL" 7 ] 8 }, 9 "resultsLimit": 500, 10 "startUrls": [ 11 { 12 "url": "https://www.facebook.com/humansofnewyork/posts/pfbid0BbKbkisExKGSKuhee9a7i86RwRuMKFC8NSkKStB7CsM3uXJuAAfZLrkcJMXxhH4Yl" 13 } 14 ], 15 "useSessionPool": true, 16 "viewOption": "RANKED_UNFILTERED", 17 "maxRequestRetries": 10 18} 19...

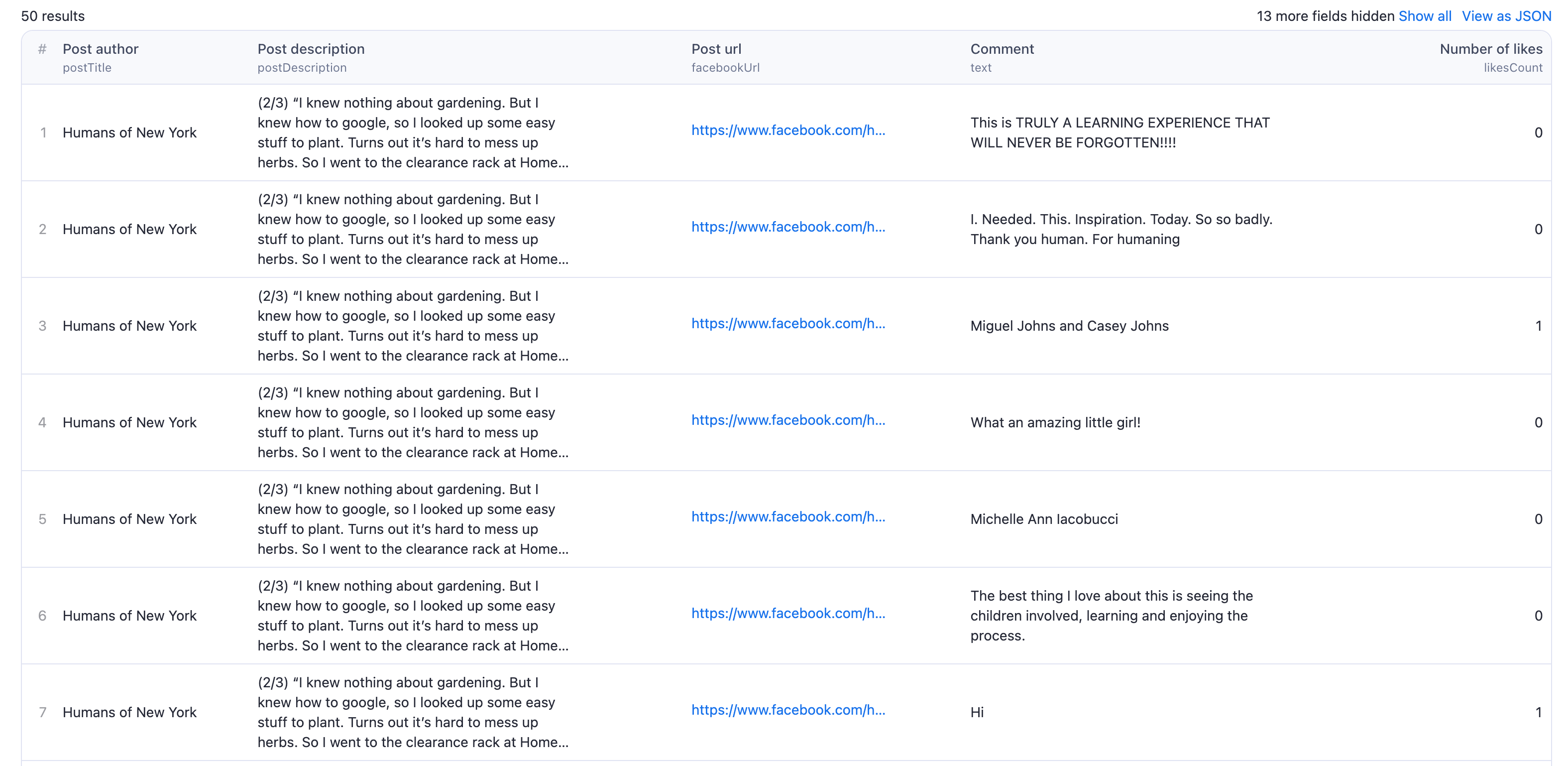

Output sample

The results will be wrapped into a dataset which you can find in the Storage tab. Here's an excerpt from the dataset you'd get if you apply the input parameters above:

And here is the same data but in JSON. You can choose in which format to download your Facebook data: JSON, JSONL, Excel spreadsheet, HTML table, CSV, or XML.

1[{ 2 "postId": "6375219342552112", 3 "postTitle": "Humans of New York", 4 "postDescription": "(2/3) “I knew nothing about gardening. But I knew how to google, so I looked up some easy stuff to plant. Turns out it’s hard to mess up herbs. So I went to the clearance rack at Home Depot and got...", 5 "id": "Y29tbWVudDo2Mzc1MjE5MzQyNTUyMTEyXzU2NTM2NzE3NTU0NDkwNQ==", 6 "feedbackId": "ZmVlZGJhY2s6NjM3NTIxOTM0MjU1MjExMl81NjUzNjcxNzU1NDQ5MDU=", 7 "date": "2023-02-12T05:37:43.000Z", 8 "text": "This is TRULY A LEARNING EXPERIENCE THAT WILL NEVER BE FORGOTTEN!!!!", 9 "profilePicture": "https://scontent-mia3-1.xx.fbcdn.net/v/t39.30808-1/317072515_520710776774518_3064683200933310383_n.jpg?stp=cp0_dst-jpg_p32x32&_nc_cat=104&ccb=1-7&_nc_sid=7206a8&_nc_ohc=WcDZ7w-7WE8AX8yVAxF&_nc_ht=scontent-mia3-1.xx&oh=00_AfCak9L4GpMwGM8u4gLdyPW23ibkJz-CQxKrZfSk4PkVSw&oe=63F8E120", 10 "profileId": "pfbid07jy5LgebfAw3rfJUermdhPhLviQ5hkpzbpz5jFQqPM1QAvvqqRmXZZYRqiLtfH8sl", 11 "profileName": "Rose Bennett", 12 "likesCount": 0, 13 "facebookUrl": "https://www.facebook.com/humansofnewyork/posts/pfbid0BbKbkisExKGSKuhee9a7i86RwRuMKFC8NSkKStB7CsM3uXJuAAfZLrkcJMXxhH4Yl" 14}, 15{ 16 "postId": "6375219342552112", 17 "postTitle": "Humans of New York", 18 "postDescription": "(2/3) “I knew nothing about gardening. But I knew how to google, so I looked up some easy stuff to plant. Turns out it’s hard to mess up herbs. So I went to the clearance rack at Home Depot and got...", 19 "id": "Y29tbWVudDo2Mzc1MjE5MzQyNTUyMTEyXzczMjAwMDAxNzk2OTk4Mw==", 20 "feedbackId": "ZmVlZGJhY2s6NjM3NTIxOTM0MjU1MjExMl83MzIwMDAwMTc5Njk5ODM=", 21 "date": "2022-05-03T14:38:08.000Z", 22 "text": "I. Needed. This. Inspiration. Today. So so badly. Thank you human. For humaning", 23 "profilePicture": "https://scontent-mia3-2.xx.fbcdn.net/v/t39.30808-1/329184498_2342106232636137_1760055814657808969_n.jpg?stp=cp0_dst-jpg_p32x32&_nc_cat=109&ccb=1-7&_nc_sid=7206a8&_nc_ohc=ugsgNEZ46E4AX8aAPyg&_nc_ht=scontent-mia3-2.xx&oh=00_AfBpd4oe3KkyTYvGZHRPNKXV8YCswDX6khNWgtFVlxOX2A&oe=63F97E6C", 24 "profileId": "pfbid0Fme3crqcE7DZerMLnEg5R3EPuzYzAZc2v9rfQxZurAVHWmcEmGP7Rht1vDTgH7Eel", 25 "profileName": "Stacey Petersen", 26 "likesCount": 0, 27 "facebookUrl": "https://www.facebook.com/humansofnewyork/posts/pfbid0BbKbkisExKGSKuhee9a7i86RwRuMKFC8NSkKStB7CsM3uXJuAAfZLrkcJMXxhH4Yl" 28}, 29{ 30 "postId": "6375219342552112", 31 "postTitle": "Humans of New York", 32 "postDescription": "(2/3) “I knew nothing about gardening. But I knew how to google, so I looked up some easy stuff to plant. Turns out it’s hard to mess up herbs. So I went to the clearance rack at Home Depot and got...", 33 "id": "Y29tbWVudDo2Mzc1MjE5MzQyNTUyMTEyXzU4NDI1ODU5NjAyOTg4Nw==", 34 "feedbackId": "ZmVlZGJhY2s6NjM3NTIxOTM0MjU1MjExMl81ODQyNTg1OTYwMjk4ODc=", 35 "date": "2021-10-07T22:19:49.000Z", 36 "text": "Miguel Johns and Casey Johns", 37 "profilePicture": "https://scontent-mia3-1.xx.fbcdn.net/v/t39.30808-1/324423766_737675124553862_3632400898456577811_n.jpg?stp=cp0_dst-jpg_p32x32&_nc_cat=101&ccb=1-7&_nc_sid=7206a8&_nc_ohc=K7DS6wA_j60AX9iNrSV&_nc_ht=scontent-mia3-1.xx&oh=00_AfCIgjBvR9bffDd1AciNyh8er4Gk9NfYpVQuwZC6cSgHug&oe=63F90193", 38 "profileId": "pfbid02WF8smYb1AnodsBGVz31nGMmPrSRXQe29sj7cC1PbU1LLYwTv82DrCPf3pkiVD3PAl", 39 "profileName": "Erika Powell", 40 "likesCount": 1, 41 "facebookUrl": "https://www.facebook.com/humansofnewyork/posts/pfbid0BbKbkisExKGSKuhee9a7i86RwRuMKFC8NSkKStB7CsM3uXJuAAfZLrkcJMXxhH4Yl" 42}, 43{ 44 "postId": "6375219342552112", 45 "postTitle": "Humans of New York", 46 "postDescription": "(2/3) “I knew nothing about gardening. But I knew how to google, so I looked up some easy stuff to plant. Turns out it’s hard to mess up herbs. So I went to the clearance rack at Home Depot and got...", 47 "id": "Y29tbWVudDo2Mzc1MjE5MzQyNTUyMTEyXzYwOTI1NTUwMzQyNTYwNQ==", 48 "feedbackId": "ZmVlZGJhY2s6NjM3NTIxOTM0MjU1MjExMl82MDkyNTU1MDM0MjU2MDU=", 49 "date": "2021-09-19T07:51:30.000Z", 50 "text": "What an amazing little girl!", 51 "profileUrl": "https://www.facebook.com/sophie.kathir", 52 "profilePicture": "https://scontent-mia3-1.xx.fbcdn.net/v/t39.30808-1/320826684_857375728912000_4654536252176073685_n.jpg?stp=cp1_dst-jpg_p32x32&_nc_cat=100&ccb=1-7&_nc_sid=7206a8&_nc_ohc=INqGrL1PD2MAX_BiL0R&_nc_ht=scontent-mia3-1.xx&oh=00_AfCf9aMsE8VHEy7eiG_QbbYjLyLNNbZ55ldwUFFWI42IaA&oe=63F99A60", 53 "profileId": "pfbid02eMBDMpxzsZ9cQBnqXE2E7wWHFwwkAERWv6KE42qEKMeP1PtpUQ8rkiNdBDRE1VhPl", 54 "profileName": "Sophie Kathir", 55 "likesCount": 0, 56 "facebookUrl": "https://www.facebook.com/humansofnewyork/posts/pfbid0BbKbkisExKGSKuhee9a7i86RwRuMKFC8NSkKStB7CsM3uXJuAAfZLrkcJMXxhH4Yl" 57}, 58...

How many results can you scrape with Facebook Comments scraper?

Facebook Comments scraper can return up to 2000 - 3000 results on average. However, you have to keep in mind that scraping facebook.com has many variables to it and may cause the results to fluctuate case by case. There’s no one-size-fits-all-use-cases number. The maximum number of results may vary depending on the complexity of the input, location, and other factors. Some of the most frequent cases are:

- website gives a different number of results depending on the type/value of the input

- website has an internal limit that no scraper can cross

- scraper has a limit that we are working on improving

Therefore, while we regularly run Actor tests to keep the benchmarks in check, the results may also fluctuate without our knowing. The best way to know for sure for your particular use case is to do a test run yourself.

How much will scraping Facebook Comments cost you?

When it comes to scraping, it can be challenging to estimate the resources needed to extract data as use cases may vary significantly. That's why the best course of action is to run a test scrape with a small sample of input data and limited output. You’ll get your price per scrape, which you’ll then multiply by the number of scrapes you intend to do.

Watch this video for a few helpful tips. And don't forget that choosing a higher plan will save you money in the long run.

Want to scrape Facebook groups or posts?

You can use the dedicated scrapers below if you want to scrape specific Facebook data. Each of them is built particularly for the relevant Facebook scraping case be it a group, reviews, posts or photos. Feel free to browse them:

Not your cup of tea? Build your own scraper

Facebook Comments Scraper doesn’t exactly do what you need? You can always build your own! We have various scraper templates in Python, JavaScript, and TypeScript to get you started. Alternatively, you can write it from scratch using our open-source library Crawlee. You can keep the scraper to yourself or make it public by adding it to Apify Store (and find users for it).

Or let us know if you need a custom scraping solution.

Integrations and Facebook Comments Scraper

Last but not least, Facebook Comments Scraper can be connected with almost any cloud service or web app thanks to integrations on the Apify platform. You can integrate with Make, Asana, Zapier, Slack, Airbyte, GitHub, Google Sheets, Google Drive, and more.

You can also use webhooks to carry out an action whenever an event occurs, e.g., get a notification whenever Facebook Comments Scraper successfully finishes a run.

Using Facebook Comments Scraper with the Apify API

The Apify API gives you programmatic access to the Apify platform. The API is organized around RESTful HTTP endpoints that enable you to manage, schedule, and run Apify actors. The API also lets you access any datasets, monitor actor performance, fetch results, create and update versions, and more. To access the API using Node.js, use the apify-client NPM package. To access the API using Python, use the apify-client PyPI package.

Check out the Apify API reference docs for full details or click on the API tab for code examples.

Is it legal to scrape Facebook Comments data?

Our Facebook scrapers are ethical and do not extract any private user data, such as email addresses, gender, or location. They only extract what the user has chosen to share publicly. However, you should be aware that your results could contain personal data. You should not scrape personal data unless you have a legitimate reason to do so.

If you're unsure whether your reason is legitimate, consult your lawyers. You can also read our blog post on the legality of web scraping and ethical scraping.

Your feedback

We’re always working on improving the performance of our Actors. So if you’ve got any technical feedback for Facebook Comments Scraper or simply found a bug, please create an issue on the Actor’s Issues tab in Apify Console.

- 394 monthly users

- 99.8% runs succeeded

- 1.8 days response time

- Created in Nov 2022

- Modified 9 days ago

Apify

Apify