Break Up Compass

Pricing: Pay per usage


Rating: 0.0 (0 ratings)

Developer: Met-A

Actor stats: 0 bookmarked, 3 total users, 2 monthly active users

Last modified: 3 months ago


Python Crawlee & BeautifulSoup Actor Template

This template was built with Crawlee for Python. It scrapes data from a website using Beautiful Soup, wrapped in Crawlee's BeautifulSoupCrawler.

Quick Start

Once you've installed the dependencies, start the Actor locally:

$ apify run

Once your Actor is ready, you can push it to the Apify Console:

$ apify login

$ apify push

Project Structure

For more information, see the Actor definition documentation.

How it works

This code is a Python script that uses Beautiful Soup to scrape data from a website. It then stores the title and URL of each page in a dataset.

- The crawler starts with the URLs provided in the startUrls field defined by the input schema. The number of scraped pages is limited by the maxPagesPerCrawl field from the input schema.

- The crawler uses requestHandler for each URL to extract data from the page with the Beautiful Soup library and to save the title and URL of each page to the dataset. It also logs each result as it is saved.
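The flow above can be approximated without Crawlee or network access. The following is a stdlib-only sketch, not the template's code. Python's html.parser stands in for Beautiful Soup, and a dict of fake pages stands in for HTTP fetching. The names request_handler, crawl and FAKE_SITE are hypothetical. The sketch also assumes that the crawler follows links it finds on each page, since that is what makes the maxPagesPerCrawl limit meaningful; the description above does not state this explicitly.

```python
from __future__ import annotations

import logging
from collections import deque
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urljoin

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("crawler-sketch")


class TitleAndLinkParser(HTMLParser):
    """Collects the <title> text and every <a href> value on one page."""

    def __init__(self) -> None:
        super().__init__()
        self.title: str | None = None
        self.links: list[str] = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title = (self.title or "") + data


def request_handler(url: str, html: str, dataset: list[dict]) -> list[str]:
    """Hypothetical stand-in for the template's requestHandler: parse the page,
    save its title and URL to the dataset, log the item, return absolute links."""
    parser = TitleAndLinkParser()
    parser.feed(html)
    parser.close()
    item = {"url": url, "title": parser.title}
    log.info("Saving %s", item)
    dataset.append(item)
    return [urljoin(url, href) for href in parser.links]


def crawl(start_urls: list[str], max_pages_per_crawl: int,
          fetch: Callable[[str], str]) -> list[dict]:
    """Breadth-first crawl from start_urls, stopping after max_pages_per_crawl pages.
    Following discovered links is an assumption of this sketch."""
    dataset: list[dict] = []
    queue = deque(start_urls)
    seen = set(start_urls)
    pages = 0
    while queue and pages < max_pages_per_crawl:
        url = queue.popleft()
        html = fetch(url)
        pages += 1
        for link in request_handler(url, html, dataset):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return dataset


# Hypothetical in-memory "website" so the sketch runs without network access.
FAKE_SITE = {
    "https://example.com/": "<title>Home</title><a href='/a'>A</a> <a href='/b'>B</a>",
    "https://example.com/a": "<title>Page A</title><a href='/'>Home</a>",
    "https://example.com/b": "<title>Page B</title>",
}

results = crawl(["https://example.com/"], max_pages_per_crawl=2, fetch=FAKE_SITE.__getitem__)
for item in results:
    print(item)
```

With max_pages_per_crawl=2, the crawl stops after two pages even though the fake site has three. Every saved item has the same url and title attributes, which is the uniform shape a dataset expects.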

What's included

- Apify SDK - toolkit for building Actors

- Crawlee for Python - web scraping and browser automation library

- Input schema - define and easily validate a schema for your Actor's input

- Dataset - store structured data where each object stored has the same attributes

- Beautiful Soup - a library for pulling data out of HTML and XML files

- Proxy configuration - rotate IP addresses to prevent blocking
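The input schema declares the startUrls and maxPagesPerCrawl fields that the crawler reads. To show roughly what kind of checks such a schema expresses, here is a hand-rolled validator in plain Python. It is a sketch under assumptions, not the Apify SDK or the template's actual schema. It assumes that startUrls arrives as a list of {"url": ...} objects. The names parse_input, CrawlerInput and DEFAULT_MAX_PAGES, along with the default value of 10, are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical default used when maxPagesPerCrawl is absent; the real
# input schema defines its own default.
DEFAULT_MAX_PAGES = 10


@dataclass
class CrawlerInput:
    start_urls: list[str]
    max_pages_per_crawl: int


def parse_input(raw: dict) -> CrawlerInput:
    """Check a raw input dict for the two fields the crawler reads."""
    entries = raw.get("startUrls")
    if not isinstance(entries, list) or not entries:
        raise ValueError("startUrls must be a non-empty list")

    urls: list[str] = []
    for entry in entries:
        # Assumed shape: each start URL is an object like {"url": "https://..."}.
        url = entry.get("url") if isinstance(entry, dict) else None
        if not isinstance(url, str):
            raise ValueError(f"start URL entry has no 'url' string: {entry!r}")
        parsed = urlparse(url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            raise ValueError(f"not an absolute http(s) URL: {url!r}")
        urls.append(url)

    max_pages = raw.get("maxPagesPerCrawl", DEFAULT_MAX_PAGES)
    # bool is a subclass of int, so reject it explicitly.
    if isinstance(max_pages, bool) or not isinstance(max_pages, int) or max_pages < 1:
        raise ValueError("maxPagesPerCrawl must be a positive integer")

    return CrawlerInput(start_urls=urls, max_pages_per_crawl=max_pages)


config = parse_input({"startUrls": [{"url": "https://example.com"}], "maxPagesPerCrawl": 5})
print(config)
```

In a real Actor, the input schema itself is meant to do this validation, so a hand-written check like this is mainly useful for seeing what the two fields have to contain.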

Resources

- Quick Start guide for building your first Actor

- Video introduction to the Python SDK

- Webinar introducing Crawlee for Python

- Apify Python SDK documentation

- Crawlee for Python documentation

- Python tutorials in Academy

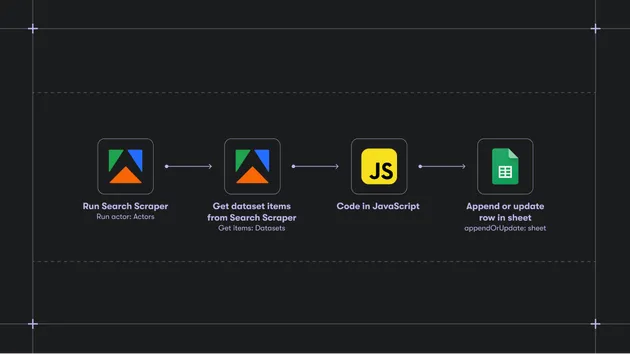

- Integration with Zapier, Make, Google Drive and others

- Video guide on getting data using Apify API

Creating Actors with templates

Getting started

For complete information, see this article. In short, you will:

- Build the Actor

- Run the Actor

Pull the Actor for local development

If you would like to develop locally, you can pull the existing Actor from the Apify Console using the Apify CLI:

- Install apify-cli

Using Homebrew:

$ brew install apify-cli

Using NPM:

$ npm -g install apify-cli

- Pull the Actor by its unique <ActorId>, which is one of the following:

  - the unique name of the Actor to pull (e.g. "apify/hello-world")
  - the ID of the Actor to pull (e.g. "E2jjCZBezvAZnX8Rb")

You can find both by clicking the Actor title at the top of the page, which opens a modal containing the Actor's unique name and ID.

This command copies the Actor into the current directory on your local machine:

$ apify pull <ActorId>

Documentation reference

To learn more about Apify and Actors, take a look at the following resources: