Fast, reliable data for ChatGPT and LLMs

Extract text content from the web to feed your vector databases, fine-tune, or train your large language models (LLMs) such as ChatGPT or LLaMA.


Generative AI is powered by web scraping

Data is the fuel for AI, and the web is the largest source of data ever created. Today's most popular language models, such as ChatGPT and LLaMA, were trained largely on data scraped from the web. Apify gives you the same superpowers and brings the vast amount of data on the web to your fingertips.

Load vector databases

Extract documents from the web and load them into vector databases for querying and prompt generation.
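Before documents are embedded and loaded into a vector database, they are usually split into smaller, overlapping chunks so each vector covers a focused piece of text. The sketch below is a minimal, hypothetical illustration of that step. It assumes chunk size is measured in whitespace-separated words. Real pipelines often count model tokens instead, and the function name and default sizes here are illustrative, not part of any Apify API.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into word-based chunks that overlap by `overlap` words.

    Hypothetical helper: sizes are in words, not model tokens.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    words = text.split()
    step = chunk_size - overlap  # how far each new chunk advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a chunk has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


text = " ".join(str(i) for i in range(10))
print(chunk_text(text, chunk_size=4, overlap=2))
# ['0 1 2 3', '2 3 4 5', '4 5 6 7', '6 7 8 9']
```

The overlap means a sentence cut at a chunk boundary still appears whole in at least one neighbouring chunk. The cost is some duplicated storage.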

Train new models

Extract text and images from the web to generate training datasets for your new AI models.

Fine-tune models

Use domain-specific data extracted from the web to fine-tune models through the OpenAI fine-tuning API or other providers.
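OpenAI's fine-tuning API for chat models takes training data as JSONL, with one `{"messages": [...]}` object per line. The sketch below assumes you have already turned scraped pages into question/answer pairs, which is a hypothetical preprocessing step not shown here. It converts those pairs into that format. The system prompt and sample pair are placeholders.

```python
import json


def to_finetune_jsonl(pairs, system_prompt):
    """Convert (question, answer) pairs into chat-format JSONL lines.

    `pairs` is a hypothetical list of tuples derived from scraped content.
    """
    lines = []
    for question, answer in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n" if lines else ""


pairs = [("How do I start a crawl?", "Open the Actor and click Start.")]
print(to_finetune_jsonl(pairs, "You answer questions about our product."))
```

Write the returned string to a `.jsonl` file before uploading it. Check the current provider documentation for size limits and any extra required fields.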

LangChain and LlamaIndex integration

Load scraped datasets directly into LangChain or LlamaIndex vector indexes. Build AI chatbots and other apps that query text data crawled from documentation sites, knowledge bases, blog posts, and other online sources.

Ingest entire websites automatically...

Gather your customers' documentation, knowledge bases, help centers, forums, blog posts, PDFs, and other sources of information to train or prompt your LLMs. Integrate Apify into your product and let your customers upload their content in minutes.

...and use that data to power chatbots

Customer service and support is a major area where generative AI, and large language models (LLMs) in particular, are starting to unlock significant customer value. Read about how Intercom's new AI chatbot is already using web scraping to answer customer queries.

Expand LLM capabilities with third-party data

Enrich your LLM with your own data or data from the web to deliver accurate responses. Unlock the power of real-time information so your chatbot stays up to date and relevant.

Ask questions about brand and sentiment

Provide your chatbot with data from external sources like forums, review sites, or social media so it can give you real-time insights, sentiment analysis, and actionable feedback about your brand.

Improve the accuracy of chatbot responses

Make your chatbot more intelligent and accurate by integrating your own and external online sources. Impress users with precise, reliable, and personalized interactions.

Apify Adviser GPT

Find the right Actor to extract data from the web or get help with the Apify scraping platform. Our Adviser GPT has been trained to assist you with any questions you might have about using Apify or Actors.

Generative AI refers to deep learning models that generate text, images, audio, video, code, and other data types in response to text prompts. Examples of generative AI models include ChatGPT, Midjourney, and Bard.

AI is a field of computer science that aims to create intelligent machines or systems that can perform tasks that typically require human intelligence. Generative AI is a subfield of AI focused on creating systems capable of generating new content, such as images, text, music, or video.

Large language models (LLMs) are a form of generative AI. They are typically transformer models that use deep learning to understand and generate text in a human-like fashion. Examples of LLMs include ChatGPT, LLaMA, LaMDA, and Bard.

Data ingestion is the process of collecting, processing, and preparing data for analysis or machine learning. In the context of LLMs, data ingestion involves collecting text data (web scraping), preprocessing it (cleaning, normalization, tokenization), and preparing it for training (feature engineering).
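To make the preprocessing stage concrete, the sketch below shows cleaning, normalization, and tokenization in their simplest form. It has three assumptions you should know about. It uses plain regular expressions rather than a full HTML parser. It splits on word characters, whereas LLMs actually use subword tokenizers such as BPE. The function name is hypothetical.

```python
import html
import re
import unicodedata


def preprocess(raw: str) -> list[str]:
    """Clean, normalize, and word-tokenize a scraped text snippet (simplified)."""
    # Cleaning: decode HTML entities, then drop leftover tags and bare URLs.
    text = html.unescape(raw)
    text = re.sub(r"<[^>]+>", " ", text)
    text = re.sub(r"https?://\S+", " ", text)
    # Normalization: NFKC folds compatibility characters (e.g. non-breaking
    # spaces become ordinary spaces), then lowercase everything.
    text = unicodedata.normalize("NFKC", text).lower()
    # Tokenization: word-level split on Unicode word characters.
    return re.findall(r"\w+", text)


print(preprocess("<p>Hello,&nbsp;World!</p> Visit https://example.com now"))
# ['hello', 'world', 'visit', 'now']
```

In a real LLM pipeline you would usually keep the cleaned, normalized text as-is. The model's own tokenizer then runs at training or inference time.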

Web scraping lets you collect reliable, up-to-date information that can be used to feed, fine-tune, or train large language models (LLMs), or to provide context in prompts for ChatGPT. The model can then answer questions based on your own or your customers' websites and content.

Vector databases are designed to handle the unique structure of vector embeddings, which are dense vectors of numbers that represent the meaning of text. They are used in machine learning to index these vectors and retrieve the ones most similar to a query, typically by comparing them with a distance or similarity measure such as cosine similarity.
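The core operation of a vector database is "find the stored vectors most similar to this one." The brute-force sketch below shows that idea with cosine similarity. The three-dimensional vectors and document IDs are made up for illustration. Real embeddings have hundreds or thousands of dimensions, and production vector databases use approximate nearest-neighbour indexes rather than scanning every vector.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the k (doc_id, score) pairs most similar to `query`, best first."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical toy index: document ID -> embedding.
index = {
    "pricing": [0.9, 0.1, 0.0],
    "docs": [0.1, 0.9, 0.2],
    "blog": [0.0, 0.2, 0.9],
}
print(top_k([0.8, 0.2, 0.0], index, k=2))  # "pricing" ranks first, then "docs"
```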

LangChain is an open-source framework for developing applications powered by language models. It connects the AI models you want to use to outside data sources and tools. It also lets you chain steps together, such as formatting a prompt, calling the model, and parsing its output, so the model has what it needs to produce the answers or perform the tasks you require.
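The idea of a "chain" is that each step's output becomes the next step's input. The plain-Python sketch below illustrates that concept only. It does not use LangChain's API (LangChain's own expression language composes steps with the `|` operator). `fake_llm` is a hypothetical stand-in for a real model call.

```python
from functools import reduce
from typing import Callable


def chain(*steps: Callable) -> Callable:
    """Compose steps left to right: the output of one step feeds the next."""
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)


def build_prompt(inputs: dict) -> str:
    # Step 1: turn structured inputs into a prompt string.
    return f"Context: {inputs['context']}\nQuestion: {inputs['question']}\nAnswer:"


def fake_llm(prompt: str) -> str:
    # Step 2 (hypothetical stand-in for a model): echo the context line, padded
    # with whitespace to mimic untidy model output.
    first_line = prompt.splitlines()[0]
    return "  " + first_line.removeprefix("Context: ") + "  "


# Step 3: a trivial output parser that trims whitespace.
qa = chain(build_prompt, fake_llm, str.strip)

print(qa({"context": "Apify stores results in datasets.",
          "question": "Where are results stored?"}))
# Apify stores results in datasets.
```

Swapping `fake_llm` for a real client call would leave the other steps untouched. That separation is the main benefit of structuring an app as a chain.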

Pinecone is a popular vector database that lets you provide long-term memory for high-performance AI applications. It is used for semantic search, similarity search for images and audio, recommendation systems, record matching, anomaly detection, and natural language processing.

- Data collection: use a tool like Apify's Website Content Crawler to scrape web data. Configure the crawler settings like start URLs, crawler type, HTML processing, and data cleaning to tailor the data to what you need.

- Data processing: clean and process the scraped data by removing unnecessary HTML elements and duplicates, then transform it into a usable format (e.g., JSON or CSV).

- Integration and training: integrate the cleaned and processed data with tools like LangChain or Pinecone and feed it into your LLM to fine-tune or train the model according to your specific requirements. Check out this full step-by-step tutorial on how to collect data for LLMs with web scraping.
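The data processing step above can be sketched with the Python standard library alone. This is a minimal illustration under stated assumptions. Pages arrive as raw HTML in hypothetical `{"url", "html"}` records. "Unnecessary" elements are taken to be `<script>`, `<style>`, `<nav>`, and `<footer>`. Duplicates are detected by hashing the extracted text. Website Content Crawler can handle much of this cleaning itself through its HTML processing settings, so treat this as a picture of what the step involves rather than a required script.

```python
import hashlib
import json
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping content inside boilerplate elements."""

    SKIP = {"script", "style", "nav", "footer"}

    def __init__(self):
        super().__init__()  # convert_charrefs=True decodes entities like &amp;
        self.parts = []
        self.skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.skip_depth == 0 and data.strip():
            self.parts.append(data.strip())


def html_to_text(page_html):
    parser = TextExtractor()
    parser.feed(page_html)
    parser.close()
    return " ".join(parser.parts)


def process_pages(pages):
    """Return JSONL with one {"url", "text"} record per unique, non-empty page."""
    seen = set()
    records = []
    for page in pages:
        text = html_to_text(page["html"])
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if not text or digest in seen:
            continue  # skip empty pages and exact-duplicate content
        seen.add(digest)
        records.append(json.dumps({"url": page["url"], "text": text}))
    return "\n".join(records)
```

Exact-hash deduplication only catches identical text. Near-duplicate pages, such as ones differing only by a date stamp, need fuzzier techniques.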

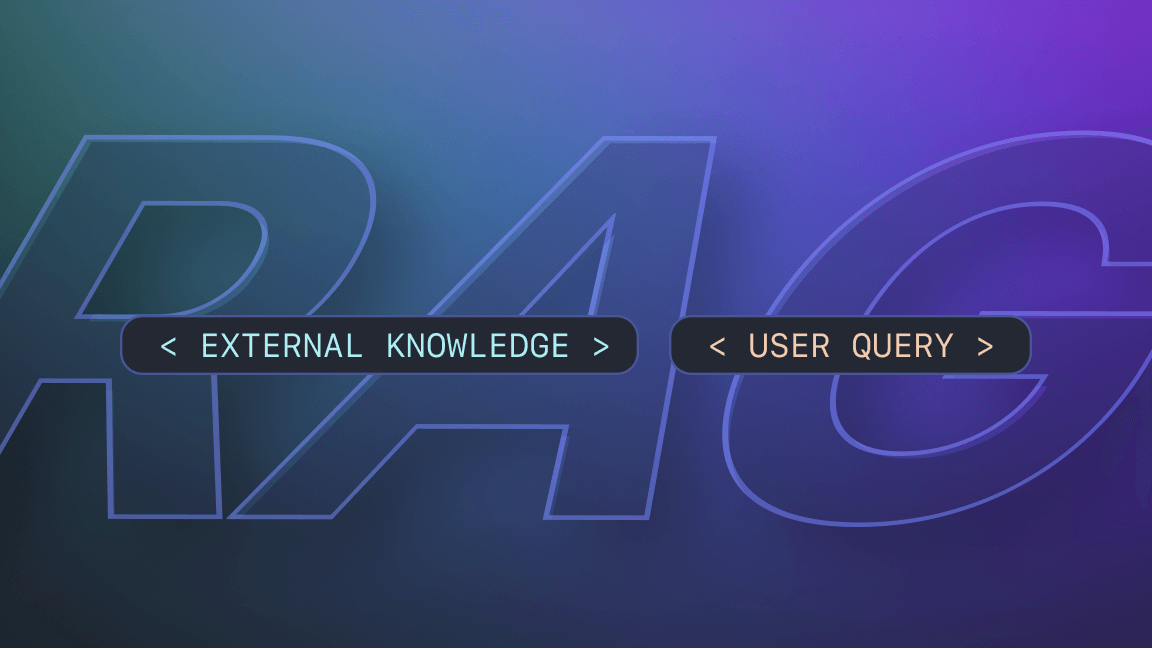

Retrieval-augmented generation (RAG) is an AI framework and technique used in natural language processing that combines elements of both retrieval-based and generation-based approaches to enhance the quality and relevance of generated text. By supplying a model with relevant retrieved documents at answer time, it improves the output of generative AI systems.

RAG is a popular method for creating chatbots because it combines retrieval-based and generative models. Retrieval-based models search a database for the most relevant answer, while generative models create answers on the fly. Combining these two capabilities makes RAG chatbots adaptable and helps reduce hallucinations.
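A RAG chatbot works in two stages. First it retrieves relevant passages, then it generates an answer from a prompt that includes them. The sketch below shows both stages up to the point where the prompt would be sent to a model. As a simplifying assumption, it ranks documents by word overlap instead of embedding similarity, which is what a vector database would normally provide. The documents, prompt wording, and function names are all hypothetical.

```python
import re


def tokenize(text):
    """Lowercased set of words, used for a crude relevance score."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question, documents, k=2):
    """Return up to k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(d)]  # drop zero-overlap docs


def build_rag_prompt(question, documents, k=2):
    """Assemble a grounded prompt; the generation call itself is not shown."""
    context = retrieve(question, documents, k)
    context_block = "\n".join(f"- {c}" for c in context) or "- (no relevant context found)"
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}\nAnswer:"
    )


docs = [
    "Apify Actors run in the cloud.",
    "Datasets store scraped results as JSON.",
    "Our office is in Prague.",
]
print(build_rag_prompt("Where are scraped results stored?", docs, k=1))
```

Instructing the model to answer only from the supplied context is what lets retrieval reduce hallucinations. The model is steered toward the retrieved facts instead of its own recall.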