JSON to TOON Converter – Cut LLM Token Costs by Up to 40%

Pricing

$1.50 / 1,000 results

Convert JSON to TOON and find out whether it really reduces tokens (often by up to ~40%). The actor analyzes your data structure, compares JSON vs TOON token usage, and tells you where TOON will cut LLM running costs and where you should keep using JSON.

Developer: Khan

Last modified: 3 months ago

JSON to TOON Converter – LLM Token Saver

This Actor is a diagnostic tool for anyone thinking about adopting TOON instead of JSON in their LLM pipelines.

It doesn’t just rewrite your data into TOON; it also measures real token usage for JSON vs TOON, inspects how tabular and uniform your arrays are, and then returns a clear, per-source recommendation.

Use these insights to decide where TOON can genuinely help you cut LLM running costs and fit more data into your context window, and where it’s still smarter (and cheaper) to keep sending plain JSON.

This actor:

- Accepts a JSON object or one or more JSON URLs

- Encodes the data into TOON format

- Compares JSON vs TOON token usage

- Analyzes how “tabular” and uniform your arrays are

- Returns a clear recommendation: use TOON or stick to JSON

You can then decide, with numbers, whether migrating that payload to TOON is worth the LLM cost and complexity.

What is TOON?

TOON (Token-Oriented Object Notation) is a compact, human-readable format that encodes the same data model as JSON (objects, arrays, primitives) but is optimized for LLM prompts.

Key ideas:

- Token-efficient: reduces repeated quotes, braces, and field names

- Human-readable: indentation-based, YAML-ish syntax

- Tabular arrays: declare keys once, then stream rows

- Drop-in JSON alternative: no loss of information, just a different encoding

It shines on uniform, row-like data such as events, logs, users, orders, analytics records, and database exports.
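To make “declare keys once, then stream rows” concrete, here is a minimal Python sketch of TOON’s tabular array form: a header such as `users[2]{id,name,role}:` followed by one comma-separated row per item. It is not the actor’s encoder or the reference TOON implementation; `encode_tabular` is a hypothetical name, and the sketch only handles flat objects with identical keys, using simplified quoting (no nested values, alternative delimiters, or full escaping rules).

```python
import json


def _looks_like_number(text):
    """True if a string would be read back as a number, so it needs quotes."""
    try:
        float(text)
        return True
    except ValueError:
        return False


def _format_cell(value):
    """Render a primitive as a TOON-style cell (simplified quoting rules)."""
    if value is None:
        return "null"
    if isinstance(value, bool):  # check bool before int: bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, (int, float)):
        return str(value)
    text = str(value)
    needs_quotes = (
        text == ""
        or text != text.strip()
        or any(ch in text for ch in ',:"\n')
        or text in ("true", "false", "null")
        or _looks_like_number(text)
    )
    return json.dumps(text) if needs_quotes else text


def encode_tabular(name, rows):
    """Encode a list of flat dicts with identical keys as a TOON tabular array."""
    if not rows:
        return f"{name}[0]:"
    fields = list(rows[0])
    if any(list(row) != fields for row in rows):
        raise ValueError("all rows must have the same keys in the same order")
    lines = [f"{name}[{len(rows)}]{{{','.join(fields)}}}:"]  # header declares keys once
    for row in rows:
        lines.append("  " + ",".join(_format_cell(row[f]) for f in fields))  # one row per item
    return "\n".join(lines)


users = [
    {"id": 1, "name": "Alice", "role": "admin"},
    {"id": 2, "name": "Bob", "role": "user"},
]
print(encode_tabular("users", users))
# users[2]{id,name,role}:
#   1,Alice,admin
#   2,Bob,user
```

The field names appear once in the header instead of once per object, and the per-item braces and quotes disappear; that is where the savings on uniform arrays come from.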

Why use this actor?

TOON can reduce tokens by 30–60% on the right kind of data, but on other shapes it can be neutral or even worse.

This actor helps you:

- Check real token impact for a given dataset (not just theory)

- Understand whether your arrays are uniform enough for TOON to shine

- Decide automatically: send TOON or JSON for each payload

- Explore TOON quickly without wiring up your own tokenizer and encoder

You can integrate it into your pipeline to:

- Pre-screen candidate datasets before a migration to TOON

- Build a “smart encoder” that only switches to TOON when recommended

- Quantify potential LLM cost savings for analytics/ops stakeholders
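For the cost-quantification use case, the arithmetic is simple: multiply the per-request token savings by request volume and your model’s input-token price. The sketch below assumes a flat price per million input tokens; the function name `monthly_savings_usd`, the 100,000 requests per month, and the $1.00 price are illustrative placeholders, not figures from this actor or any provider. The 951 saved tokens come from the barley.json row of the Token Comparison example.

```python
def monthly_savings_usd(saved_tokens_per_request, requests_per_month, usd_per_million_tokens):
    """Estimate monthly input-token spend saved by sending TOON instead of JSON.

    A negative result means TOON would cost more than JSON for this payload.
    """
    saved_tokens = saved_tokens_per_request * requests_per_month
    return saved_tokens / 1_000_000 * usd_per_million_tokens


# Hypothetical volume and price; 951 saved tokens per request from the barley.json example.
print(round(monthly_savings_usd(951, 100_000, 1.00), 2))  # 95.1
```

Feeding in a negative savedTokens value (such as the -967 for the GitHub events example) yields a negative number, i.e. the extra monthly cost of switching that payload to TOON.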

Inputs

You can provide data in three ways:

jsonData (object)

- Title: JSON Data

- Type: object

- Description: Direct JSON object to be converted.

- Editor: JSON

jsonUrl (string)

- Title: Single JSON URL

- Type: string

- Description: A single URL that returns JSON.

- Use this for a quick, one-off JSON endpoint.

jsonUrls (array of strings)

- Title: Multiple JSON URLs

- Type: array

- Description: Multiple URLs to fetch and convert.

- Each URL becomes a separate row in the output dataset.

Example:

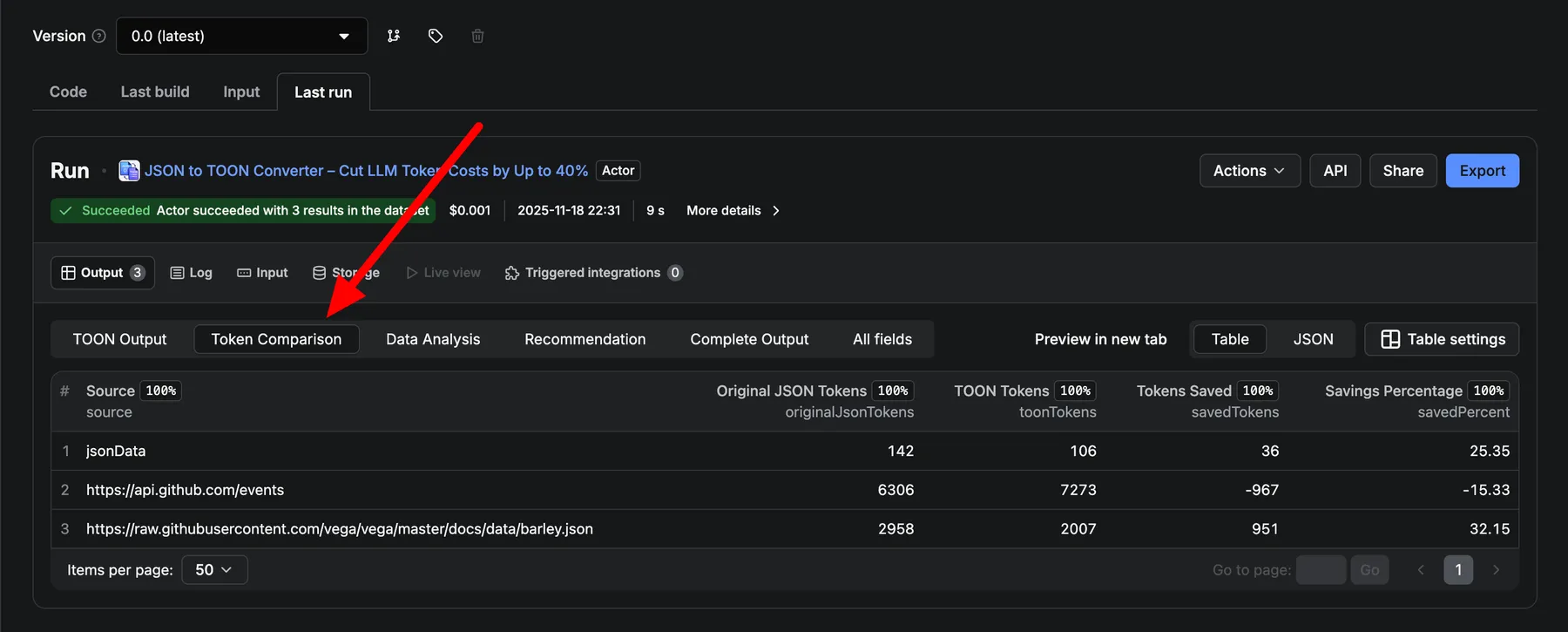
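If you start the actor from your own code, the run input is a JSON object containing one or more of the three fields above. The helper below is a hypothetical sketch (not part of the actor) that assembles that object and rejects empty input; it only builds the payload, so hand the result to whichever Apify client or API call you already use. It assumes the fields can be combined in a single run, which the mixed sources in the output tables suggest but this README does not state outright. The nested `users` object is made-up sample data; the URL is the GitHub events endpoint used in the examples.

```python
import json


def build_run_input(json_data=None, json_url=None, json_urls=None):
    """Assemble the actor input from whichever sources are provided."""
    run_input = {}
    if json_data is not None:
        if not isinstance(json_data, dict):
            raise TypeError("jsonData must be a JSON object (dict)")
        run_input["jsonData"] = json_data
    if json_url:
        run_input["jsonUrl"] = json_url
    if json_urls:
        run_input["jsonUrls"] = list(json_urls)  # each URL becomes its own output row
    if not run_input:
        raise ValueError("provide jsonData, jsonUrl, or jsonUrls")
    return run_input


payload = build_run_input(
    json_data={"users": [{"id": 1, "name": "Alice"}, {"id": 2, "name": "Bob"}]},
    json_url="https://api.github.com/events",
)
print(json.dumps(payload, indent=2))
```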

Outputs & Apify Console tabs

Each input source (field or URL) produces one item in the default dataset with rich fields that Apify’s UI groups into tabs:

1. TOON Output

Columns:

- source – where this data came from, e.g. Input field "jsonData" or URL: https://api.github.com/events

- success – true/false

- toon – TOON-encoded string

2. Token Comparison

Columns:

- originalJsonTokens – estimated LLM tokens for the original JSON

- toonTokens – estimated tokens for the TOON version

- savedTokens – originalJsonTokens - toonTokens

- savedPercent – savings percentage vs JSON (can be negative)

Example:

| Source | JSON tokens | TOON tokens | Tokens saved | Savings % |
|---|---|---|---|---|
| jsonData | 142 | 106 | 36 | 25.35 |
| .../events | 6306 | 7273 | -967 | -15.33 |
| .../barley.json | 2958 | 2007 | 951 | 32.15 |

Positive = TOON is cheaper; negative = TOON is more expensive for that sample.

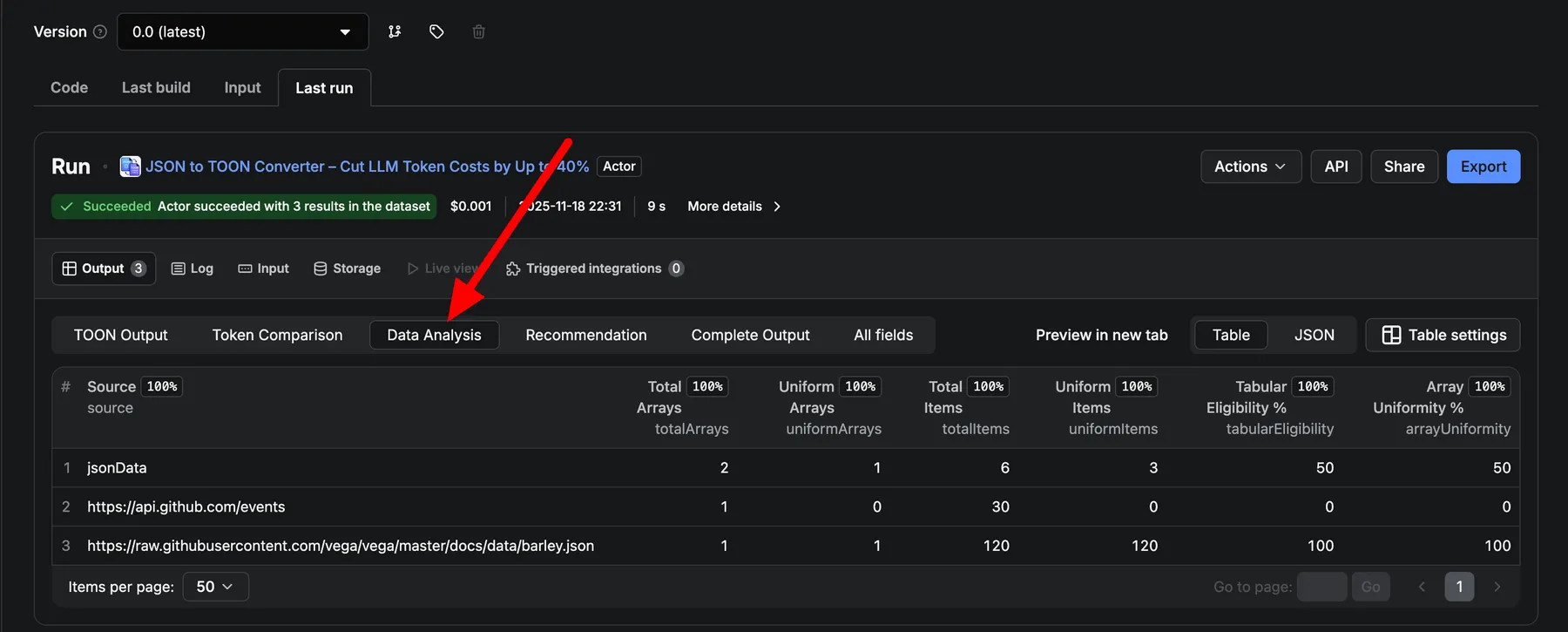
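The derived columns follow directly from the two token counts. The sketch below recomputes savedTokens and savedPercent, assuming savedPercent is savedTokens divided by originalJsonTokens, times 100, rounded to two decimals. That assumption reproduces all three rows of the example table, but the actor’s exact formula and rounding are not documented here; `token_savings` is a hypothetical name.

```python
def token_savings(original_json_tokens, toon_tokens):
    """Return (savedTokens, savedPercent) for one source."""
    saved = original_json_tokens - toon_tokens
    percent = round(saved / original_json_tokens * 100, 2) if original_json_tokens else 0.0
    return saved, percent


# Rows from the example table: (source, JSON tokens, TOON tokens).
for name, json_tokens, toon_tokens in [
    ("jsonData", 142, 106),
    (".../events", 6306, 7273),
    (".../barley.json", 2958, 2007),
]:
    print(name, *token_savings(json_tokens, toon_tokens))
# jsonData 36 25.35
# .../events -967 -15.33
# .../barley.json 951 32.15
```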

3. Data Analysis

Structural stats that describe how “TOON-friendly” the data is:

- totalArrays – how many top-level arrays were analyzed

- uniformArrays – arrays where items share the same shape

- totalItems – total number of items across arrays

- uniformItems – how many are in uniform arrays

- tabularEligibility – % score (0–100) for tabular / row-like data

- arrayUniformity – % score (0–100) for structural uniformity

Example:

| Source | Total arrays | Uniform arrays | Total items | Uniform items | Tabular % | Uniformity % |
|---|---|---|---|---|---|---|
| jsonData | 2 | 1 | 6 | 3 | 50 | 50 |
| .../events | 1 | 0 | 30 | 0 | 0 | 0 |
| .../barley.json | 1 | 1 | 120 | 120 | 100 | 100 |

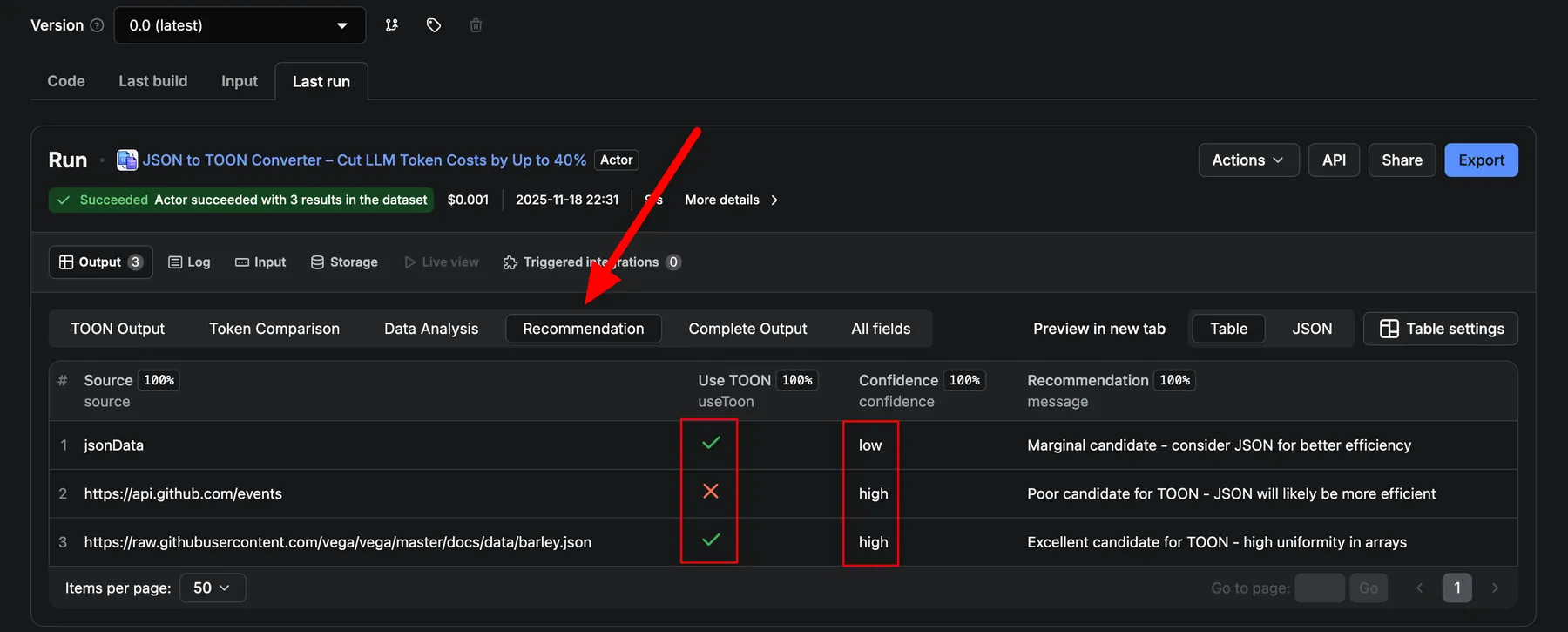
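The actor’s exact scoring rules are not documented here, but the example numbers are consistent with a simple reading: look at each top-level array, call it uniform when every item is an object with the same set of keys, and score arrayUniformity as uniformArrays / totalArrays and tabularEligibility as uniformItems / totalItems (both as percentages). The sketch below implements that reading. Treat the formulas, the notion of “top-level” (the root array, or arrays that are direct values of the root object), and the name `analyze_structure` as assumptions.

```python
def _is_uniform(items):
    """Uniform = non-empty and every item is an object with the same keys."""
    if not items or not all(isinstance(item, dict) for item in items):
        return False
    first_keys = set(items[0])
    return all(set(item) == first_keys for item in items)


def analyze_structure(data):
    """Compute TOON-friendliness stats for the top-level arrays in a JSON value."""
    if isinstance(data, list):
        arrays = [data]
    elif isinstance(data, dict):
        arrays = [value for value in data.values() if isinstance(value, list)]
    else:
        arrays = []

    uniform = [arr for arr in arrays if _is_uniform(arr)]
    total_items = sum(len(arr) for arr in arrays)
    uniform_items = sum(len(arr) for arr in uniform)

    def pct(part, whole):
        return round(part / whole * 100, 2) if whole else 0.0

    return {
        "totalArrays": len(arrays),
        "uniformArrays": len(uniform),
        "totalItems": total_items,
        "uniformItems": uniform_items,
        "tabularEligibility": pct(uniform_items, total_items),
        "arrayUniformity": pct(len(uniform), len(arrays)),
    }


# Hypothetical input: one uniform array of objects, one mixed array.
sample = {
    "users": [{"id": 1, "name": "Alice"}, {"id": 2, "name": "Bob"}, {"id": 3, "name": "Cara"}],
    "tags": ["beta", {"label": "vip"}, 7],
}
print(analyze_structure(sample))
# {'totalArrays': 2, 'uniformArrays': 1, 'totalItems': 6, 'uniformItems': 3, 'tabularEligibility': 50.0, 'arrayUniformity': 50.0}
```

This made-up sample happens to produce the same counts as the jsonData row, but it is not the actor’s example input.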

4. Recommendation

High-level decision for each source:

- useToon – boolean

- confidence – low, medium, or high

- message – human-readable explanation

Example:

| Source | Use TOON | Confidence | Recommendation |
|---|---|---|---|
| jsonData | ✅ | low | Marginal candidate - consider JSON for better efficiency |
| .../events | ❌ | high | Poor candidate for TOON - JSON will likely be more efficient |
| .../barley.json | ✅ | high | Excellent candidate for TOON - high uniformity in arrays |

In short, these recommendations show where TOON can genuinely help you cut LLM running costs and where it’s better to keep sending JSON.

How to interpret the recommendation

A simple rule of thumb:

- ✅ Use TOON when useToon = true and savedPercent is positive, or close to 0 while the structure scores (tabularEligibility, arrayUniformity) are very high.

- ❌ Stick to JSON when useToon = false, or when savedPercent is strongly negative and the structure scores are low.

You can hook this logic into your own tooling to automatically choose the best format per request.
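The rule of thumb above fits in a few lines of code. In this sketch, the thresholds for “close to 0” (within 2 percentage points) and “very high” (80 or more) are arbitrary placeholders to tune on your own data, and `choose_format` is a hypothetical helper, not part of the actor. It assumes useToon sits inside the nested recommendation object, while savedPercent, tabularEligibility, and arrayUniformity are top-level fields, as listed under Complete Output.

```python
def choose_format(item, near_zero=2.0, high_score=80.0):
    """Pick "toon" or "json" for one result item using the rule of thumb.

    near_zero and high_score are illustrative thresholds, not actor defaults.
    """
    use_toon = item["recommendation"]["useToon"]
    saved_percent = item["savedPercent"]
    structure_is_strong = (
        item["tabularEligibility"] >= high_score and item["arrayUniformity"] >= high_score
    )

    if not use_toon:
        return "json"
    if saved_percent > 0:
        return "toon"
    if abs(saved_percent) <= near_zero and structure_is_strong:
        return "toon"
    return "json"  # anything else falls back to JSON, the safer default


# Values from the example tables.
barley = {"savedPercent": 32.15, "tabularEligibility": 100, "arrayUniformity": 100,
          "recommendation": {"useToon": True}}
events = {"savedPercent": -15.33, "tabularEligibility": 0, "arrayUniformity": 0,
          "recommendation": {"useToon": False}}
print(choose_format(barley), choose_format(events))  # toon json
```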

5. Complete Output / All fields

This tab shows the full raw JSON result for each item, including:

- success

- source

- toon

- originalJsonTokens, toonTokens, savedTokens, savedPercent

- totalArrays, uniformArrays, totalItems, uniformItems, tabularEligibility, arrayUniformity

- tokenComparison (nested breakdown)

- recommendation (nested decision object)

This is the best view if you want to consume results from code.

Example full result (simplified)

For a given JSON object, a result might look like:
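Expressed as a Python dictionary, a simplified result for the jsonData source can be assembled from the example tables above. The top-level keys follow the Complete Output field list; the keys inside tokenComparison are an assumption (here they simply mirror the top-level token fields), and the toon string is omitted because the example input is not shown. The last lines sketch one way to consume such an item from code.

```python
result = {
    "success": True,
    "source": 'Input field "jsonData"',
    # "toon": "...",  # TOON-encoded string omitted in this sketch
    "originalJsonTokens": 142,
    "toonTokens": 106,
    "savedTokens": 36,
    "savedPercent": 25.35,
    "totalArrays": 2,
    "uniformArrays": 1,
    "totalItems": 6,
    "uniformItems": 3,
    "tabularEligibility": 50,
    "arrayUniformity": 50,
    # Assumed layout: the nested breakdown mirrors the top-level token fields.
    "tokenComparison": {
        "originalJsonTokens": 142,
        "toonTokens": 106,
        "savedTokens": 36,
        "savedPercent": 25.35,
    },
    "recommendation": {
        "useToon": True,
        "confidence": "low",
        "message": "Marginal candidate - consider JSON for better efficiency",
    },
}

# Consuming the item: sanity-check the arithmetic, then read the decision.
assert result["savedTokens"] == result["originalJsonTokens"] - result["toonTokens"]
rec = result["recommendation"]
print(f'{result["source"]}: useToon={rec["useToon"]} ({rec["confidence"]}) - {rec["message"]}')
```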

Limitations

- Token counts are estimates and may differ slightly from model to model.

- The actor assumes your data is valid JSON and that URLs return JSON.

- TOON is still a relatively new format; make sure your downstream tooling is comfortable handling TOON strings.

- Performance characteristics depend on your downstream model and infrastructure; always benchmark on your own stack for latency-sensitive workloads.

- This actor is one-way (JSON → TOON). It does not decode TOON back to JSON.