Proff.no Lead Scraper (Beta)

Pricing

from $3.00 / 1,000 results

Retrieve leads from proff.no the easy way. This actor retrieves each business’s name, address, email addresses, phone numbers, and social links.

Developer

SLASH

An Apify Actor that:

- Starts from one or more Proff.no bransjesøk (listing) URLs.

- Extracts company detail URLs (`/selskap/...`) from listing pages and follows pagination via `?page=` up to a configurable depth.

- Visits each company detail page and extracts name, categories, phone, address, website and a set of raw emails.

- Validates and normalizes the website URL, then checks its HTTP status (200/3xx vs 404/unavailable, banned).

- Crawls the company website (same registered base domain) for a small set of HTML pages to collect additional emails and social links.

- Optionally crawls social profiles (Facebook, Instagram, LinkedIn) when present, as an extra fallback to find emails.

- Sanitizes and deduplicates emails, filters out tracking / garbage patterns, and prioritizes emails matching the company domain (or common freemail domains).

- Reuses a per-domain website crawl cache so multiple companies on the same domain do not trigger repeated crawls.

- Uses async HTTP with concurrency limits and timeouts to scrape Proff and external sites efficiently while staying reasonably stable.

Optimized for Norwegian Proff listings, but with address heuristics that can also handle Swedish-style postcodes when needed.

ToS & legality: Scraping Proff HTML may violate their Terms of Service. Use responsibly, at low rates, with proxies as needed, and comply with local laws and site policies. This Actor avoids official APIs and parses public HTML only.

What’s new (2025-11-14)

Quality & correctness

- Proff-specific detail extraction: the actor now directly understands the Proff layout:
  - Detail links discovered via `a[href*="/selskap/"]` on listing pages.
  - Pagination resolved from `Side X av Y` text plus the `?page=` parameter.
- Structured address parsing:
  - First tries JSON-LD `PostalAddress` blocks when present.
  - Falls back to microdata and finally heuristic address extraction tuned for Norwegian 4-digit postcodes (with optional Swedish `NNN NN` support).
  - JSON-LD is no longer limited to Sweden-only postcodes.
- Website detection and validation:
  - Uses canonical URL (`<link rel="canonical">`), `og:url`, and JSON-LD `url`/`sameAs` before falling back to “best external link”.
  - Marks each website with a `website_details` status (`ok`, `404`, `unavailable`, `banned`, `n/a`).

Emails & social links

- Layered email collection:
  - Detail page: visible text, `mailto:` links, Proff-style button/slug patterns, HTML entity unescape.
  - Website crawl: same-domain pages prioritized by contact-like paths (`/kontakt`, `/contact`, `/about`, etc.).
  - Social profiles: optionally fetch emails from Facebook, Instagram, LinkedIn pages when no site emails are found.
- Sanitization & filtering:
  - Strict and fallback regexes, length checks, tracking-substring filters (`button-email`, `tracking`, `click`, `census`).
  - Avoids `.jpg`/`.pdf`/`.js` tails, slashes inside email, suspicious tracking locals.
- Domain-aware filtering:
  - When a website is valid:
    - Keeps emails whose domain matches the website base domain (or a subdomain) or belongs to a common freemail provider.
  - When the website is missing/banned/unavailable:
    - Keeps all emails that pass basic validity checks from the detail page and social sources.
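The sanitization rules listed above can be sketched roughly as follows. This is a minimal illustration, not the actor's code: the regex, the length limit, and the function name `sanitize_emails` are assumptions, and only the tracking substrings and file tails come from the list above.

```python
import html
import re

# Assumed pattern; the actor's real strict/fallback regexes are not shown here.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+")
TRACKING_SUBSTRINGS = ("button-email", "tracking", "click", "census")
BAD_TAILS = (".jpg", ".pdf", ".js")
MAX_EMAIL_LENGTH = 254  # assumed length limit


def sanitize_emails(raw_text: str) -> list[str]:
    """Return unique, lower-cased emails that pass the basic filters."""
    text = html.unescape(raw_text)  # turns entities such as &#64; into "@"
    seen: set[str] = set()
    result: list[str] = []
    for match in EMAIL_RE.findall(text):
        email = match.strip(".").lower()
        if len(email) > MAX_EMAIL_LENGTH or "/" in email:
            continue
        if email.endswith(BAD_TAILS):
            continue  # asset names such as logo@2x.jpg
        if any(token in email for token in TRACKING_SUBSTRINGS):
            continue
        if email not in seen:
            seen.add(email)
            result.append(email)
    return result
```

Order is preserved, so emails found earlier (for example on the detail page) stay ahead of later ones.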

Stability & performance

- Bounded website crawling:
  - Same-domain internal links only (by registered base domain).
  - Skips binary / heavy assets (pdf/doc/media/archives).
  - Hard limit on `site_email_max_pages` per root website.
- Per-domain crawl cache:
  - Results for each base domain are cached in memory for the run.
  - Multiple companies under the same domain reuse the same `(emails, socials)` rather than recrawling.
- Concurrency-aware `max_results`:
  - Uses an async lock and shared counters to enforce a strict upper bound on the number of detail pages processed and dataset records pushed.
  - Once `max_results` is reached, workers stop picking up new detail jobs.
- Time-bounded HTTP:
  - Configurable `timeout_seconds` for HTTP operations.
  - `httpx.AsyncClient` with limits on total and keep-alive connections.
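A per-domain crawl cache of this kind could look like the sketch below. `WEBSITE_CRAWL_CACHE` is the name used later in this README; the per-domain lock, the `crawl_with_cache` helper, and the `crawl` callback are assumptions added for illustration.

```python
import asyncio
from typing import Awaitable, Callable

CrawlResult = tuple[list[str], dict[str, str]]  # (emails, socials)

WEBSITE_CRAWL_CACHE: dict[str, CrawlResult] = {}
_domain_locks: dict[str, asyncio.Lock] = {}  # assumed: one lock per base domain


async def crawl_with_cache(
    base_domain: str,
    crawl: Callable[[str], Awaitable[CrawlResult]],
) -> CrawlResult:
    """Run `crawl` at most once per base domain during a run."""
    lock = _domain_locks.setdefault(base_domain, asyncio.Lock())
    async with lock:
        # Concurrent callers for the same domain wait here, then hit the cache.
        if base_domain not in WEBSITE_CRAWL_CACHE:
            WEBSITE_CRAWL_CACHE[base_domain] = await crawl(base_domain)
        return WEBSITE_CRAWL_CACHE[base_domain]
```

The lock matters only when two workers reach the same uncached domain at the same time; without it both would start a crawl before either result is stored.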

Input

Parameters

| Key | Type | Default | Description |
|---|---|---|---|
| `start_urls` | array[object] | See above | List of starting listing URLs, each object with a `url` field. Typically Proff bransjesøk result pages. |
| `max_depth` | integer | 3 | Maximum depth of listing pagination per seed. Depth 0 is the initial listing page; depth 1 corresponds to `?page=2`, and so on. |
| `max_results` | integer | 0 | Global hard cap on the number of detail pages processed & records pushed. 0 means “no cap”. |
| `site_email_max_pages` | integer | 3 | Maximum number of HTML pages to crawl per website when searching for emails and social links. |
| `timeout_seconds` | integer | 30 | Read timeout for HTTP responses (also influences overall HTTP timeout via `httpx.Timeout`). |
| `concurrency` | integer | 5 | Number of worker coroutines fetching from the Proff request queue in parallel. |
| `headers` | object or null | Default headers | Optional HTTP headers override. When null, a sensible Norwegian desktop UA + Accept-Language is used. |
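As an illustration of how these parameters fit together, the snippet below builds an input object in Python and serializes it to JSON. The keys and defaults are taken from the table; the listing URL and the `max_results` value of 50 are example choices only.

```python
import json

# Example actor input; every key comes from the parameter table above.
actor_input = {
    "start_urls": [
        {"url": "https://www.proff.no/bransjesøk?q=barnehage&region=Hele%20Norge"}
    ],
    "max_depth": 3,
    "max_results": 50,          # small exploratory run; 0 would mean "no cap"
    "site_email_max_pages": 3,
    "timeout_seconds": 30,
    "concurrency": 5,
    "headers": None,            # null -> the actor falls back to its default headers
}

# JSON text that can be pasted into the actor's input editor.
payload = json.dumps(actor_input, indent=2, ensure_ascii=False)
```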

Default headers

When you do not provide headers, the actor uses:

You can override these if you need a different UA or language profile, but the defaults are tuned for Norway / Nordic sites.

Output

Each dataset item is a JSON object like:

Field notes

- `source_url`: The company detail URL on Proff that was scraped. This is the primary identifier and is always present.
- `name`: Business name, usually from `<h1>`, `og:title`, or the `<title>` tag.
- `categories`: A comma-separated string of categories/industries extracted from Proff category containers (e.g. `data-qa="categories"` or similar elements).
- `phone`: Primary phone as seen on the Proff detail page:
  - First attempt: `tel:` links.
  - Fallback: `Telefon NNN NN NN` pattern in the page text.
  - Last resort: generic Nordic-ish phone regex on the page.
- `address`: Postal address:
  - Prefers JSON-LD `PostalAddress` if available and valid.
  - Otherwise uses microdata and finally heuristic extraction with Norwegian 4-digit or Swedish-style postcodes and street tokens.
  - Set to `"n/a"` when no plausible address is found.
- `website`: Best guess at the company’s website:
  - Canonical URL, `og:url`, or JSON-LD `url`/`sameAs` when external and not banned.
  - Otherwise, a “best external link” found on the page (excluding social and banned domains).
  - Set to `"n/a"` when no suitable candidate is found or when the site is on the ban list.
- `website_details`: Status of the website check:
  - `"ok"` – HTTP 2xx/3xx.
  - `"404"` – site returns 404.
  - `"unavailable"` – persistent errors / non-OK status.
  - `"banned"` – site is in the ban list.
  - `"n/a"` – no website to check.

-

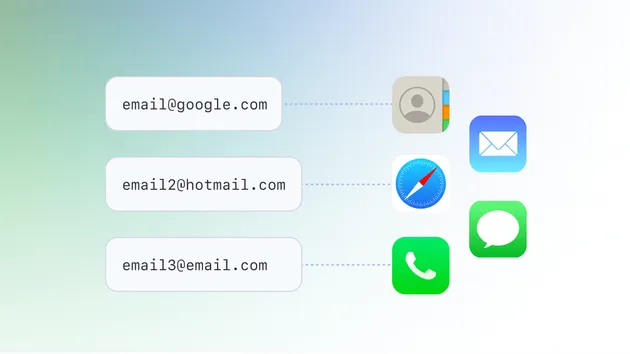

Emails (

email1,email2, …)-

Emails originate from:

- Proff detail page (visible text,

mailto:, slug/button patterns). - Website crawl, same-domain pages.

- Social profiles (Facebook/Instagram/LinkedIn) when detail+website had none.

- Proff detail page (visible text,

-

At least one field will be present (

email1), and if no emails are found at all,email1is set to"n/a". -

email2,email3, etc. are only present when extra distinct emails were discovered.

-

-

Social fields

social_facebook,social_instagram,social_linkedin,social_x,social_youtube,social_tiktok,social_pinterest.- Each field is either a cleaned canonical URL (tracking params removed) or

"n/a"when not found.
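The `email1`, `email2`, … convention described above can be expressed as a small helper. This is only a sketch of the documented behavior; the function name `email_fields` is made up for the example.

```python
def email_fields(emails: list[str]) -> dict[str, str]:
    """Map a list of emails to numbered fields, deduplicating in order."""
    unique = list(dict.fromkeys(emails))  # keeps first occurrence of each email
    if not unique:
        return {"email1": "n/a"}  # email1 is always present
    return {f"email{i}": email for i, email in enumerate(unique, start=1)}
```

When exporting to CSV, keep in mind that records therefore have a varying number of email columns.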

How it works

1. Request queue setup

- Reads `start_urls` from actor input.
- For each entry with a `url`, enqueues a listing request with:
  - `user_data = {'depth': 0, 'type': 'listing'}`
  - `unique_key = url` for deduplication.
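A rough sketch of this seeding step, using plain dictionaries instead of the actor's real request objects. The function name `build_listing_requests` and the dictionary shape are assumptions; `user_data` and `unique_key` mirror the values listed above.

```python
def build_listing_requests(actor_input: dict) -> list[dict]:
    """Turn start_urls entries into listing requests, skipping invalid ones."""
    requests: list[dict] = []
    seen: set[str] = set()
    for entry in actor_input.get("start_urls") or []:
        if not isinstance(entry, dict):
            continue
        url = str(entry.get("url") or "").strip()
        if not url or url in seen:
            continue  # empty or duplicate seed
        seen.add(url)
        requests.append(
            {
                "url": url,
                "unique_key": url,  # used for deduplication
                "user_data": {"depth": 0, "type": "listing"},
            }
        )
    return requests
```

An empty result corresponds to the "no valid `start_urls`" case, where the actor logs an error and exits.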

2. Listing handling

For each listing request:

- Fetches the Proff listing page.
- Parses HTML with BeautifulSoup.
- Extracts detail links:
  - Any `<a>` with `href` containing `"/selskap/"`.
  - Normalized to absolute URLs.
- Applies `max_results` constraint:
  - A shared `detail_enqueued` counter and lock ensure that only up to `max_results` detail pages are ever enqueued.
- Enqueues detail requests for each selected company URL:
  - `user_data = {'depth': depth + 1, 'type': 'detail'}`.
- Handles pagination:
  - Searches the text for `Side X av Y`.
  - Constructs `?page=N` for the next listing page while `X < Y` and `depth < max_depth`.
  - Enqueues additional listing requests with the same `depth`.
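The link discovery and pagination steps can be sketched as below. The actor itself parses with BeautifulSoup; this example swaps in the standard library's `html.parser` so it runs without extra dependencies. The function names and the exact regex are assumptions, and the `max_depth` check is left to the caller.

```python
import re
from html.parser import HTMLParser
from urllib.parse import parse_qs, urlencode, urljoin, urlsplit, urlunsplit


class _LinkCollector(HTMLParser):
    """Collects the href of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def extract_detail_urls(html_text, page_url):
    """Return absolute, de-duplicated URLs whose href contains /selskap/."""
    parser = _LinkCollector()
    parser.feed(html_text)
    urls = []
    for href in parser.hrefs:
        if "/selskap/" in href:
            absolute = urljoin(page_url, href)
            if absolute not in urls:
                urls.append(absolute)
    return urls


PAGE_RE = re.compile(r"Side\s+(\d+)\s+av\s+(\d+)")  # "Side X av Y"


def next_listing_url(page_text, page_url):
    """Return the URL for page X+1, or None when X >= Y or no marker exists."""
    match = PAGE_RE.search(page_text)
    if not match:
        return None
    current, total = int(match.group(1)), int(match.group(2))
    if current >= total:
        return None
    parts = urlsplit(page_url)
    query = parse_qs(parts.query, keep_blank_values=True)
    query["page"] = [str(current + 1)]
    return urlunsplit(parts._replace(query=urlencode(query, doseq=True)))
```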

3. Detail handling

For each detail request:

- Fetches the company detail page.
- Extracts:
  - Name from `h1`, Open Graph title, or `<title>`.
  - Categories from Proff-specific category containers.
  - Phone via `tel:` links and common Norwegian phone patterns.
  - Address via:
    - JSON-LD `PostalAddress`.
    - Microdata `[itemprop="streetAddress"]`, `[itemprop="postalCode"]`, `[itemprop="addressLocality"]`.
    - Proff-specific fallback (`Adresse ...`) and general heuristics.
  - Website via:
    - Canonical URL.
    - `og:url`.
    - JSON-LD `url`/`sameAs`.
    - Best external link excluding Proff and social domains.
  - Raw emails from:
    - `mailto:` links.
    - Page text (clipped to 500k chars).
    - Proff/Hitta-style slug/button patterns.
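To make the first address step concrete, here is a minimal sketch of JSON-LD `PostalAddress` extraction with a postcode check. The regexes, the function name, and the `street, postcode city` output format are assumptions; the microdata and heuristic fallbacks are not shown, so the function returns `None` when JSON-LD yields nothing.

```python
import json
import re

JSONLD_RE = re.compile(
    r'<script[^>]*type=["\']application/ld\+json["\'][^>]*>(.*?)</script>',
    re.IGNORECASE | re.DOTALL,
)
# Norwegian 4-digit postcode, or Swedish-style "NNN NN".
POSTCODE_RE = re.compile(r"^(?:\d{4}|\d{3} \d{2})$")


def _iter_nodes(node):
    """Yield every dict nested anywhere inside a decoded JSON-LD document."""
    if isinstance(node, dict):
        yield node
        for value in node.values():
            yield from _iter_nodes(value)
    elif isinstance(node, list):
        for item in node:
            yield from _iter_nodes(item)


def address_from_jsonld(html_text):
    """Return 'street, postcode city' from the first valid PostalAddress."""
    for block in JSONLD_RE.findall(html_text):
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # malformed JSON-LD is skipped, not fatal
        for node in _iter_nodes(data):
            if node.get("@type") != "PostalAddress":
                continue
            street = str(node.get("streetAddress") or "").strip()
            postcode = str(node.get("postalCode") or "").strip()
            city = str(node.get("addressLocality") or "").strip()
            if street and city and POSTCODE_RE.match(postcode):
                return f"{street}, {postcode} {city}"
    return None  # caller would fall back to microdata / heuristics
```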

4. Website validation & crawl

If a website candidate exists and is not banned:

- Status check:
  - Performs a GET with redirect following.
  - Marks `website_details` as `"ok"`, `"404"`, or `"unavailable"`.
- Site crawl (when status is ok):
  - Computes a base domain from the host (`example.no`).
  - Looks up a per-run cache:
    - If cached: reuse `(emails, socials)` for this domain.
    - If not cached:
      - Starts from the root URL.
      - Visits same-domain HTML pages, skipping binary extensions.
      - Prioritizes URLs containing contact-related keywords.
      - Stops after `site_email_max_pages` pages or when the queue empties.
      - Extracts emails + socials from each page.
      - Stores results in `WEBSITE_CRAWL_CACHE`.
- Email filtering with website context:
  - When website is ok:
    - Keeps only emails that:
      - Match the website base domain or
      - Are from known freemail providers (`gmail.com`, `outlook.com`, etc.).
  - When website is missing/banned/unavailable:
    - Uses all valid detail-page emails, plus any valid emails from socials.
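The email filtering rule at the end of this step can be sketched as follows. The function name and the freemail list are assumptions (only `gmail.com` and `outlook.com` are named above), and the base domain is taken as an input rather than computed.

```python
# Assumed freemail list; the README names only gmail.com and outlook.com.
FREEMAIL_DOMAINS = {"gmail.com", "outlook.com", "hotmail.com", "yahoo.com"}


def filter_emails(emails, website_status, base_domain):
    """Apply domain-aware filtering only when the website check returned 'ok'."""
    if website_status != "ok" or not base_domain:
        return list(emails)  # missing/banned/unavailable: keep all valid emails
    kept = []
    for email in emails:
        domain = email.rsplit("@", 1)[-1].lower()
        same_site = domain == base_domain or domain.endswith("." + base_domain)
        if same_site or domain in FREEMAIL_DOMAINS:
            kept.append(email)
    return kept
```

The leading dot in the `endswith` check keeps `notfirma.no` from matching `firma.no`.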

5. Social extraction

The actor:

- Parses JSON-LD `sameAs` arrays/strings and regular `<a>` tags.
- Detects social domains using an exact/`endswith` match against:
  - `facebook.com`, `instagram.com`, `linkedin.com`, `x.com`, `twitter.com`, `youtube.com`, `tiktok.com`, `pinterest.com`.
- Normalizes URLs by removing tracking parameters (`utm_*`, `fbclid`, etc.).
- Fills each `social_*` field when a matching profile is found; otherwise sets it to `"n/a"`.
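A minimal sketch of the domain matching and tracking-parameter cleanup, built on `urllib.parse`. The helper names are hypothetical, mapping `twitter.com` to `social_x` is an assumption based on the output fields, and `gclid` is an assumed extra tracking parameter.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

SOCIAL_DOMAINS = {
    "facebook.com": "social_facebook",
    "instagram.com": "social_instagram",
    "linkedin.com": "social_linkedin",
    "x.com": "social_x",
    "twitter.com": "social_x",  # assumption: no separate twitter field exists
    "youtube.com": "social_youtube",
    "tiktok.com": "social_tiktok",
    "pinterest.com": "social_pinterest",
}
TRACKING_PARAMS = {"fbclid", "gclid"}  # gclid is an assumed addition


def social_field_for(url):
    """Return the output field for a social URL, or None for other hosts."""
    host = (urlsplit(url).hostname or "").lower()
    for domain, field in SOCIAL_DOMAINS.items():
        # Exact host or a true subdomain; "notfacebook.com" does not match.
        if host == domain or host.endswith("." + domain):
            return field
    return None


def strip_tracking(url):
    """Drop utm_* and known tracking parameters from the query string."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.lower().startswith("utm_") and key.lower() not in TRACKING_PARAMS
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))


def collect_socials(urls):
    """Fill every social_* field, defaulting to 'n/a'; first match wins."""
    socials = {field: "n/a" for field in SOCIAL_DOMAINS.values()}
    for url in urls:
        field = social_field_for(url)
        if field and socials[field] == "n/a":
            socials[field] = strip_tracking(url)
    return socials
```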

6. Email fallback via socials

If no emails were found after:

- Detail page extraction, and

- Website crawl (or website missing/unavailable),

then:

- The actor fetches high-priority social profiles (Facebook, Instagram, LinkedIn).

- Extracts emails from those pages.

- If still none are found, `email1` is set to `"n/a"`.

7. Concurrency & stopping conditions

- Multiple `worker` coroutines pull requests from the queue as long as `stop_crawl` is `False`.
- `max_results` is enforced with a lock:
  - `detail_enqueued` limits how many detail requests can be added.
  - `results_pushed` limits how many records are pushed.
- When `results_pushed` reaches `max_results`, `stop_crawl` is set to `True` and workers stop after finishing the current job.
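One way to picture this bookkeeping is a small limiter object guarded by an `asyncio.Lock`. The counter and flag names follow the text above; the `ResultLimiter` class and its method names are assumptions for illustration, not the actor's real structure.

```python
import asyncio


class ResultLimiter:
    """Shared counters that enforce max_results across worker coroutines."""

    def __init__(self, max_results: int):
        self.max_results = max_results  # 0 means "no cap"
        self.detail_enqueued = 0
        self.results_pushed = 0
        self.stop_crawl = False
        self._lock = asyncio.Lock()

    async def try_reserve_detail(self) -> bool:
        """Reserve a slot before enqueuing a detail request."""
        async with self._lock:
            if self.max_results and self.detail_enqueued >= self.max_results:
                return False
            self.detail_enqueued += 1
            return True

    async def try_push(self) -> bool:
        """Reserve a slot before pushing a record; flips stop_crawl at the cap."""
        async with self._lock:
            if self.max_results and self.results_pushed >= self.max_results:
                self.stop_crawl = True
                return False
            self.results_pushed += 1
            if self.max_results and self.results_pushed >= self.max_results:
                self.stop_crawl = True
            return True
```

Because the check and the increment happen under the same lock, two workers cannot both claim the last remaining slot.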

Debug logging

The actor writes concise, useful logs such as:

- `Enqueuing https://www.proff.no/bransjesøk?...`: Initial listing seeds.
- `Scraping https://www.proff.no/bransjesøk?... (depth=0, type=listing) ...`: Listing processing start.
- `Scraping https://www.proff.no/selskap/... (depth=1, type=detail) ...`: Detail processing start.
- `While crawling for contacts, skipping https://example.com/path: <error>`: Website crawl failures (non-fatal).
- `While crawling social page, skipping https://facebook.com/...: <error>`: Social crawl failures (non-fatal).
- `Worker N finished.`: Each worker’s completion summary.

Increase APIFY_LOG_LEVEL to DEBUG for more granular insights during development or troubleshooting.

Performance tips

- Limit `max_results` when doing exploratory runs to keep datasets manageable and runs short.
- Keep `concurrency` moderate (e.g. 5–10) when crawling many external sites; raise gradually if you have strong infrastructure/proxies.
- Tune `site_email_max_pages`:
  - `1–2` for quick scans (basic contacts).
  - `3–5` for deeper email hunting.
- Use sensible `start_urls`:
  - More focused Proff filters (region/county, query) mean less noise and fewer unnecessary detail pages.
- Consider adding your own HTTP headers if you need a different language or user agent profile.

FAQ

I’m not technical. How am I supposed to use this?

- Find a Proff search page: go to Proff and run a bransjesøk (e.g. “Restauranter og kafeer” in a region/county). Copy the URL from your browser.
- Paste it into `start_urls`: in the actor input, set `"start_urls": [{ "url": "PASTE_YOUR_PROFF_URL_HERE" }]`. You can add multiple listing URLs if you want to cover several regions or queries in one run.
- Decide how many results you want:
  - For a small test, use `"max_results": 50`.
  - For a serious lead list, use 200–500 or leave `0` (no cap, but the run may be long).
- Choose how hard to search for emails:
  - `site_email_max_pages = 1–2` → faster, fewer emails, minimal crawling.
  - `site_email_max_pages = 3–5` → slower, better chance to find contact pages.
- Run the actor: wait for the run to finish, then open the dataset in Apify and export as CSV/JSON/XLSX.

What counts as a “good” start_url?

Any Proff listing page that shows a list of companies with multiple pages when you scroll or paginate is fine, e.g.:

- `https://www.proff.no/bransjesøk?q=Restauranter%20og%20kafeer&region=Østlandet&county=Innlandet`
- `https://www.proff.no/bransjesøk?q=barnehage&region=Hele%20Norge`

Avoid linking directly to a single company detail page as a start_url; the actor handles those too, but you lose the whole “listing to many details” benefit.

Why does it sometimes return fewer records than max_results?

Common reasons:

- The Proff listing(s) simply do not have that many companies matching your search.
- Pagination stops when `Side X av Y` indicates there are no more pages.
- Some detail pages may fail completely (rare), and the actor logs an error and moves on.

The actor guarantees not to exceed max_results, but it cannot invent records that Proff does not provide.

Why do some records only have email1 = "n/a"?

That means:

- No valid emails were found on:
  - The Proff detail page,
  - The company website (if any and reachable),
  - The prioritized social profiles (Facebook/Instagram/LinkedIn).
- Many businesses use contact forms or hide emails behind logins/JS widgets; those are out of reach without more invasive techniques.

You can still use the phone, address, and website fields for outreach or enrichment elsewhere.

Why are some emails freemail (gmail/outlook/etc.)?

When the website is reachable and valid:

- The actor keeps:
  - Emails on the same base domain as the website.
  - Emails on common freemail providers (gmail, outlook, etc.), since many small businesses use them.

This means you will see combinations such as:

- `post@firma.no`
- `navn.firma@gmail.com`

When the website is missing or down, the actor keeps all valid-looking emails from the detail page and social profiles.

Why is the website sometimes "n/a" even though Proff shows something clickable?

Likely causes:

- The Proff “website” link points to a banned domain (e.g. generic info/lookup services) that is intentionally ignored.

- The site consistently returns non-HTML or very broken responses that fail the validation.

- The click leads to something that is detected as social media or share/intent URL, not a real website.

The actor prefers to output "n/a" rather than pretend a non-company site is the official website.

Troubleshooting

Actor exits immediately with no data

- Check that `start_urls` is a non-empty array, each element an object with a non-empty `url` field.
- The actor logs an error and exits if there are no valid `start_urls`.

Timeouts and partial data

- Increase `timeout_seconds` slightly if you see frequent timeouts in logs for slower sites.
- Reduce `site_email_max_pages` and/or `concurrency` to lighten the load.

Too many external-site errors

- Many external sites may block or throttle; this is normal at small scale.

- If you rely heavily on site crawling, consider using a suitable proxy configuration on the Apify platform.

Roadmap / future improvements

- Better Proff layout adaptation if/when Proff updates their DOM structure.

- Company ID extraction from URLs for easier merging with other datasets.

- Optional Proff organization number field (org.nr parsing).

- Multi-country tuning for similar portals (e.g., Swedish company directories).

- Configurable social crawl strategy (which networks to prioritize or exclude).

- Schema validation for output with stricter types.

Supported & planned regions

| Region | Status | Details | Link |

|---|---|---|---|

| Norway | Optimized | Proff.no | — |

| Sweden | Planned | Allabolag.se | — |

Create an issue or contact the author if you’d like a specific directory or country prioritized.

Changelog

- 2025-11-14
  - Reworked actor to target Proff.no instead of Google Maps Local results.
  - Added listing vs detail separation with Proff-specific detail link & pagination logic.
  - Implemented JSON-LD-first address extraction with Norwegian postcode awareness.
  - Hardened social extraction from JSON-LD `sameAs` and `<a>` tags with safe domain matching.
  - Introduced per-domain website crawl cache to avoid redundant crawling.
  - Enforced concurrency-safe `max_results` limits using async locks and shared counters.

Disclaimer & License

This Apify Actor is provided “as is”, without warranty of any kind — express or implied — including but not limited to the warranties of merchantability, fitness for a particular purpose, and non-infringement. Please follow local laws, do not use for malicious purposes and do not use this code to spam.

ToS & legality (Reminder): Scraping Proff HTML may violate their Terms of Service. Use responsibly, at low rates, with proxies if needed, and comply with local laws and site policies. This Actor avoids official APIs and parses public HTML only.

© 2025 SLSH. All rights reserved. Copying or modifying the source code is prohibited.