Spawn Workers

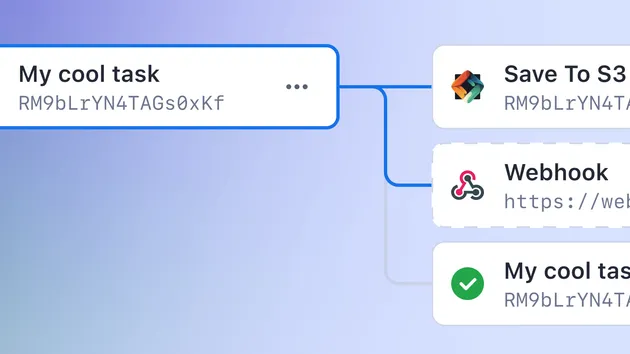

This actor lets you spawn tasks or other actors in parallel on the Apify platform. The workers share a common output dataset, and a RequestQueue-like dataset containing the request URLs is split among them.

Usage

```javascript
const Apify = require("apify");

Apify.main(async () => {
  const input = await Apify.getInput();

  const {
    limit, // every worker receives a "batch"
    offset, // that changes depending on how many were spawned
    inputDatasetId,
    outputDatasetId,
    parentRunId,
    isWorker,
    emptyDataset, // means the inputDatasetId is empty, and you should use another source, like the Key Value store
    ...rest // any other configuration you passed through workerInput
  } = input;

  // don't mix requestList with requestQueue
  // when in worker mode
  const requestList = new Apify.RequestList({
    persistRequestsKey: 'START-URLS',
    sourcesFunction: async () => {
      if (!isWorker) {
        return [
          {
            "url": "https://start-url..."
          }
        ]
      }

      const requestDataset = await Apify.openDataset(inputDatasetId);

      const { items } = await requestDataset.getData({
        offset,
        limit,
      });

      return items;
    }
  });

  await requestList.initialize();

  const requestQueue = isWorker ? undefined : await Apify.openRequestQueue();
  const outputDataset = isWorker ? await Apify.openDataset(outputDatasetId) : undefined;

  const crawler = new Apify.CheerioCrawler({
    requestList,
    requestQueue,
    handlePageFunction: async ({ $, request }) => {
      if (isWorker) {
        // scrape details here
        await outputDataset.pushData({ ...data });
      } else {
        // instead of requestQueue.addRequest, you push the URLs to the dataset
        await Apify.pushData({
          url: $("select stuff").attr("href"),
          userData: {
            label: $("select other stuff").data("rest"),
          },
        });
      }
    },
  });

  await crawler.run();

  if (!isWorker) {
    const { output } = await Apify.call("pocesar/spawn-workers", {
      // if you omit this, the default dataset on the spawn-workers actor will hold all items
      outputDatasetId: "some-named-dataset",
      // use this actor default dataset as input for the workers requests, usually should be this own dataset ID
      inputUrlsDatasetId: Apify.getEnv().defaultDatasetId,
      // the name or ID of your worker actor (the one below)
      workerActorId: Apify.getEnv().actorId,
      // you can use a task instead
      workerTaskId: Apify.getEnv().actorTaskId,
      // Optionally pass input to the actors / tasks
      workerInput: {
        maxConcurrency: 20,
        mode: 1,
        some: "config",
      },
      // Optional worker options
      workerOptions: {
        memoryMbytes: 256,
      },
      // Number of workers
      workerCount: 2,
      // Parent run ID, so you can persist things related to this actor call in a centralized manner
      parentRunId: Apify.getEnv().actorRunId,
    });
  }
});
```
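Because the non-worker run pushes URLs to a dataset instead of a RequestQueue, nothing filters out repeated URLs for you. One possible approach is to keep a set of already-seen URLs in the parent run and only push new ones. The sketch below is an illustration only: `createUrlDeduplicator` is a hypothetical helper, not part of the Apify SDK or of this actor, and it matches URLs by exact string after trimming whitespace.

```javascript
// Hypothetical helper: returns a predicate that is true only the first
// time a given URL string is seen. State lives in memory only, so it is
// lost if the run restarts or migrates.
function createUrlDeduplicator() {
  const seen = new Set();

  return (url) => {
    if (typeof url !== "string") return false; // e.g. a missing href
    const key = url.trim();
    if (key === "" || seen.has(key)) return false;
    seen.add(key);
    return true;
  };
}

const isNew = createUrlDeduplicator();

const urls = [
  "https://example.com/a",
  "https://example.com/b",
  "https://example.com/a", // exact repeat
  " https://example.com/b ", // repeat once trimmed
];

// Keeps only the first occurrence of each URL.
const unique = urls.filter((url) => isNew(url));
console.log(unique);
```

Inside the `else` branch of `handlePageFunction`, the same predicate could guard the `Apify.pushData` call, so that each URL reaches the input dataset at most once per run.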

Motivation

RequestQueue is the best way to process requests across actors, but it offers no way to limit or offset reads: you can only iterate over its contents or add new requests.

A dataset gives you the same functionality (minus the ability to deduplicate the URLs) and can be safely shared and partitioned across many actors at once. Each worker deals with its own subset of URLs, with no overlap.
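To make the partitioning concrete, here is a minimal sketch of how a dataset of N items could be divided into non-overlapping `offset` / `limit` batches, one per worker. This is an assumption about the general technique, not the actor's actual source: `partitionDataset` is a hypothetical function, and the real actor may size or order its batches differently.

```javascript
// Hypothetical helper: splits `totalItems` dataset rows into contiguous,
// non-overlapping { offset, limit } batches for up to `workerCount` workers.
function partitionDataset(totalItems, workerCount) {
  if (!Number.isInteger(totalItems) || totalItems < 0) {
    throw new RangeError("totalItems must be a non-negative integer");
  }
  if (!Number.isInteger(workerCount) || workerCount < 1) {
    throw new RangeError("workerCount must be a positive integer");
  }

  // Round up so every item is covered even when the division is uneven.
  const batchSize = Math.ceil(totalItems / workerCount);
  const batches = [];

  for (let workerId = 0; workerId < workerCount; workerId++) {
    const offset = workerId * batchSize;
    if (offset >= totalItems) break; // no items left for this worker

    batches.push({
      workerId,
      offset,
      // The last batch may be smaller than the others.
      limit: Math.min(batchSize, totalItems - offset),
    });
  }

  return batches;
}

console.log(partitionDataset(10, 3));
// [ { workerId: 0, offset: 0, limit: 4 },
//   { workerId: 1, offset: 4, limit: 4 },
//   { workerId: 2, offset: 8, limit: 2 } ]
```

Each batch maps directly onto the `offset` and `limit` values a worker passes to `getData` in the usage example, which is why no two workers read the same rows.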

Limitations

Don't use the following keys for workerInput as they will be overwritten:

- offset: number

- limit: number

- inputDatasetId: string

- outputDatasetId: string

- workerId: number

- parentRunId: string

- isWorker: boolean

- emptyDataset: boolean
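Since these keys are silently overwritten, it can help to check your `workerInput` before calling the actor. The sketch below is a hypothetical guard, not something the actor provides: `RESERVED_KEYS` simply mirrors the list above, and `splitWorkerInput` is an illustrative name.

```javascript
// Keys listed above as overwritten by spawn-workers.
const RESERVED_KEYS = [
  "offset",
  "limit",
  "inputDatasetId",
  "outputDatasetId",
  "workerId",
  "parentRunId",
  "isWorker",
  "emptyDataset",
];

// Hypothetical helper: separates usable keys from reserved ones so the
// caller can warn about values that would never reach the worker as written.
function splitWorkerInput(workerInput = {}) {
  const safe = {};
  const conflicts = [];

  for (const [key, value] of Object.entries(workerInput)) {
    if (RESERVED_KEYS.includes(key)) {
      conflicts.push(key);
    } else {
      safe[key] = value;
    }
  }

  return { safe, conflicts };
}

const { safe, conflicts } = splitWorkerInput({ maxConcurrency: 20, limit: 5 });

if (conflicts.length > 0) {
  console.warn(`workerInput keys that will be overwritten: ${conflicts.join(", ")}`);
}
console.log(safe); // { maxConcurrency: 20 }
```

Passing `safe` as `workerInput` keeps the call free of keys that would be replaced anyway, and the warning surfaces the mistake early.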

License

Apache 2.0

- Created in Apr 2020

- Modified about 2 years ago

Paulo Cesar