Legacy PhantomJS Crawler

No credit card required

Legacy PhantomJS Crawler

No credit card required

Replacement for the legacy Apify Crawler product with a backward-compatible interface. The actor uses PhantomJS headless browser to recursively crawl websites and extract data from them using a piece of front-end JavaScript code.

Do you want to learn more about this Actor?

Get a demoThis actor implements the legacy Apify Crawler product. It uses the PhantomJS headless browser to recursively crawl websites and extract data from them using front-end JavaScript code.

Note that PhantomJS is no longer being developed by the community

and might be easily detected and blocked by target websites.

Therefore, for new projects, we recommend that you use the Web Scraper

(apify/web-scraper) actor,

which provides similar functionality, but is based on the modern headless Chrome browser.

For more details on how to migrate your crawlers to this actor, please read this blog post.

Compatibility with legacy Apify Crawler

Apify Crawler used to be a core product of Apify, but in April 2019 it was deprecated in favor of the more general Apify Actors product. This actor serves as a replacement of the legacy product and provides equivalent interface and functionality, in order to enable users to seamlessly migrate their crawlers. Note that there are several differences between this actor and legacy Apify Crawler:

- The Cookies persistence setting of Over all crawler runs is only supported when running the actor as a task. When you run the actor directly and use this setting, the actor will fail and print an error to the log.

- In Page function, the

contextobject passed to the function has slightly different properties:- The

statsobject contains only a subset of the original statistics. Seecontextdetails in Page function section. - The

actExecutionIdandactIdproperties are not defined and were replaced byactorRunIdandactorTaskId, respectively.

- The

- The Finish webhook URL and Finish webhook data fields are still supported. However, the POST payload passed to the webhook has a different format. See Finish webhook below for details.

- The actor supports legacy proxy settings fields

proxyType,proxyGroupsandcustomProxies, but their values are not checked. If these settings are invalid, the actor will start normally and might crawl pages with invalid proxy settings, most likely producing invalid results. It is recommended to use the new Proxy configuration (proxyConfiguration) field instead, which is correctly validated before the actor is started. Beware that Custom proxies in the new Proxy configuration no longer support SOCKS5 proxies, and they only accept HTTP proxies. If you need SOCKS5, please contact support@apify.com - The Test URL feature is not supported.

- The crawling results are stored into an Apify dataset instead of the specialized storage for crawling results used by the old Crawler. The dataset supports most of the legacy API query parameters in order to emulate the same results format. However, there might be some small incompatibilities. For details, see Crawling results.

Overview

This actor provides a web crawler for developers that enables the scraping of data from any website using the primary programming language of the web, JavaScript.

In order to extract structured data from a website, you only need two things. First, tell the crawler which pages it should visit (see Start URLs and Pseudo-URLs) and second, define a JavaScript code that will be executed on every web page visited in order to extract the data from it (see Page function). The crawler is a full-featured web browser which loads and interprets JavaScript and the code you provide is simply executed in the context of the pages it visits. This means that writing your data-extraction code is very similar to writing JavaScript code in front-end development, you can even use any client-side libraries such as jQuery or Underscore.js.

Imagine the crawler as a guy sitting in front of a web browser. Let's call him Bob. Bob opens a start URL and waits for the page to load, executes your JavaScript code using a developer console, writes down the result and then right-clicks all links on the web page to open them in new browser tabs. After that, Bob closes the current tab, goes to the next tab and repeats the same action again. Bob is pretty smart and skips pages that he has already visited. When there are no more pages, he is done. And this is where the magic happens. Bob would need about a month to click through a few hundred pages. Apify can do it in a few seconds and makes fewer mistakes than Bob.

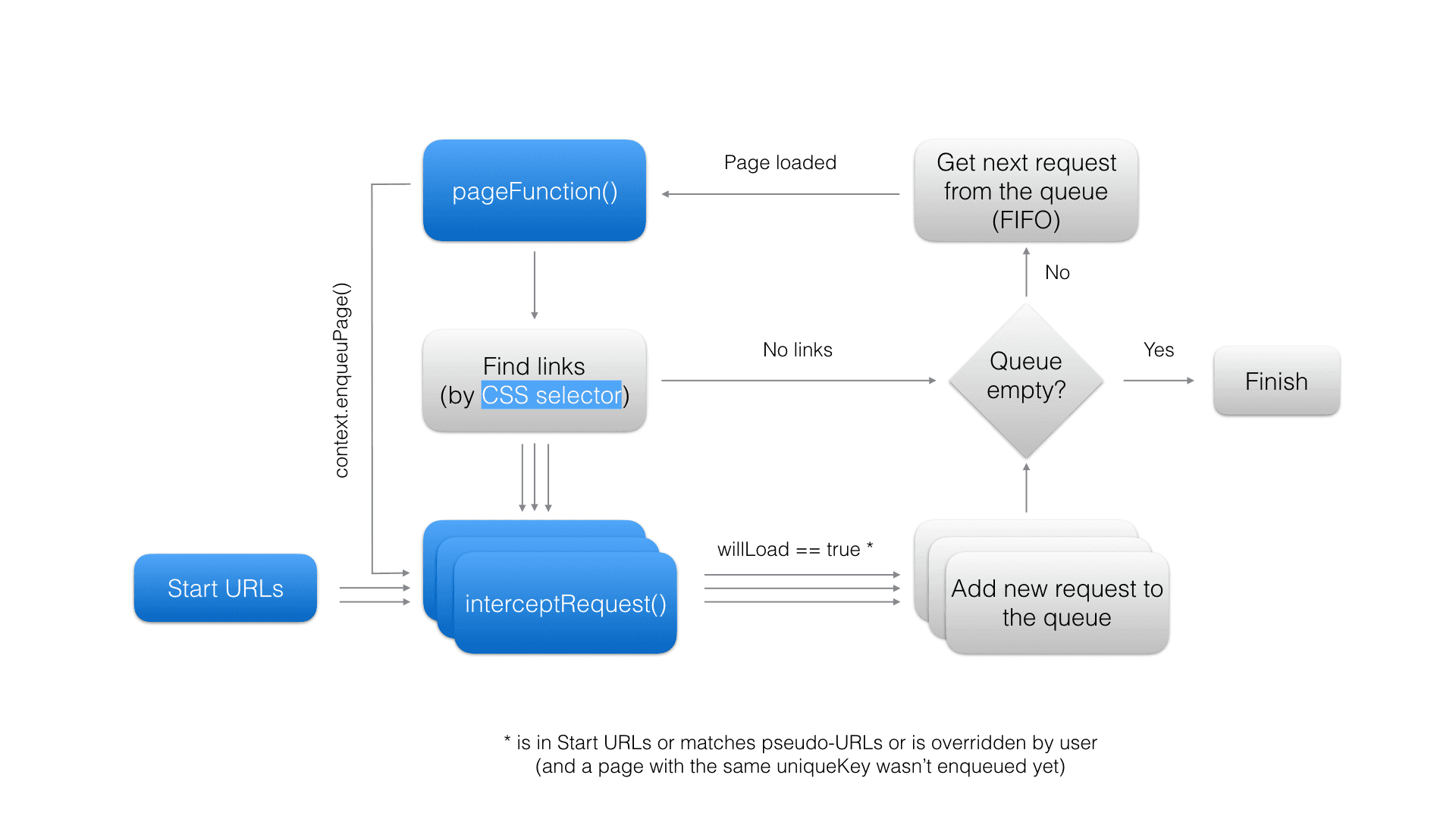

More formally, the crawler repeats the following steps:

- Add each of the Start URLs to the crawling queue.

- Fetch the first URL from the queue and load it in the virtual browser.

- Execute Page function on the loaded page and save its results.

- Find all links from the page using Clickable elements CSS selector. If a link matches any of the Pseudo-URLs and has not yet been enqueued, add it to the queue.

- If there are more items in the queue, go to step 2, otherwise finish.

This process is depicted in the following diagram. Note that blue elements represent settings or operations that can be affected by crawler settings. These settings are described in detail in the following sections.

Note that each crawler configuration setting can also be set using the API. The corresponding property names and types are described in the Input schema section.

Start URLs

The Start URLs (startUrls) field represent the list of URLs of the first pages that the crawler will open.

Optionally, each URL can be associated with a custom label that can be referenced from

your JavaScript code to determine which page is currently open

(see Request object for details).

Each URL must start with either a http:// or https:// protocol prefix!

Note that it is possible to instruct the crawler to load a URL using a HTTP POST request

simply by suffixing it with a [POST] marker, optionally followed by

POST data (e.g. http://www.example.com[POST]<wbr>key1=value1&key2=value2).

By default, POST requests are sent with

the Content-Type: application/x-www-form-urlencoded header.

Maximum label length is 100 characters and maximum URL length is 2000 characters.

Pseudo-URLs

The Pseudo-URLs (crawlPurls) field specifies which pages will be visited by the crawler using

the so-called pseudo-URLs (PURL)

format. PURL is simply a URL with special directives enclosed in [] brackets.

Currently, the only supported directive is [regexp], which defines

a JavaScript-style regular expression to match against the URL.

For example, a PURL http://www.example.com/pages/[(\w|-)*] will match all of the

following URLs:

http://www.example.com/pages/http://www.example.com/pages/my-awesome-pagehttp://www.example.com/pages/something

If either [ or ] is part of the normal query string,

it must be encoded as [\x5B] or [\x5D], respectively. For example,

the following PURL:

http://www.example.com/search?do[\x5B]load[\x5D]=1

will match the URL:

http://www.example.com/search?do[load]=1

Optionally, each PURL can be associated with a custom label that can be referenced from your JavaScript code to determine which page is currently open (see Request object for details).

Note that you don't need to use this setting at all,

because you can completely control which pages the crawler will access, either using the

Intercept request function

or by calling context.enqueuePage() inside the Page function.

Maximum label length is 100 characters and maximum PURL length is 1000 characters.

Clickable elements

The Clickable elements (clickableElementsSelector) field contains a CSS selector used to find links to other web pages.

On each page, the crawler clicks all DOM elements matching this selector

and then monitors whether the page generates a navigation request.

If a navigation request is detected, the crawler checks whether it matches

Pseudo-URLs,

invokes Intercept request function,

cancels the request and then continues clicking the next matching elements.

By default, new crawlers are created with a safe CSS selector:

a:not([rel=nofollow])

In order to reach more pages, you might want to use a wider CSS selector, such as:

a:not([rel=nofollow]), input, button, [onclick]:not([rel=nofollow])

Be careful - clicking certain DOM elements can cause unexpected and potentially harmful side effects. For example, by clicking buttons, you might submit forms, flag comments, etc. In principle, the safest option is to narrow the CSS selector to as few elements as possible, which also makes the crawler run much faster.

Leave this field empty if you do not want the crawler to click any elements and only open

Start URLs

or pages enqueued using enqueuePage().

Page function

The Page function (pageFunction) field contains

a user-provided JavaScript function that is executed in the context of every web page loaded by

the crawler.

Page function is typically used to extract some data from the page, but it can also be used

to perform some non-trivial

operation on the page, e.g. handle AJAX-based pagination.

IMPORTANT: This actor uses the PhantomJS headless web browser, which only supports JavaScript ES5.1 standard (read more in a blog post about PhantomJS 2.0).

The basic page function with no effect has the following signature:

1function pageFunction(context) { 2 return null; 3}

The function can return an arbitrary JavaScript object (including array, string, number, etc.) that can be stringified to JSON;

this value will be saved in the crawling results, as the pageFunctionResult

field of the Request object corresponding to the web page

on which the pageFunction was executed.

The crawling results are stored in the default dataset

associated with the actor run, from where they can be downloaded

in a computer-friendly form (JSON, JSONL, XML or RSS format),

as well as in a human-friendly tabular form (HTML or CSV format).

If the pageFunction's return value is an array,

its elements can be displayed as separate rows in such a table,

to make the results more readable.

The function accepts a single argument called context,

which is an object with the following properties and functions:

| Name | Description |

|---|---|

request | An object holding all the available information about the currently loaded web page. See Request object for details. |

jQuery | A jQuery object, only available if the Inject jQuery setting is enabled. |

underscoreJs | The Underscore.js' _ object, only available if the

Inject Underscore.js

setting is enabled.

|

skipLinks() | If called, the crawler will not follow any links from the current page and will continue with the next page from the queue. This is useful to speed up the crawl by avoiding unnecessary paths. |

skipOutput() | If called, no information about the current page will be saved to the results,

including the page function result itself.

This is useful to reduce the size of the results by skipping unimportant pages.

Note that if the page function throws an exception, the skipOutput()

call is ignored and the page is outputted anyway, so that the user has a chance

to determine whether there was an error

(see Request object's errorInfo

field).

|

willFinishLater() | Tells the crawler that the page function will continue performing some background

operation even after it returns. This is useful

when you want to fetch results from an asynchronous operation,

e.g. an XHR request or a click on some DOM element.

If you use the willFinishLater() function, make sure you also invoke finish()

or the crawler will wait infinitely for the result and eventually timeout

after the period specified in

Page function timeout.

Note that the normal return value of the page function is ignored.

|

finish(result) | Tells the crawler that the page function finished its background operation.

The result parameter receives the result of the page function - this is

a replacement

for the normal return value of the page function that was ignored (see willFinishLater() above).

|

saveSnapshot() | Captures a screenshot of the web page and saves its DOM to an HTML file, which are both then displayed in the user's crawling console. This is especially useful for debugging your page function. |

enqueuePage(request) |

Adds a new page request to the crawling queue, regardless of whether it matches

any of the Pseudo-URLs.

The Note that the manually enqueued page is subject to the same processing as any other page found by the crawler. For example, the Intercept request function function will be called for the new request, and the page will be checked to see whether it has already been visited by the crawler and skipped if so. For backwards compatibility, the function also supports the following signature:enqueuePage(url, method, postData, contentType).

|

saveCookies([cookies]) | Saves the current cookies of the current PhantomJS browser to the actor task's Initial cookies setting. All subsequently started PhantomJS processes will use these cookies. For example, this is useful for storing a login. Optionally, you can pass an array of cookies to set to the browser before saving (in PhantomJS format). Note that by passing an empty array you can unset all cookies. |

customData | Custom user data from crawler settings provided via customData input field. |

stats | An object containing a snapshot of statistics from the current crawl.

It contains the following fields:

pagesCrawled, pagesOutputted

and pagesInQueue.

Note that the statistics are collected before

the current page has been crawled.

|

actorRunId | String containing ID of this actor run. It might be used to control the actor using the API, e.g. to stop it or fetch its results. |

actorTaskId | String containing ID of the actor task, or null if actor is run directly.

The ID might be used to control

the task using the API.

|

Note that any changes made to the context parameter will be ignored.

When implementing the page function, it is the user's responsibility to not break normal

page scripts that might affect the operation of the crawler.

Waiting for dynamic content

Some web pages do not load all their content immediately, but only fetch it in the background

using AJAX,

while pageFunction might be executed before the content has actually been

loaded.

You can wait for dynamic content to load using the following code:

1function pageFunction(context) { 2 var $ = context.jQuery; 3 var startedAt = Date.now(); 4 5 var extractData = function() { 6 // timeout after 10 seconds 7 if( Date.now() - startedAt > 10000 ) { 8 context.finish("Timed out before #my_element was loaded"); 9 return; 10 } 11 12 // if my element still hasn't been loaded, wait a little more 13 if( $('#my_element').length === 0 ) { 14 setTimeout(extractData, 500); 15 return; 16 } 17 18 // refresh page screenshot and HTML for debugging 19 context.saveSnapshot(); 20 21 // save a result 22 context.finish({ 23 value: $('#my_element').text() 24 }); 25 }; 26 27 // tell the crawler that pageFunction will finish asynchronously 28 context.willFinishLater(); 29 30 extractData(); 31}

Intercept request function

The Intercept request function (interceptRequest) field contains

a user-provided JavaScript function that is called whenever

a new URL is about to be added to the crawling queue,

which happens at the following times:

- At the start of crawling for all Start URLs.

- When the crawler looks for links to new pages by clicking elements matching the Clickable elements CSS selector and detects a page navigation request, i.e. a link (GET) or a form submission (POST) that would normally cause the browser to navigate to a new web page.

- Whenever a loaded page tries to navigate to another page, e.g. by setting

window.locationin JavaScript. - When user code invokes

enqueuePage()inside of Page function.

The intercept request function allows you to affect on a low level

how new pages are enqueued by the crawler.

For example, it can be used to ensure that the request is added to the crawling queue even

if it doesn't match

any of the Pseudo-URLs,

or to change the way the crawler determines whether the page has already been visited or not.

Similarly to the Page function,

this function is executed in the context of the originating web page (or in the context

of about:blank page for Start URLs).

IMPORTANT: This actor is using PhantomJS headless web browser, which only supports the JavaScript ES5.1 standard (read more in blog post about PhantomJS 2.0).

The basic intercept request function with no effect has the following signature:

1function interceptRequest(context, newRequest) { 2 return newRequest; 3}

The context is an object with the following properties:

request | An object holding all the available information about the currently loaded web page. See Request object for details. |

jQuery | A jQuery object, only available if the Inject jQuery setting is enabled. |

underscoreJs | An Underscore.js object, only available if the Inject Underscore.js setting is enabled. |

clickedElement | A reference to the DOM object whose clicking initiated the current navigation

request.

The value is null if the navigation request was initiated by other

means,

e.g. using some background JavaScript action.

|

Beware that in rare situations when the page redirects in its JavaScript before it was

completely loaded

by the crawler, the jQuery and underscoreJs objects will be undefined.

The newRequest parameter contains a Request object

corresponding to the new page.

The way the crawler handles the new page navigation request depends

on the return value of the interceptRequest function in the following way:

- If function returns the

newRequestobject unchanged, the default crawler behaviour will apply. - If function returns the

newRequestobject altered, the crawler behavior will be modified, e.g. it will enqueue a page that would not normally be skipped. The following fields can be altered:willLoad,url,method,postData,contentType,uniqueKey,label,interceptRequestDataandqueuePosition(see Request object for details). - If function returns

null, the request will be dropped and a new page will not be enqueued. - If function throws an exception, the default crawler behaviour will apply

and the error will be logged to Request object's

errorInfofield. Note that this is the only way a user can catch and debug such an exception.

Note that any changes made to the context parameter will be ignored

(unlike the newRequest parameter).

When implementing the function, it is the user's responsibility not to break normal page

scripts that might affect the operation of the crawler. You have been warned.

Also note that the function does not resolve HTTP redirects: it only reports the originally

requested URL, but does not open it to find out which URL it eventually redirects to.

Proxy configuration

The Proxy configuration (proxyConfiguration) option enables you to set

proxies that will be used by the crawler in order to prevent its detection by target websites.

You can use both Apify Proxy

as well as custom HTTP or SOCKS5 proxy servers.

The following table lists the available options of the proxy configuration setting:

| None | Crawler will not use any proxies. All web pages will be loaded directly from IP addresses of Apify servers running on Amazon Web Services. |

|---|---|

| Apify Proxy (automatic) | The crawler will load all web pages using Apify Proxy in the automatic mode. In this mode, the proxy uses all proxy groups that are available to the user, and for each new web page it automatically selects the proxy that hasn't been used in the longest time for the specific hostname, in order to reduce the chance of detection by the website. You can view the list of available proxy groups on the Proxy page in the app. |

| Apify Proxy (selected groups) | The crawler will load all web pages using Apify Proxy with specific groups of target proxy servers. |

| Custom proxies |

The crawler will use a custom list of proxy servers.

The proxies must be specified in the Example:

|

Note that the proxy server used to fetch a specific page

is stored to the proxy field of the Request object.

Note that for security reasons, the usernames and passwords are redacted from the proxy URL.

The proxy configuration can be set programmatically when calling the actor using the API

by setting the proxyConfiguration field.

It accepts a JSON object with the following structure:

1{ 2 // Indicates whether to use Apify Proxy or not. 3 "useApifyProxy": Boolean, 4 5 // Array of Apify Proxy groups, only used if "useApifyProxy" is true. 6 // If missing or null, Apify Proxy will use the automatic mode. 7 "apifyProxyGroups": String[], 8 9 // Array of custom proxy URLs, in "scheme://user:password@host:port" format. 10 // If missing or null, custom proxies are not used. 11 "proxyUrls": String[], 12}

Finish webhook

The Finish webhook URL (finishWebhookUrl)

field specifies a custom HTTP endpoint that receives a notification after a run of the actor ends,

regardless of its status, i.e. whether it finished, failed, was aborted, etc.

You can specify a custom string that will be sent with the webhook

using the Finish webhook data (finishWebhookData),

in order to help you identify the actor run.

The provided endpoint is sent a HTTP POST request with Content-Type: application/json; charset=utf-8 header,

and its payload contains a JSON object with the following structure:

1{ 2 "actorId": String, // ID of the actor 3 "runId": String, // ID of the actor run 4 "taskId": String, // ID of the actor task. It might be null if the actor is started directly without a task. 5 "datasetId": String, // ID of the dataset with the crawling results 6 "data": String // Custom data provided in "finishWebhookData" field 7}

You can use the actorId and runId fields to query the actor run status using

the Get run API endpoint.

The datasetId field can be used to download the crawling results

using the Get dataset items

API endpoint - see Crawling results below for more details.

Note that the Finish webhook URL and Finish webhook data are provided merely for backwards compatibility with the legacy Apify Crawler product, and the calls are performed using the Apify platform's standard webhook facility. For details, see Webhooks in documentation.

To test your webhook endpoint, please create a new empty task for this actor, set the Finish webhook URL and run the task.

Cookies

The Initial cookies (cookies) option enables you to specify

a JSON array with cookies that will be used by the crawler on start.

You can export the cookies from your own web browser,

for example using the EditThisCookie plugin.

This setting is typically used to start crawling when logged in to certain websites.

The array might be null or empty, in which case the crawler will start with no cookies.

Note that if the Cookie persistence setting is Over all crawler runs and the actor is started from within a task the cookies array on the task will be overwritten with fresh cookies from the crawler whenever it successfully finishes.

SECURITY NOTE: You should never share cookies or an exported crawler configuration containing cookies with untrusted parties, because they might use it to authenticate themselves to various websites with your credentials.

Example of Initial cookies setting:

1[ 2 { 3 "domain": ".example.com", 4 "expires": "Thu, 01 Jun 2017 16:14:38 GMT", 5 "expiry": 1496333678, 6 "httponly": true, 7 "name": "NAME", 8 "path": "/", 9 "secure": false, 10 "value": "Some value" 11 }, 12 { 13 "domain": ".example.com", 14 "expires": "Thu, 01 Jun 2017 16:14:37 GMT", 15 "expiry": 1496333677, 16 "httponly": true, 17 "name": "OTHER_NAME", 18 "path": "/", 19 "secure": false, 20 "value": "Some other value" 21 } 22]

The Cookies persistence (cookiesPersistence) option

indicates how the crawler saves and reuses cookies.

When you start the crawler, the first PhantomJS process will

use the cookies defined by the cookies setting.

Subsequent PhantomJS processes will use cookies as follows:

Per single crawling process only"PER_PROCESS" | Cookies are only maintained separately by each PhantomJS crawling process for the lifetime of that process. The cookies are not shared between crawling processes. This means that whenever the crawler rotates its IP address, it will start again with cookies defined by the cookies setting. Use this setting for maximum privacy and to avoid detection of the crawler. This is the default option. |

Per full crawler run"PER_CRAWLER_RUN" | Indicates that cookies collected at the start of the crawl by the first PhantomJS process are reused by other PhantomJS processes, even when switching to a new IP address. This might be necessary to maintain a login performed at the beginning of your crawl, but it might help the server to detect the crawler. Note that cookies are only collected at the beginning of the crawl by the initial PhantomJS process. Cookies set by subsequent PhantomJS processes are only valid for the duration of that process and are not reused by other processes. This is necessary to enable crawl parallelization. |

Over all crawler runs"OVER_CRAWLER_RUNS" |

This setting is similar to Per full crawler run,

the only difference is that if the actor finishes with SUCCEEDED status,

its current cookies are automatically saved

to the cookies setting of the actor task

used to start the actor,

so that new crawler run starts where the previous run left off.

This is useful to keep login cookies fresh and avoid their expiration.

|

Crawling results

The crawling results are stored in the default dataset associated with the actor run, from where you can export them to formats such as JSON, XML, CSV or Excel. For each web page visited, the crawler pushes a single Request object with all the details about the page into the dataset.

To download the results, call the Get dataset items API endpoint:

https://api.apify.com/v2/datasets/[DATASET_ID]/items?format=json

where [DATASET_ID] is the ID of actor's run dataset,

which you can find the Run object returned when starting the actor.

The response looks as follows:

1[{ 2 "loadedUrl": "https://www.example.com/", 3 "requestedAt": "2019-04-02T21:27:33.674Z", 4 "type": "StartUrl", 5 "label": "START", 6 "pageFunctionResult": [ 7 { 8 "product": "iPhone X", 9 "price": 699 10 }, 11 { 12 "product": "Samsung Galaxy", 13 "price": 499 14 } 15 ], 16 ... 17}, 18... 19]

Note that in the full results of the legacy Crawler product results, each Request

object contained a field called errorInfo, even if it was empty.

In the dataset, this field is skipped if it's empty.

To download the data in simplified format known in the legacy Apify Crawler

product, add the simplified=1 query parameter:

https://api.apify.com/v2/datasets/[DATASET_ID]/items?format=json&simplified=1

The response will look like this:

1[{ 2 "url": "https://www.example.com/iphone-x", 3 "product": "iPhone X", 4 "price": 699 5}, 6{ 7 "url": "https://www.example.com/samsung-galaxy", 8 "product": "Samsung Galaxy", 9 "price": 499 10}, 11{ 12 "url": "https://www.example.com/nokia", 13 "errorInfo": "The page was not found", 14} 15]

To get the results in other formats, set format query parameter to xml, xlsx, csv, html, etc.

For full details, see the Get dataset items

endpoint in API reference.

To skip the records containing the errorInfo field from the results,

add the skipFailedPages=1 query parameter. This will ensure the results have a fixed structure, which is especially useful for tabular formats such as CSV or Excel.

Request object

The Request object contains all the available information about

every single web page the crawler encounters

(both visited and not visited). This object comes into play

in both Page function

and Intercept request function,

and crawling results are actually just an array of these objects.

The object has the following structure:

1{ 2 // A string with a unique identifier of the Request object. 3 // It is generated from the uniqueKey, therefore two pages from various crawls 4 // with the same uniqueKey will also have the same ID. 5 id: String, 6 7 // The URL that was specified in the web page's navigation request, 8 // possibly updated by the 'interceptRequest' function 9 url: String, 10 11 // The final URL reported by the browser after the page was opened 12 // (will be different from 'url' if there was a redirect) 13 loadedUrl: String, 14 15 // Date and time of the original web page's navigation request 16 requestedAt: Date, 17 // Date and time when the page load was initiated in the web browser, or null if it wasn't 18 loadingStartedAt: Date, 19 // Date and time when the page was actually loaded, or null if it wasn't 20 loadingFinishedAt: Date, 21 22 // HTTP status and headers of the loaded page. 23 // If there were any redirects, the status and headers correspond to the final response, not the intermediate responses. 24 responseStatus: Number, 25 responseHeaders: Object, 26 27 // If the page could not be loaded for any reason (e.g. a timeout), this field contains a best guess of 28 // the code of the error. The value is either one of the codes from QNetworkReply::NetworkError codes 29 // or value 999 for an unknown error. This field is used internally to retry failed page loads. 30 // Note that the field is only informative and might not be set for all types of errors, 31 // always use errorInfo to determine whether the page was processed successfully. 32 loadErrorCode: Number, 33 34 // Date and time when the page function started and finished 35 pageFunctionStartedAt: Date, 36 pageFunctionFinishedAt: Date, 37 38 // An arbitrary string that uniquely identifies the web page in the crawling queue. 39 // It is used by the crawler to determine whether a page has already been visited. 40 // If two or more pages have the same uniqueKey, then the crawler only visits the first one. 41 // 42 // By default, uniqueKey is generated from the 'url' property as follows: 43 // * hostname and protocol is converted to lower-case 44 // * trailing slash is removed 45 // * common tracking parameters starting with 'utm_' are removed 46 // * query parameters are sorted alphabetically 47 // * whitespaces around all components of the URL are trimmed 48 // * if the 'considerUrlFragment' setting is disabled, the URL fragment is removed completely 49 // 50 // If you prefer different generation of uniqueKey, you can override it in the 'interceptRequest' 51 // or 'context.enqueuePage' functions. 52 uniqueKey: String, 53 54 // Describes the type of the request. It can be either one of the following values: 55 // 'InitialAboutBlank', 'StartUrl', 'SingleUrl', 'ActorRequest', 'OnUrlChanged', 'UserEnqueued', 'FoundLink' 56 // or in case the request originates from PhantomJS' onNavigationRequested() it can be one of the following values: 57 // 'Undefined', 'LinkClicked', 'FormSubmitted', 'BackOrForward', 'Reload', 'FormResubmitted', 'Other' 58 type: String, 59 60 // Boolean value indicating whether the page was opened in a main frame or a child frame 61 isMainFrame: Boolean, 62 63 // HTTP POST payload 64 postData: String, 65 66 // Content-Type HTTP header of the POST request 67 contentType: String, 68 69 // Contains "GET" or "POST" 70 method: String, 71 72 // Indicates whether the page will be loaded by the crawler or not 73 willLoad: Boolean, 74 75 // Indicates the label specified in startUrls or crawlPurls config settings where URL/PURL corresponds 76 // to this page request. If more URLs/PURLs are matching, this field contains the FIRST NON-EMPTY 77 // label in order in which the labels appear in startUrls and crawlPurls arrays. 78 // Note that labels are not mandatory, so the field might be null. 79 label: String, 80 81 // ID of the Request object from whose page this Request was first initiated, or null. 82 referrerId: String, 83 84 // Contains the Request object corresponding to 'referrerId'. 85 // This value is only available in pageFunction and interceptRequest functions 86 // and can be used to access properties and page function results of the page linking to the current page. 87 // Note that the referrer Request object DOES NOT recursively define the 'referrer' property. 88 referrer: Object, 89 90 // How many links away from start URLs was this page found 91 depth: Number, 92 93 // If any error occurred while loading or processing the web page, 94 // this field contains a non-empty string with a description of the error. 95 // The field is used for all kinds of errors, such as page load errors, the page function or 96 // intercept request function exceptions, timeouts, internal crawler errors etc. 97 // If there is no error, the field is a false-ish value (empty string, null or undefined). 98 errorInfo: String, 99 100 // Results of the user-provided 'pageFunction' 101 pageFunctionResult: Anything, 102 103 // A field that might be used by 'interceptRequest' function to save custom data related to this page request 104 interceptRequestData: Anything, 105 106 // Total size of all resources downloaded during this request 107 downloadedBytes: Number, 108 109 // Indicates the position where the request will be placed in the crawling queue. 110 // Can either be 'LAST' to put the request to the end of the queue (default behavior) 111 // or 'FIRST' to put it before any other requests. 112 queuePosition: String, 113 114 // Custom proxy used by the crawler, or null if custom proxies were not used. 115 // For security reasons, the username and password are redacted from the URL. 116 proxy: String 117}

Actor Metrics

100 monthly users

-

21 stars

>99% runs succeeded

7.8 days response time

Created in Mar 2019

Modified 5 months ago

Apify

Apify