LLM Hallucination Detector – Detect Unsupported AI Claims

Pricing

from $0.01 / 1,000 results

Under maintenance

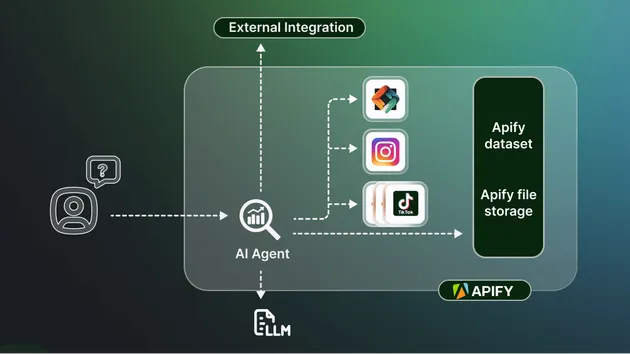

Detect hallucinations, unsupported claims, and overconfident language in LLM outputs. Ideal for RAG pipelines, AI agents, and production QA.

Rating: 0.0 (0 reviews)

Developer: JAYESH SOMANI (maintained by Community)

Actor stats

Bookmarked: 0
Total users: 1
Monthly active users: 0
Last modified: 2 months ago
