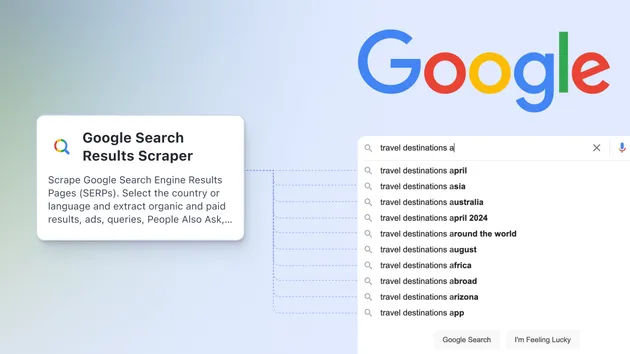

Google SERP Scraper

Pricing

$30.00/month + usage

Extracts detailed data from Google Search Engine Results Pages (SERPs) for a given search, including organic and paid listings, descriptions, URLs, and emphasized keywords. Ideal for digital marketers, SEO professionals, and market researchers. It does not scrape the individual sites listed in the results, which keeps each run light and simple.

Rating: 1.0 (2)

Developer: AI_Builder

Actor stats

- Bookmarked: 5

- Total users: 200

- Monthly active users: 0

- Last modified: 10 months ago

JavaScript PuppeteerCrawler Actor template

This template is a production-ready boilerplate for developing with PuppeteerCrawler. The PuppeteerCrawler provides a simple framework for parallel crawling of web pages using headless Chrome with Puppeteer. Because PuppeteerCrawler uses headless Chrome to download web pages and extract data, it is useful for crawling websites that require JavaScript execution.

Included features

- Puppeteer Crawler - simple framework for parallel crawling of web pages using headless Chrome with Puppeteer

- Configurable Proxy - tool for working around IP blocking

- Input schema - define and easily validate a schema for your Actor's input

- Dataset - store structured data where each object stored has the same attributes

- Apify SDK - toolkit for building Actors

How it works

- `Actor.getInput()` gets the input from `INPUT.json`, where the start URLs are defined.

- Create a configuration for proxy servers to be used during the crawling with `Actor.createProxyConfiguration()` to work around IP blocking. Use Apify Proxy or your own proxy URLs, provided and rotated according to the configuration. You can read more about proxy configuration here.

- Create an instance of Crawlee's Puppeteer Crawler with `new PuppeteerCrawler()`. You can pass options to the crawler constructor, such as:

  - `proxyConfiguration` - provide the proxy configuration to the crawler

  - `requestHandler` - handle each request with the custom router defined in the `routes.js` file

- Handle requests with the custom router from the `routes.js` file. Read more about custom routing for the Cheerio Crawler here.

  - Create a new router instance with `createPuppeteerRouter()`

  - Define a default handler that will be called for all URLs that are not handled by other handlers by adding `router.addDefaultHandler(() => { ... })`

  - Define additional handlers - here you can add your own handling of the page

        router.addHandler('detail', async ({ request, page, log }) => {
            const title = await page.title();
            // You can add your own page handling here
            await Dataset.pushData({
                url: request.loadedUrl,
                title,
            });
        });

- `crawler.run(startUrls);` starts the crawler and waits for it to finish.
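The steps above can be sketched as a single file. This is a minimal illustration, not this Actor's actual source: the helpers `resolveStartUrls` and `handleDetail`, the `https://example.com` fallback, and the choice to load `apify` and `crawlee` lazily inside `main()` are all assumptions made for the example. It assumes both packages are installed.

```javascript
// Sketch only: wires the "How it works" steps together.
// `apify` and `crawlee` are imported lazily inside main() so the two pure
// helpers below can be exercised without a browser or network access.

// Hypothetical helper: use the input's start URLs, or a placeholder fallback.
function resolveStartUrls(input, fallback = ['https://example.com']) {
    const urls = input && Array.isArray(input.startUrls) ? input.startUrls : [];
    return urls.length > 0 ? urls : fallback;
}

// Hypothetical helper: body of the 'detail' handler from the snippet above.
// `pushData` is injected so this function does not depend on Crawlee itself.
async function handleDetail({ request, page, log }, pushData) {
    const title = await page.title();
    log.info(`Storing title of ${request.loadedUrl}`);
    await pushData({ url: request.loadedUrl, title });
}

async function main() {
    const { Actor } = await import('apify');
    const { PuppeteerCrawler, createPuppeteerRouter, Dataset } = await import('crawlee');

    await Actor.init();

    // Step 1: read the input and pick the start URLs.
    const startUrls = resolveStartUrls(await Actor.getInput());

    // Step 2: proxy configuration to work around IP blocking.
    const proxyConfiguration = await Actor.createProxyConfiguration();

    // Step 4: custom router (normally kept in routes.js).
    const router = createPuppeteerRouter();
    router.addDefaultHandler(async ({ enqueueLinks, log }) => {
        log.info('Enqueueing new URLs');
        await enqueueLinks({ label: 'detail' });
    });
    router.addHandler('detail', (ctx) => handleDetail(ctx, (item) => Dataset.pushData(item)));

    // Step 3: crawler instance using the proxy configuration and the router.
    const crawler = new PuppeteerCrawler({ proxyConfiguration, requestHandler: router });

    // Step 5: start the crawl and wait for it to finish.
    await crawler.run(startUrls);
    await Actor.exit();
}
```

Calling `main()` from the Actor's entry file would start the run. Injecting `pushData` into `handleDetail` is a design choice for this sketch only; it lets the handler logic be checked with stub objects instead of a real page.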

Resources

If you're looking for examples or want to learn more, visit:

- Crawlee + Apify Platform guide

- Documentation and examples

- Node.js tutorials in Academy

- How to scale Puppeteer and Playwright

- Video guide on getting data using Apify API

- Integration with Make, GitHub, Zapier, Google Drive, and other apps

- A short guide on how to create Actors using code templates

Getting started

For complete information see this article. In short, you will:

- Build the Actor

- Run the Actor

Pull the Actor for local development

If you would like to develop locally, you can pull the existing Actor from Apify console using Apify CLI:

- Install `apify-cli`

  Using Homebrew

      $ brew install apify-cli

  Using NPM

      $ npm -g install apify-cli

- Pull the Actor by its unique `<ActorId>`, which is one of the following:

  - unique name of the Actor to pull (e.g. "apify/hello-world")

  - or ID of the Actor to pull (e.g. "E2jjCZBezvAZnX8Rb")

  You can find both by clicking on the Actor title at the top of the page, which will open a modal containing both the Actor unique name and the Actor ID.

  This command will copy the Actor into the current directory on your local machine.

      $ apify pull <ActorId>

Documentation reference

To learn more about Apify and Actors, take a look at the following resources: