Ai Memory Engine

Pricing

from $0.01 / 1,000 results

Ai Memory Engine

Give your AI persistent memory. Store conversations, search semantically, build knowledge graphs, and watch your AI get smarter with every interaction.

Pricing

from $0.01 / 1,000 results

Rating

0.0

(0)

Developer

Reuven Cohen

Actor stats

1

Bookmarked

3

Total users

0

Monthly active users

2 months ago

Last modified

Categories

Share

AI Memory Engine - Smart Database That Learns & Remembers

Give your AI persistent memory. Store conversations, search semantically, build knowledge graphs, and watch your AI get smarter with every interaction. The ultimate memory solution for AI agents, chatbots, and intelligent automation.

🧠 Self-Learning · 🔍 Semantic Search · 🕸️ Knowledge Graphs · ⚡ Sub-millisecond · 🔗 LLM Agnostic · 🔌 One-Click Integrations

Why AI Memory Engine?

The Problem: Every AI application needs memory, but building it is complex. You need embeddings, vector databases, search algorithms, and persistence - all expensive and time-consuming to set up.

The Solution: AI Memory Engine gives you production-ready AI memory in one click. Built on RuvLLM with native SIMD acceleration, HNSW indexing, and SONA self-learning - no external dependencies required.

What Makes This Different

| Feature | AI Memory Engine | Traditional Solutions |

|---|---|---|

| Setup Time | 1 minute | Hours to days |

| Cost | Pay per use ($0.001/operation) | $50-500/month subscriptions |

| Memory Persistence | Built-in cross-session | Requires external DB |

| Self-Learning | SONA neural architecture | Manual tuning |

| Apify Integration | One-click from any actor | Custom code required |

| Export Options | 6 vector DB formats | Limited |

Key Capabilities

27 Actions including:

- Core: store, search, get, list, update, delete, clear

- Advanced Search: batch_search, hybrid_search, find_duplicates, deduplicate

- AI Features: chat, recommend, analyze, build_knowledge, learn

- Integration: integrate_actor, integrate_synthetic, integrate_scraper, template

- Utilities: natural language commands, cluster, export_vectordb, feedback

One-Click Actor Integration with 10+ popular scrapers:

- Google Maps, Instagram, TikTok, YouTube, Twitter, Amazon, TripAdvisor, LinkedIn, and more

- Automatically memorize any scraper results with semantic search

6 Pre-Built Templates for instant deployment:

- Lead Intelligence, Customer Support, Research Assistant

- Competitor Intelligence, Content Library, Product Catalog

What Does This Do?

| Without AI Memory | With AI Memory Engine |

|---|---|

| AI forgets everything between sessions | Remembers all conversations |

| Same questions, same generic answers | Personalized, context-aware responses |

| No learning from interactions | Gets smarter with every use |

| Expensive vector DB subscriptions | Built-in, no external dependencies |

| Complex RAG setup | One-click semantic search |

| Manual integration with scrapers | One-click memory from any actor |

| Learn new tools for each vector DB | Export to Pinecone, Weaviate, ChromaDB |

Use Cases

💬 Chatbots with Memory

Your chatbot remembers every conversation and provides personalized responses.

📚 RAG (Retrieval Augmented Generation)

Then search with natural language:

🛍️ Recommendation Engine

Get personalized recommendations based on learned patterns.

🧠 Knowledge Graph

Automatically extract entities and relationships.

Quick Start (1 Minute)

Try the Demo

This will:

- Store sample memories (customer preferences, product info, support tickets)

- Run semantic searches

- Generate recommendations

- Show you what's possible

Output:

Core Features

1. Store Memories

Add any text to your AI's memory:

Output:

2. Semantic Search

Find relevant memories using natural language:

Output:

3. Chat with Memory

Have conversations where AI remembers everything:

Output:

4. Build Knowledge Graphs

Automatically extract entities and relationships:

Output:

5. Analyze Patterns

Get insights from your stored memories:

Output:

Integrations

🔗 Integrate with Synthetic Data Generator

Generate test data and automatically memorize it:

This calls the AI Synthetic Data Generator and stores the results as searchable memories.

Supported data types:

ecommerce- Product catalogsjobs- Job listingsreal_estate- Property listingssocial- Social media postsstock_trading- Market datamedical- Healthcare recordscompany- Corporate data

🌐 Integrate with Web Scraper

Scrape websites and build a searchable knowledge base:

Perfect for:

- Documentation search

- Competitor analysis

- Content aggregation

- Research databases

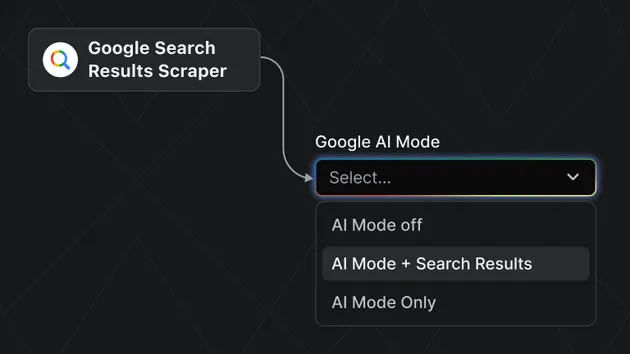

🔌 One-Click Actor Integration

Instantly memorize results from any Apify actor:

Supported actors (one-click ready):

| Actor | What Gets Memorized |

|---|---|

apify/google-maps-scraper | Business name, address, rating, reviews |

apify/instagram-scraper | Captions, hashtags, engagement |

apify/tiktok-scraper | Video text, author, play count |

apify/youtube-scraper | Titles, descriptions, view counts |

apify/twitter-scraper | Tweets, authors, engagement |

apify/amazon-scraper | Products, prices, ratings |

apify/tripadvisor-scraper | Venues, ratings, locations |

apify/linkedin-scraper | Profiles, headlines, companies |

apify/web-scraper | Any website content |

apify/website-content-crawler | Full page content |

Example: Build a local business database:

Then search naturally:

Pre-Built Templates

Get started instantly with industry templates:

Available templates:

| Template | Use Case |

|---|---|

lead-intelligence | Sales lead tracking, CRM enrichment |

customer-support | FAQ knowledge base, ticket resolution |

research-assistant | Academic & market research |

competitor-intelligence | Market tracking, competitive analysis |

content-library | Content ideas, editorial planning |

product-catalog | E-commerce knowledge base |

Each template includes:

- Sample memories for immediate use

- Suggested search queries

- Optimized metadata structure

Natural Language Commands

Talk to your memory database in plain English:

Supported commands:

| Command | Action |

|---|---|

remember [text] | Store a new memory |

forget about [topic] | Remove related memories |

what do you know about [topic] | Semantic search |

find [query] | Search memories |

how many memories | Get stats |

list memories | Show all memories |

clear everything | Reset database |

analyze | Get insights |

find duplicates | Detect duplicates |

export | Download data |

help | Show commands |

Examples:

Memory Clustering

Automatically group memories by similarity:

Output:

Use cases:

- Discover topics in large memory collections

- Identify content gaps

- Organize knowledge automatically

- Find related memories without a query

Vector DB Export

Export to any vector database:

Supported formats:

| Format | Database | Output Structure |

|---|---|---|

pinecone | Pinecone | {id, values, metadata} |

weaviate | Weaviate | {id, vector, properties} |

chromadb | ChromaDB | {ids, embeddings, documents, metadatas} |

qdrant | Qdrant | {id, vector, payload} |

langchain | LangChain | {pageContent, metadata, embeddings} |

openai | OpenAI-compatible | {input, embedding} |

Example: Migrate to Pinecone

Output:

Session Persistence

Keep memories across multiple runs:

Later, in another run:

All memories from the session are automatically restored.

Export & Import

Export Memories

Formats available:

json- Full export with metadata and embeddingscsv- Spreadsheet compatibleembeddings- Raw vectors for ML pipelines

Import Memories

Configuration Options

Embedding Models

| Model | Dimensions | Speed | Quality | API Required |

|---|---|---|---|---|

local-384 | 384 | ⚡⚡⚡ Fastest | Good | No |

local-768 | 768 | ⚡⚡ Fast | Better | No |

gemini | 768 | ⚡ Normal | Best | Yes (free tier) |

openai | 1536 | ⚡ Normal | Best | Yes |

Distance Metrics

| Metric | Best For |

|---|---|

cosine | Text similarity (default) |

euclidean | Numerical data |

dot_product | Normalized vectors |

manhattan | Outlier-resistant |

AI Providers

| Provider | Models Available |

|---|---|

local | No LLM (search only) |

gemini | Gemini 2.0 Flash, 1.5 Pro |

openrouter | GPT-4o, Claude 3.5, Llama 3.3, 100+ models |

API Integration

Python

JavaScript

cURL

Pricing

This actor uses Pay-Per-Event pricing:

| Event | Price | Description |

|---|---|---|

apify-actor-start | $0.00005 | Actor startup (per GB memory) |

apify-default-dataset-item | $0.00001 | Result in dataset |

memory-store | $0.001 | Store memory with RuvLLM embeddings |

memory-search | $0.001 | Semantic search with HNSW |

chat-interaction | $0.003 | AI chat with memory context |

knowledge-graph-build | $0.005 | Build knowledge graph |

recommendation | $0.002 | Get recommendations (per batch) |

pattern-analysis | $0.003 | Analyze patterns with SONA |

memory-export | $0.002 | Export database |

memory-import | $0.002 | Import data |

synthetic-integration | $0.005 | Integrate with synthetic data |

scraper-integration | $0.01 | Integrate with web scraper |

learning-cycle | $0.005 | Force SONA learning cycle |

Example costs:

- Store 1,000 memories: $1.00

- 1,000 searches: $1.00

- 100 chat interactions: $0.30

- Build knowledge graph: $0.005

Performance

| Operation | Latency | Throughput |

|---|---|---|

| Store memory | ~2ms | 500/sec |

| Search (1000 memories) | ~5ms | 200/sec |

| Search (10000 memories) | ~20ms | 50/sec |

| Chat with context | ~100ms | 10/sec |

FAQ

Q: How long are memories stored?

A: Memories persist as long as you use a sessionId. Without a session, memories exist only for the run.

Q: Can I use this with my own LLM? A: Yes! Use OpenRouter to access 100+ models including GPT-4, Claude, Llama, Mistral, and more.

Q: Is there a limit on memories? A: No hard limit. Performance is optimized for up to 100,000 memories per session.

Q: Can I use this for production? A: Absolutely! The actor is designed for production workloads with session persistence and high throughput.

Q: Does it work without an API key? A: Yes! Local embeddings and search work without any API. LLM features require Gemini or OpenRouter key.

MCP Integration (Model Context Protocol)

AI Memory Engine is fully compatible with Apify's MCP server, allowing AI agents (Claude, GPT-4, etc.) to directly interact with your memory database.

Quick Setup

Add to Claude Code (one command):

Replace

YOUR_APIFY_TOKENwith your Apify API token.

For Claude Desktop / VS Code / Cursor (config file):

Alternative: Local MCP Server

What AI Agents Can Do

Once connected, AI agents can autonomously:

- Store memories from conversations

- Search semantically through stored knowledge

- Build knowledge graphs from unstructured data

- Get recommendations based on patterns

- Manage sessions across conversations

Example AI Agent Workflow

MCP Resources

Related Actors

- AI Synthetic Data Generator - Generate mock data for testing

- Self-Learning AI Memory - PostgreSQL-based vector storage

Links

Built with RuVector - High-performance vector database for AI applications.