RAG Pipeline Data Collector

Pricing

$5.00 / 1,000 results

AI-ready web content extraction for RAG systems, LLMs, and AI agents. Single-page or multi-page scraping with parallel processing.

Rating: 0.0 (0 ratings)

Developer: LIAICHI MUSTAPHA

Actor stats: 0 bookmarked, 3 total users, 0 monthly active users, last modified a month ago

RAG Pipeline Data Collector - AI-Ready Web Content Extraction

Extract clean, structured web content optimized for RAG systems, LLMs, and AI agents. Built with Crawl4AI for lightning-fast parallel processing and intelligent content filtering.

🎯 What is RAG Pipeline Data Collector?

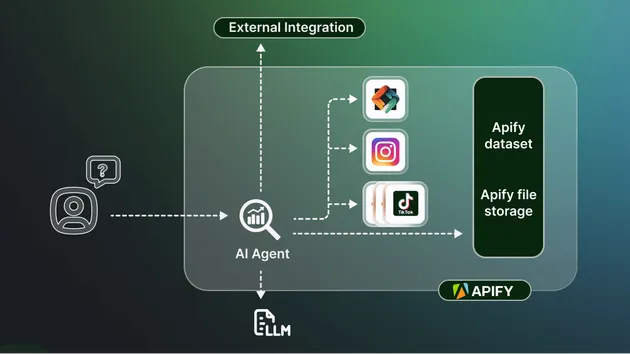

The RAG Pipeline Data Collector is a web scraping Actor designed specifically for AI and machine learning workflows. It transforms raw web pages into clean, structured Markdown or HTML content that's ready to feed into RAG (Retrieval-Augmented Generation) systems, vector databases, LLM training pipelines, and AI agents.

Unlike traditional web scrapers, this Actor focuses on extracting meaningful content while removing navigation menus, ads, footers, and other noise that would pollute your AI training data or RAG knowledge base.

Perfect for:

- 🤖 Building RAG systems and AI chatbots

- 📚 Creating knowledge bases for LLMs

- 🔍 Training data collection for machine learning

- 💬 Content ingestion for vector databases (Pinecone, Weaviate, Chroma)

- 🔗 n8n, Zapier, and Make.com automation workflows

- 📊 Large-scale content analysis and research

🚀 Key Features

Dual Operating Modes

Single Page Mode - Fast API-style extraction

- Extract individual pages in 15-30 seconds

- Perfect for real-time integrations

- Ideal for n8n/Zapier/Make workflows

- On-demand content processing

Multi-Page Mode - Bulk extraction with parallel processing

- Process 50+ pages simultaneously

- 5-10x faster than sequential scraping

- Three intelligent crawl strategies

- Complete knowledge base extraction

Three Crawl Strategies

Sitemap Strategy 📋

- Automatically parse sitemap.xml

- Fastest parallel processing

- Complete site coverage

- Best for: Documentation sites, blogs, news sites

Deep Crawl Strategy 🕸️

- Follow internal links recursively

- Control depth (1-5 levels)

- Discover hidden content

- Best for: Sites without sitemaps, complex navigation

Archive Discovery 📰

- Intelligent pattern detection (/blog, /posts, /archive)

- Targeted content discovery

- Blog-focused extraction

- Best for: Content-heavy sites, news archives
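To make the Sitemap and Archive Discovery strategies concrete, here is a minimal Python sketch that uses only the standard library. It pulls every `<loc>` entry out of a sitemap.xml document, then keeps the URLs whose path matches the /blog, /posts, or /archive patterns listed above. This is an illustration under those assumptions, not the Actor's actual Crawl4AI-based implementation; the function names and the exact regex are hypothetical.

```python
import re
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace used by the sitemaps.org protocol for <urlset> and <sitemapindex>.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical archive pattern built from the examples above (/blog, /posts, /archive).
ARCHIVE_PATTERN = re.compile(r"/(blog|posts|archive)(/|$)")


def parse_sitemap(xml_text: str) -> list[str]:
    """Return every <loc> URL in a sitemap document.

    For a <sitemapindex>, these are child sitemap URLs that would need to be
    fetched and parsed in turn; for a <urlset>, they are page URLs.
    """
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.iterfind(".//sm:loc", SITEMAP_NS)
        if loc.text and loc.text.strip()
    ]


def archive_candidates(urls: list[str]) -> list[str]:
    """Keep only URLs whose path looks like a blog, posts, or archive section."""
    return [u for u in urls if ARCHIVE_PATTERN.search(urlparse(u).path)]


if __name__ == "__main__":
    sample = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://example.com/blog/post-1</loc></url>"
        "<url><loc>https://example.com/about</loc></url>"
        "</urlset>"
    )
    urls = parse_sitemap(sample)
    print(urls)                      # ['https://example.com/blog/post-1', 'https://example.com/about']
    print(archive_candidates(urls))  # ['https://example.com/blog/post-1']
```

Fetching the sitemap itself is left out so the sketch runs offline; the `(/|$)` suffix in the pattern keeps paths such as /blogroll from matching.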

Clean, AI-Ready Output

- ✅ Markdown Output - Perfectly formatted for LLMs

- ✅ Noise Removal - Intelligent filtering of ads, navigation, footers

- ✅ Metadata Extraction - Title, description, author, language

- ✅ Image URLs - All images with full URLs

- ✅ Link Extraction - Internal and external links separated

- ✅ Statistics - Word count, character count, image count

💡 Use Cases

RAG Systems & Vector Databases

Feed clean, structured content directly into your RAG pipeline.
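A typical first step on the way into a vector database is splitting each page's Markdown into overlapping chunks and attaching the source URL and title as metadata. The Python sketch below shows one simple way to do that. The page keys `url`, `title`, and `markdown` are assumptions (check them against the Actor's actual output), and fixed-size word windows are just one chunking strategy among many.

```python
def chunk_words(text: str, max_words: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of at most max_words words, each sharing
    `overlap` words with the previous window. Whitespace is normalized."""
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reached the end of the text
    return chunks


def to_vector_records(page: dict, **chunk_opts) -> list[dict]:
    """Turn one scraped page into records ready for embedding and upserting.

    The keys "url", "title" and "markdown" are hypothetical field names.
    """
    return [
        {
            "id": f"{page['url']}#chunk-{i}",
            "text": chunk,
            "metadata": {"url": page["url"], "title": page.get("title", "")},
        }
        for i, chunk in enumerate(chunk_words(page["markdown"], **chunk_opts))
    ]


if __name__ == "__main__":
    page = {"url": "https://example.com/docs", "title": "Docs", "markdown": "one two three four five"}
    for record in to_vector_records(page, max_words=3, overlap=1):
        print(record["id"], "->", record["text"])
    # https://example.com/docs#chunk-0 -> one two three
    # https://example.com/docs#chunk-1 -> three four five
```

Because the sketch splits on whitespace, it flattens Markdown line breaks; a heading-aware splitter may keep sections more coherent for retrieval.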

n8n Automation Workflows

- Add Apify node to your workflow

- Select RAG Pipeline Data Collector

- Configure single or multi-page mode

- Connect to Pinecone, Weaviate, or Supabase nodes

- Automate your RAG data pipeline

Content Analysis & Research

Extract and analyze large volumes of content:

- Competitor research and monitoring

- Market intelligence gathering

- Academic research data collection

- Content aggregation for newsletters

AI Training Data Collection

Build high-quality training datasets:

- Clean, structured text for fine-tuning

- Consistent format across sources

- Metadata for context preservation

- Scalable bulk extraction

📥 Input Configuration

Single Page Mode

Multi-Page Mode (Sitemap)

Multi-Page Mode (Deep Crawl)

Multi-Page Mode (Archive Discovery)
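As a rough guide to how these four configurations differ, the Python sketch below assembles an input dictionary for each mode and checks the documented limits (Max Pages 1-500, Max Depth 1-5). Only `remove_noise`, `max_pages`, `max_depth` and the values `markdown`, `raw_html`, and `sitemap` appear elsewhere in this README. Every other key and value (`mode`, `url`, `crawl_strategy`, `output_format`, `single`, `multi`, `deep_crawl`, `archive`, `html`) and every default is a hypothetical placeholder, so check the Actor's input schema before relying on them.

```python
from typing import Optional

# Hypothetical value sets; only "sitemap", "markdown" and "raw_html" are named in this README.
STRATEGIES = {"sitemap", "deep_crawl", "archive"}
OUTPUT_FORMATS = {"markdown", "html", "raw_html"}


def build_input(
    url: str,
    mode: str = "single",
    strategy: Optional[str] = None,
    output_format: str = "markdown",
    max_pages: int = 50,
    max_depth: int = 2,
    remove_noise: bool = True,
) -> dict:
    """Build a run input dict; raises ValueError on out-of-range settings."""
    if not url.startswith(("http://", "https://")):
        raise ValueError("url must be an absolute http(s) URL")
    if output_format not in OUTPUT_FORMATS:
        raise ValueError(f"unknown output format: {output_format!r}")
    run_input = {"mode": mode, "url": url, "output_format": output_format, "remove_noise": remove_noise}
    if mode == "single":
        return run_input  # single page: no crawl settings needed
    if mode != "multi":
        raise ValueError(f"unknown mode: {mode!r}")
    if strategy not in STRATEGIES:
        raise ValueError(f"unknown crawl strategy: {strategy!r}")
    if not 1 <= max_pages <= 500:
        raise ValueError("max_pages must be between 1 and 500")
    run_input.update(crawl_strategy=strategy, max_pages=max_pages)
    if strategy == "deep_crawl":
        if not 1 <= max_depth <= 5:
            raise ValueError("max_depth must be between 1 and 5")
        run_input["max_depth"] = max_depth  # depth only matters when following links
    return run_input


if __name__ == "__main__":
    print(build_input("https://example.com/page"))
    print(build_input("https://docs.example.com", mode="multi", strategy="sitemap", max_pages=200))
    print(build_input("https://example.com", mode="multi", strategy="deep_crawl", max_depth=3))
    print(build_input("https://example.com", mode="multi", strategy="archive", max_pages=100))
```

Attaching `max_depth` only for deep crawls is a design choice of this sketch, since the other two strategies do not follow links recursively.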

📤 Output Format

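Each scraped page is returned as a structured JSON object containing the extracted content, page metadata (title, description, author, language), image URLs, internal and external links, and statistics (word, character, and image counts).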
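The exact field names of that object aren't listed here, so the Python sketch below uses hypothetical keys to show how such a record can be assembled: image and link URLs are resolved to absolute form with `urljoin`, links are split into internal and external by comparing hostnames with the page's own, and the statistics are computed from the Markdown text. It illustrates the output shape described above and is not the Actor's code.

```python
from urllib.parse import urljoin, urlparse


def build_record(page_url: str, title: str, markdown: str,
                 image_srcs: list[str], hrefs: list[str]) -> dict:
    """Assemble one page record. All key names are hypothetical."""
    host = urlparse(page_url).netloc
    # Resolve relative links against the page URL and keep only http(s) targets.
    links = [urljoin(page_url, h) for h in hrefs]
    links = [u for u in links if urlparse(u).scheme in ("http", "https")]
    images = [urljoin(page_url, src) for src in image_srcs]
    return {
        "url": page_url,
        "title": title,
        "markdown": markdown,
        "images": images,
        "links": {
            "internal": [u for u in links if urlparse(u).netloc == host],
            "external": [u for u in links if urlparse(u).netloc != host],
        },
        "stats": {
            # Naive whitespace split, so Markdown markers such as "#" count as words.
            "word_count": len(markdown.split()),
            "char_count": len(markdown),
            "image_count": len(images),
        },
    }


if __name__ == "__main__":
    import json

    record = build_record(
        "https://example.com/docs/page",
        "Docs",
        "# Hello world\n\nSome text.",
        ["/img/a.png"],
        ["/about", "https://other.org/x", "mailto:hi@example.com"],
    )
    print(json.dumps(record, indent=2))
```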

🔧 How It Works

The Actor uses Crawl4AI, a cutting-edge web scraping framework optimized for AI applications:

- Intelligent Rendering - Handles JavaScript-heavy sites with Playwright

- Parallel Processing - Scrapes multiple pages simultaneously (5-20x faster)

- Noise Filtering - Removes ads, navigation, and footers using the `fit_markdown` algorithm

- LLM-Optimized Output - Clean Markdown perfect for AI consumption

- Smart Crawling - Three strategies to handle any site structure
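The parallel-processing idea can be sketched with Python's `asyncio`: a semaphore caps how many pages are in flight at once, and `asyncio.gather` waits for the whole batch. The `fetch` coroutine is a stand-in for whatever actually renders the page (in the Actor, Crawl4AI with Playwright); its name, the default concurrency of 10, and the per-page error handling are assumptions for illustration.

```python
import asyncio
from typing import Awaitable, Callable, Union


async def crawl_all(
    urls: list[str],
    fetch: Callable[[str], Awaitable[str]],
    max_concurrency: int = 10,
) -> dict[str, Union[str, Exception]]:
    """Fetch all URLs concurrently, never running more than max_concurrency at once."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def worker(url: str):
        async with semaphore:  # waits here while max_concurrency fetches are running
            try:
                return url, await fetch(url)
            except Exception as exc:  # one failing page should not sink the batch
                return url, exc

    pairs = await asyncio.gather(*(worker(u) for u in urls))
    return dict(pairs)


if __name__ == "__main__":
    async def fake_fetch(url: str) -> str:
        await asyncio.sleep(0.1)  # pretend each page takes 100 ms to render
        return f"# Content of {url}"

    urls = [f"https://example.com/page-{i}" for i in range(20)]
    results = asyncio.run(crawl_all(urls, fake_fetch, max_concurrency=10))
    print(len(results), "pages")  # 20 pages, in roughly 0.2 s rather than 2 s sequentially
```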

Performance Expectations

- Single Page Mode: 15-30 seconds per page

- Multi-Page (Sitemap): 1-2 minutes for 50 pages

- Multi-Page (Deep Crawl): 2-5 minutes for 50 pages (varies by depth)

- Multi-Page (Archive): 1-3 minutes for 50 pages

💰 Pricing & Compute Units

This Actor is optimized for cost-effective operation:

- Single Page Mode: ~0.05-0.1 CU per page

- Multi-Page Mode: ~2-5 CU per 50 pages (parallel processing advantage)

Recommended Memory: 4096 MB for optimal performance
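For back-of-the-envelope budgeting, the figures above can be combined in a few lines of Python. The sketch assumes each scraped page produces exactly one billed result at $5.00 per 1,000 results, and it scales the approximate compute-unit ranges quoted above linearly; real usage will vary with the site, crawl strategy, and memory setting.

```python
PRICE_PER_1000_RESULTS_USD = 5.00   # from the pricing listed above
CU_PER_PAGE_SINGLE = (0.05, 0.1)    # single page mode, approximate range
CU_PER_50_PAGES_MULTI = (2.0, 5.0)  # multi-page mode, approximate range


def estimate(pages: int, mode: str = "multi") -> dict:
    """Rough cost estimate, assuming one dataset result per scraped page."""
    if pages < 0:
        raise ValueError("pages must be non-negative")
    if mode == "single":
        low, high = (pages * cu for cu in CU_PER_PAGE_SINGLE)
    elif mode == "multi":
        low, high = (pages / 50 * cu for cu in CU_PER_50_PAGES_MULTI)
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return {
        "result_cost_usd": pages * PRICE_PER_1000_RESULTS_USD / 1000,
        "compute_units": (low, high),
    }


if __name__ == "__main__":
    print(estimate(50, "multi"))  # {'result_cost_usd': 0.25, 'compute_units': (2.0, 5.0)}
```

For 1,000 pages in multi-page mode, for example, that works out to $5.00 in result charges plus roughly 40-100 CU (1,000 / 50 = 20 batches of 2-5 CU each).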

📊 Example Runs

Coming soon! Check back for public run examples.

🛠️ Advanced Features

Output Format Options

- Markdown: Clean, LLM-friendly format (recommended for RAG)

- HTML: Cleaned HTML with noise removed

- Raw HTML: Original HTML without processing

Content Filtering

- Noise Removal: Automatically removes navigation, ads, footers

- Image Filtering: Include/exclude images

- Link Filtering: Include/exclude links

- Metadata Control: Include/exclude page metadata
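To illustrate what noise removal means in practice, here is a deliberately simple Python sketch that drops text inside `<nav>`, `<header>`, `<footer>`, `<aside>`, `<script>`, `<style>`, and `<noscript>` elements using the standard library's `HTMLParser`. This tag-based heuristic is chosen for the example only; it is not the Crawl4AI `fit_markdown` filtering that the Actor relies on, and the tag list is an assumption.

```python
from html.parser import HTMLParser

# Elements treated as noise in this sketch (an assumption, not the Actor's list).
NOISE_TAGS = {"nav", "header", "footer", "aside", "script", "style", "noscript"}


class NoiseStripper(HTMLParser):
    """Collect visible text, skipping everything nested inside a noise element."""

    def __init__(self) -> None:
        super().__init__()
        self.noise_depth = 0  # > 0 while inside at least one noise element
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in NOISE_TAGS:
            self.noise_depth += 1

    def handle_endtag(self, tag):
        if tag in NOISE_TAGS and self.noise_depth:
            self.noise_depth -= 1

    def handle_data(self, data):
        if not self.noise_depth and data.strip():
            self.parts.append(data.strip())


def visible_text(html: str) -> str:
    parser = NoiseStripper()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


if __name__ == "__main__":
    page = (
        "<html><body><nav>Home | Blog | About</nav>"
        "<main><h1>Title</h1><p>Body text.</p></main>"
        "<footer>Copyright</footer><script>track();</script></body></html>"
    )
    print(visible_text(page))  # Title Body text.
```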

Crawl Configuration

- Max Pages: Control total pages (1-500)

- Max Depth: Control crawl depth (1-5 levels)

- Same Domain Only: Restrict to starting domain

- Pattern Matching: Custom URL filtering (coming soon)
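A breadth-first traversal is one natural way to combine these settings. The Python sketch below follows links level by level, stops at Max Pages or Max Depth, optionally drops links to other domains, and skips URLs it has already queued. `get_links` is a hypothetical stand-in for fetching a page and extracting its links, and counting the start page as depth 0 is an assumption about how the Actor counts levels.

```python
from collections import deque
from typing import Callable, Iterable
from urllib.parse import urldefrag, urljoin, urlparse


def deep_crawl(
    start_url: str,
    get_links: Callable[[str], Iterable[str]],
    max_pages: int = 50,
    max_depth: int = 2,
    same_domain_only: bool = True,
) -> list[tuple[str, int]]:
    """Breadth-first crawl; returns (url, depth) pairs in visit order."""
    if not 1 <= max_pages <= 500 or not 1 <= max_depth <= 5:
        raise ValueError("max_pages must be 1-500 and max_depth 1-5")
    start_url, _ = urldefrag(start_url)
    start_host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([(start_url, 0)])
    visited = []
    while queue and len(visited) < max_pages:
        url, depth = queue.popleft()
        visited.append((url, depth))
        if depth == max_depth:
            continue  # deep enough: record the page but do not expand its links
        for href in get_links(url):
            link, _ = urldefrag(urljoin(url, href))  # absolute URL, "#section" removed
            parsed = urlparse(link)
            if parsed.scheme not in ("http", "https"):
                continue
            if same_domain_only and parsed.netloc != start_host:
                continue
            if link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return visited


if __name__ == "__main__":
    site = {
        "https://example.com/": ["/docs", "/blog", "https://other.org/"],
        "https://example.com/docs": ["/docs/intro", "/#top"],
        "https://example.com/blog": ["/docs"],
    }
    for url, depth in deep_crawl("https://example.com/", lambda u: site.get(u, []), max_depth=2):
        print(depth, url)
```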

🔗 Integration Examples

Make.com (Integromat)

- Add Apify module

- Select Run Actor

- Choose RAG Pipeline Data Collector

- Configure input parameters

- Map output to your RAG pipeline modules

Zapier

- Add Apify action

- Select Run Actor

- Choose RAG Pipeline Data Collector

- Configure trigger and input

- Connect to vector database action

Python SDK
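A minimal sketch of calling the Actor from Python, assuming the official `apify-client` package, whose `ApifyClient.actor(...).call(run_input=...)` starts a run and waits for it to finish and whose `dataset(...).iterate_items()` reads the results. The Actor ID and the `run_input` keys below are placeholders, since this README doesn't state the Actor's exact ID or input schema. The client is passed in as a parameter so the function can be exercised without network access.

```python
from typing import Any

# Placeholder: replace with the Actor's real "username/actor-name" ID from its Apify page.
ACTOR_ID = "<username>/<actor-name>"


def run_collector(client: Any, run_input: dict, actor_id: str = ACTOR_ID) -> list[dict]:
    """Start the Actor, wait for the run to finish, and return its dataset items.

    `client` is expected to be an apify_client.ApifyClient instance.
    """
    run = client.actor(actor_id).call(run_input=run_input)
    if run is None:
        raise RuntimeError("the Actor run did not return run details")
    return list(client.dataset(run["defaultDatasetId"]).iterate_items())


# Usage (requires `pip install apify-client` and an Apify API token):
#
#     from apify_client import ApifyClient
#
#     client = ApifyClient("<YOUR_APIFY_TOKEN>")
#     # Hypothetical input keys; check the Actor's input schema.
#     items = run_collector(client, {"url": "https://example.com", "remove_noise": True})
#     for item in items:
#         print(item.get("url"))
```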

JavaScript/Node.js

⚙️ Configuration Tips

For Best RAG Results

- ✅ Enable `remove_noise` for cleaner content

- ✅ Use `markdown` output format

- ✅ Include metadata for context

- ✅ Set appropriate `max_pages` based on your needs

For Faster Scraping

- ⚡ Use `sitemap` strategy when available

- ⚡ Limit `max_depth` to 1-2 for deep crawl

- ⚡ Process in batches of 50-100 pages

- ⚡ Use 4096 MB memory allocation

For Cost Optimization

- 💰 Use single mode for small jobs

- 💰 Batch requests in multi-page mode

- 💰 Set reasonable `max_pages` limits

- 💰 Monitor compute unit usage

🐛 Troubleshooting

Sitemap Not Found

If sitemap strategy fails, the Actor automatically falls back to deep crawl.

JavaScript-Heavy Sites

Some sites may require additional wait time. The Actor handles this automatically with Playwright.

Rate Limiting

The Actor respects robots.txt and includes configurable delays between requests.
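The general technique behind that behavior can be sketched with Python's standard `urllib.robotparser`: check each URL against robots.txt before fetching, and space requests by the site's `Crawl-delay` (or a fallback delay when none is given). `PoliteGate`, the user-agent string, and the 1-second default are hypothetical choices for this illustration; the Actor's own delay settings aren't documented here.

```python
import time
from urllib.robotparser import RobotFileParser


class PoliteGate:
    """Answer "may I fetch this URL?" and enforce a minimum gap between requests."""

    def __init__(self, robots_txt: str, user_agent: str, default_delay: float = 1.0) -> None:
        self.user_agent = user_agent
        self.rules = RobotFileParser()
        self.rules.parse(robots_txt.splitlines())
        # crawl_delay() returns None when robots.txt sets no Crawl-delay for this agent;
        # robotparser only recognises whole-number Crawl-delay values.
        crawl_delay = self.rules.crawl_delay(user_agent)
        self.delay = float(crawl_delay) if crawl_delay is not None else default_delay
        self._last_request = None

    def allowed(self, url: str) -> bool:
        return self.rules.can_fetch(self.user_agent, url)

    def wait(self) -> None:
        """Sleep just long enough to keep `self.delay` seconds between requests."""
        if self._last_request is not None:
            remaining = self._last_request + self.delay - time.monotonic()
            if remaining > 0:
                time.sleep(remaining)
        self._last_request = time.monotonic()


if __name__ == "__main__":
    robots = "User-agent: *\nCrawl-delay: 2\nDisallow: /private/\n"
    gate = PoliteGate(robots, "ExampleBot/1.0")
    print(gate.delay)                                     # 2.0
    print(gate.allowed("https://example.com/docs"))       # True
    print(gate.allowed("https://example.com/private/x"))  # False
```

A crawler would call `allowed()` before queuing a URL and `wait()` immediately before each request to the same host.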

Missing Content

If content is missing, try:

- Disable noise removal temporarily

- Use `raw_html` format to inspect

- Increase timeout settings

📚 Documentation & Support

- GitHub Issues: [Report bugs or request features]

- Apify Discord: Join our community for support

- Documentation: Full API documentation

🏷️ Tags

web-scraping rag llm ai machine-learning vector-database langchain chatbot knowledge-base content-extraction markdown automation n8n zapier make

📄 License

This Actor is provided as-is for use on the Apify platform. Web scraping should be done responsibly and in accordance with website terms of service.

🤝 Ethical Scraping

This Actor:

- ✅ Respects `robots.txt`

- ✅ Only extracts publicly available content

- ✅ Does not extract personal data

- ✅ Includes configurable rate limiting

- ✅ Identifies itself properly in requests

Always ensure you have the right to scrape content from target websites and respect their terms of service.

Built with ❤️ using Crawl4AI

Need custom features or enterprise support? Contact us through the Apify platform!