Airtable Lead Enricher

Pricing

$30.00 / 1,000 enriched leads

Stop manual lead research. This actor enriches your Airtable leads with contact data and AI scores, then updates your base.

Developer: DataHQ

Fill missing lead data automatically: email, phone, and AI scoring. Works with Airtable, standalone, or inside any workflow.

What It Does

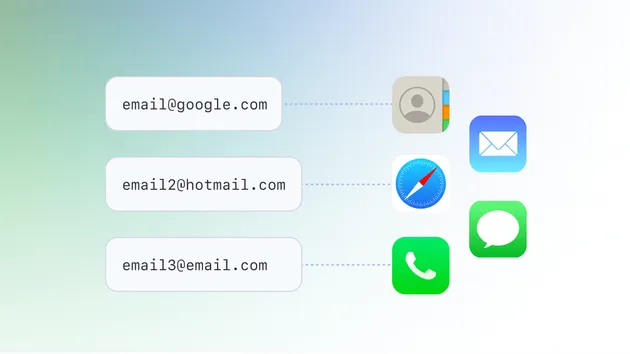

Reads leads from Airtable → Finds missing data → Writes back.

| Before | After |
|---|---|
| Acme Corp | Acme Corp, contact@acme.com, +1 555 0100, Lead Score: Good, ICP Score: Excellent |

Key Stats:

- ⚡ 3-5 seconds per lead enrichment

- 💰 $0.03 per lead (vs competitors: $0.15-$0.36)

- 📊 85%+ success rate for contact info

- 🎯 AI-powered scoring with 7 quality labels

- 🔄 3 processing modes: Airtable batch, single record, or standalone API

- 📈 Up to 1000 companies per run in API mode

Features

- Contact Info - Email, phone

- Verified Emails - Hunter.io

- Social Links - LinkedIn, Facebook, Twitter

- AI Scoring - 0-100 score with quality labels (Excellent, Good, Fair, Poor, Bad)

- Batch Processing - Up to 1000 companies per run in API mode (100 in the Airtable modes)

- API Mode - No Airtable needed

Quick Start

1. Get Airtable Token

airtable.com/create/tokens → Create token → Copy

2. Get IDs

- Base ID: `airtable.com/appXXXX` → `appXXXX`

- Table ID: Table menu → Copy table ID → `tblXXXX`

3. Run
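A run needs the three values collected above. The sketch below assembles them into an input object and checks their prefixes first. The key names `mode`, `airtable`, `apiKey`, `baseId`, and `tableId` are assumptions inferred from names mentioned elsewhere in this README, not a confirmed schema, so compare them with the Actor's input form before relying on them.

```python
def build_batch_input(token: str, base_id: str, table_id: str) -> dict:
    """Assemble a batch-mode input (key names are assumed, not confirmed)."""
    # Prefix checks mirror the formats shown in the steps above.
    checks = {
        "token": (token, "pat"),
        "base ID": (base_id, "app"),
        "table ID": (table_id, "tbl"),
    }
    for label, (value, prefix) in checks.items():
        if not value.startswith(prefix):
            raise ValueError(f"{label} should start with '{prefix}', got {value!r}")
    return {
        "mode": "batch",
        "airtable": {"apiKey": token, "baseId": base_id, "tableId": table_id},
    }


# Placeholder values; substitute your own token and IDs.
run_input = build_batch_input("patEXAMPLE", "appXXXX", "tblXXXX")
```

Catching a wrong prefix before the run starts is cheaper than discovering it through an "Invalid API key" error afterwards.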

Pricing

$0.03 per lead (vs Clearbit $0.36, Apollo $0.15)

| Leads | Cost |
|---|---|
| 100 | $3 |
| 1,000 | $30 |
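For other volumes, the arithmetic behind the table can be sketched as below. The function name is illustrative, and the figure covers only the Actor's per-lead price; Hunter.io and LLM provider charges are billed by those providers.

```python
PRICE_CENTS_PER_LEAD = 3  # $0.03 per enriched lead, from the pricing above


def enrichment_cost_usd(leads: int) -> float:
    """Return the Actor charge in dollars for a number of enriched leads."""
    if leads < 0:
        raise ValueError("leads must be non-negative")
    # Multiply in whole cents first, then convert to dollars once.
    return leads * PRICE_CENTS_PER_LEAD / 100


print(enrichment_cost_usd(100))   # 3.0
print(enrichment_cost_usd(1000))  # 30.0
```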

Data Sources

| Source | Data | API Key? |
|---|---|---|
| Website | Email, phone, tech | No |
| Google Maps | Phone, address, rating | No |
| Hunter.io | Verified email, socials | Yes |
| AI | Score, ICP match | Yes |

Configuration

Basic

With AI

With Hunter.io

Field Mapping

Map your Airtable columns to internal fields.

Input Fields (What to Read)

Internal → Your Column Name (case-sensitive)

Output Fields (What to Write)

If not specified, uses default names: email, phone, leadScore, etc.

Available Output Fields:

- Contact: `email`, `phone`, `address`

- Google Maps: `rating`, `reviewCount`, `category`

- Company: `description`, `techStack`, `industry`, `employeeCount`, `foundingYear`, `companyStage`

- Social: `linkedinUrl`, `facebookUrl`, `twitterHandle`

- AI: `leadScore`, `icpScore`, `summary`, `targetCustomers`, `valueProposition`, `keyProducts`, `outreachAngles`
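The output mapping described above amounts to a rename with a fallback to the default names. A minimal sketch of that behavior follows; the function name and the column names in the example are hypothetical, and the Actor's own implementation may differ.

```python
from typing import Optional


def to_airtable_fields(enriched: dict, output_mapping: Optional[dict] = None) -> dict:
    """Rename internal field names to Airtable column names.

    Fields absent from the mapping keep their default (internal) name.
    """
    mapping = output_mapping or {}
    return {mapping.get(name, name): value for name, value in enriched.items()}


record = {"email": "contact@acme.com", "phone": "+1 555 0100", "leadScore": "Good"}
# Hypothetical column names; Airtable column names are case-sensitive.
columns = to_airtable_fields(record, {"email": "Work Email", "leadScore": "Lead Quality"})
print(columns)
# {'Work Email': 'contact@acme.com', 'phone': '+1 555 0100', 'Lead Quality': 'Good'}
```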

Modes

| Mode | Use Case | Needs Airtable? | Max Records |
|---|---|---|---|
| batch | Scheduled Airtable sync | ✅ Yes | 100 |
| single | Specific record IDs | ✅ Yes | 100 |
| api | Standalone (no Airtable) | ❌ No | 1000 |

API Mode (No Airtable Required)

Perfect for integrations, workflows, and pipelines.

No airtable.apiKey, baseId, or tableId needed. Just provide companies.
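As a sketch of what an API-mode call might look like from code, the snippet below builds (without sending) a request to Apify's synchronous run endpoint using only the standard library. The Actor ID and token are placeholders, and the input keys `mode`, `name`, and `website` are assumptions; only `companies` is named in this README.

```python
import json
import urllib.request

API_BASE = "https://api.apify.com/v2"


def build_api_mode_request(apify_token: str, actor_id: str, companies: list) -> urllib.request.Request:
    """Build (but do not send) a synchronous run request for API mode."""
    if not 1 <= len(companies) <= 1000:
        raise ValueError("API mode accepts between 1 and 1000 companies per run")
    # 'mode' and the per-company keys are assumed names; no Airtable settings are included.
    body = json.dumps({"mode": "api", "companies": companies}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/acts/{actor_id}/run-sync-get-dataset-items",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {apify_token}",
            "Content-Type": "application/json",
        },
    )


request = build_api_mode_request(
    "apify_api_EXAMPLE",    # placeholder token
    "USERNAME~ACTOR-NAME",  # placeholder Actor ID
    [{"name": "Acme Corp", "website": "https://acme.com"}],
)
# Sending is left to the caller, for example:
# with urllib.request.urlopen(request) as response:
#     enriched = json.load(response)
```

The synchronous endpoint suits small batches. Longer runs are better started asynchronously and read from the run's dataset once they finish.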

LLM Providers

Supports OpenAI, Anthropic, and AWS Bedrock for AI-powered lead scoring.

Tested models: Claude 3 Haiku, GPT-4o, and GPT-3.5 Turbo all work well.

Get API keys:

Examples & Use Cases

Example 1: Enrich 100 Leads in Airtable

Scenario: You have a table with company names. You want to fill in missing emails, phones, and AI scores.

Input:

Result: All 100 records get enriched with contact info and AI-generated scores. Takes ~5-8 minutes.

Example 2: Qualify Leads with ICP Scoring

Scenario: You want to score leads against your ideal customer profile to prioritize outreach.

Input:

Output:
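Once records carry `icpScore` and `leadScore` labels, ordering them for outreach is a small sorting step. The sketch below assumes each record is a dict keyed by the default output field names; the company names are sample data.

```python
# Label order from best to worst, as listed under Features.
LABEL_RANK = {"Excellent": 0, "Good": 1, "Fair": 2, "Poor": 3, "Bad": 4}


def prioritize(records: list) -> list:
    """Sort records by icpScore, then leadScore; unknown or missing labels go last."""
    def rank(record, field):
        return LABEL_RANK.get(record.get(field), len(LABEL_RANK))

    return sorted(records, key=lambda r: (rank(r, "icpScore"), rank(r, "leadScore")))


leads = [
    {"name": "Beta LLC", "icpScore": "Fair", "leadScore": "Good"},
    {"name": "Acme Corp", "icpScore": "Excellent", "leadScore": "Good"},
    {"name": "Gamma Inc", "icpScore": None, "leadScore": "Excellent"},
]
print([lead["name"] for lead in prioritize(leads)])
# ['Acme Corp', 'Beta LLC', 'Gamma Inc']
```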

Example 3: Daily Scheduled Enrichment

Scenario: Automatically enrich new leads every day at 2 AM.

Setup:

- In Apify Console → Schedules → Create new schedule

- Cron: `0 2 * * *`

- Input:

Result: Only processes records with Status="New" and no enrichedAt timestamp. Runs automatically daily.
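The filter described in the result above can be written as an Airtable formula. The sketch below builds one and pairs it with the cron expression. The `schedule` dict is only an illustration of the two settings, not the Apify schedule schema, and `mode` is an assumed key name.

```python
def new_unenriched_formula(status_field: str = "Status", stamp_field: str = "enrichedAt") -> str:
    """Airtable formula matching records marked New that have no enrichment timestamp."""
    return f'AND({{{status_field}}}="New", NOT({{{stamp_field}}}))'


schedule = {
    "cron": "0 2 * * *",  # every day at 02:00
    "input": {"mode": "batch", "filterFormula": new_unenriched_formula()},
}
print(schedule["input"]["filterFormula"])
# AND({Status}="New", NOT({enrichedAt}))
```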

Example 4: Lambda Function Integration (Python)

Scenario: Enrich leads from your application backend via AWS Lambda.

Deployment:

Example 5: Webhook Integration with Make.com/Zapier

Scenario: Get enriched data pushed to your CRM automatically.

Setup:

- Create webhook endpoint (Make.com/Zapier/n8n)

- Configure Actor input:

- Webhook receives:

- Make.com automation:

  - Trigger: Webhook received

  - Action 1: Parse JSON

  - Action 2: Update Salesforce/HubSpot record

  - Action 3: Send Slack notification for "Excellent" leads

Example 6: Airflow DAG for ETL Pipeline

Scenario: Enrich leads as part of your nightly data pipeline.

Example 7: Batch Process 1000 Companies (API Mode)

Scenario: You have a CSV with 1000 companies to enrich. No Airtable needed.

Input:

Processing time: ~25-40 minutes for 1000 companies (parallel processing)

Output: Dataset with 1000 enriched records. Download as JSON/CSV from Apify Console.
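The input for this scenario could be assembled from the CSV along the following lines. The column names `name` and `website`, and the per-company keys built from them, are assumptions about the file and the input schema; only `companies` is named in this README.

```python
import csv
import io

MAX_COMPANIES = 1000  # API-mode limit from the Modes table


def companies_from_csv(text: str) -> list:
    """Parse CSV text with 'name' and 'website' columns into a companies list."""
    companies = []
    for row in csv.DictReader(io.StringIO(text)):
        name = (row.get("name") or "").strip()
        if not name:
            continue  # skip rows without a company name
        company = {"name": name}
        website = (row.get("website") or "").strip()
        if website:
            company["website"] = website
        companies.append(company)
    if len(companies) > MAX_COMPANIES:
        raise ValueError(f"{len(companies)} companies exceeds the {MAX_COMPANIES}-per-run limit")
    return companies


sample = "name,website\nAcme Corp,https://acme.com\nBeta LLC,\n"
run_input = {"mode": "api", "companies": companies_from_csv(sample)}
print(run_input["companies"])
# [{'name': 'Acme Corp', 'website': 'https://acme.com'}, {'name': 'Beta LLC'}]
```

For a file on disk, pass its contents with `open(path, newline="", encoding="utf-8").read()`.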

Example 8: Hunter.io Integration for Verified Emails

Scenario: Get verified professional emails and social profiles.

Input:

Output:

Integration Examples

More examples in examples/ directory:

- `lambda_python.py` - AWS Lambda (Python)

- `lambda_node.js` - AWS Lambda (Node.js)

- `gcp_function_python.py` - GCP Cloud Functions

- `glue_job.py` - AWS Glue ETL

- `ecs_task_definition.json` - AWS ECS/Fargate

- `docker_run.sh` - Docker (EC2, anywhere)

- `github_actions.yml` - GitHub Actions

- `airflow_dag.py` - Apache Airflow

CRM Integrations

Salesforce, HubSpot, Pipedrive, Zoho

Use webhooks or automation platforms:

- Webhook: Set `webhookUrl` to receive enriched data

- Zapier/Make: Trigger on Airtable update or schedule

- Direct API: Call from your CRM's automation (see examples above)

Output Fields

| Field | Source |
|---|---|
| email | Website/Hunter |
| phone | Maps/Website |
| linkedinUrl | Hunter |
| leadScore | AI (Excellent/Good/Fair/Poor/Bad) |
| icpScore | AI (Excellent/Good/Fair/Poor/Bad) |
| summary | AI |
| techStack | Website |

Scheduling

Run daily at 2 AM:

Cron: 0 2 * * *

Best Practices & Tips

🎯 Optimize for Speed

Fastest configuration (website only, no AI):

Speed: ~2 seconds per lead

Balanced configuration (website + Google Maps):

Speed: ~3-4 seconds per lead

Full enrichment (all sources + AI):

Speed: ~8-12 seconds per lead (includes AI analysis)

💰 Optimize for Cost

Tips to reduce costs:

- Use API mode instead of Airtable mode when you don't need live sync

- Disable AI scoring when you only need contact info (saves LLM API costs)

- Start with website-only enrichment, then add Hunter.io/AI if needed

- Use filterFormula to avoid re-processing enriched records:

"filterFormula": "NOT({enrichedAt})"

- Batch strategically - Process 100-200 records per run instead of one-by-one

Cost breakdown:

- Website scraping: Free

- Google Maps: Free

- Hunter.io: $0.005 per request (their pricing)

- OpenAI GPT-4o: ~$0.01 per lead

- Anthropic Claude 3 Haiku: ~$0.001 per lead (recommended)

🔍 Improve Data Quality

1. Always provide website URLs

2. Use ICP criteria for better scoring

More specific = more accurate scores.

3. Enable multiple sources

More sources = more complete data.

4. Handle updates correctly

Options:

- `append` (default) - Only fill empty fields, preserve existing data

- `overwrite` - Replace all fields with new data

- `skip` - Skip record entirely if any field has data
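To make the difference between the three options concrete, here is a minimal sketch of the merge semantics as described. It is an interpretation, not the Actor's code: in particular, `skip` is read here as "skip if any field that would be written already holds data", and empty means `None` or an empty string.

```python
def apply_update(existing: dict, enriched: dict, mode: str = "append") -> dict:
    """Merge enriched values into an existing record according to the update mode."""
    def is_empty(value):
        return value is None or value == ""

    if mode == "overwrite":
        return {**existing, **enriched}
    if mode == "skip":
        # Leave the record untouched if any target field already holds data.
        if any(not is_empty(existing.get(field)) for field in enriched):
            return dict(existing)
        return {**existing, **enriched}
    if mode == "append":
        merged = dict(existing)
        for field, value in enriched.items():
            if is_empty(merged.get(field)):
                merged[field] = value
        return merged
    raise ValueError(f"unknown update mode: {mode!r}")


current = {"name": "Acme Corp", "email": "old@acme.com", "phone": ""}
found = {"email": "contact@acme.com", "phone": "+1 555 0100"}
print(apply_update(current, found, "append"))
# {'name': 'Acme Corp', 'email': 'old@acme.com', 'phone': '+1 555 0100'}
```

With the same inputs, `overwrite` would replace the email as well, and `skip` would return the record unchanged because `email` already has a value.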

⚡ Common Patterns

Pattern 1: New Lead Qualification

Pattern 2: Re-enrich Old Leads

Pattern 3: Enrich Only High-Value Segments

Pattern 4: Webhook-Driven Real-Time Enrichment

Performance Guide

Expected Processing Times

| Records | Mode | Config | Time |
|---|---|---|---|
| 1 | API | Website only | ~2-3 sec |
| 1 | API | Website + Maps | ~4-5 sec |
| 1 | API | Full (Website + Maps + Hunter + AI) | ~10-15 sec |
| 10 | Batch | Website only | ~30-45 sec |
| 100 | Batch | Website + Maps | ~5-8 min |
| 100 | Batch | Full enrichment | ~15-25 min |
| 1000 | API | Website only | ~25-40 min |

Factors affecting speed:

- Number of sources enabled

- AI/LLM enabled (adds 3-5 sec per lead)

- Concurrency setting (1-20, default: 10)

- Website response time (some sites are slower)

- Network latency

Memory usage:

- Base: 512 MB

- Recommended: 2048 MB (default)

- For 1000+ records with AI: 4096 MB

Troubleshooting

| Problem | Fix |
|---|---|
| Invalid API key | Use PAT (starts with `pat`) |
| Unknown field | Check case-sensitive names |
| No records | Check filter/view |
| No social links | Enable Hunter.io |
| Timeout errors | Reduce concurrency or increase timeout |
| Out of memory | Increase memory to 4096 MB in Actor settings |
| Low success rate | Ensure website URLs are provided |
| Empty AI scores | Check LLM API key is valid |

Common Issues:

Q: Why are some fields empty? A: Not all data is available for every company. Results depend on:

- Website structure (some sites hide contact info)

- Google Maps presence

- Hunter.io database coverage

Q: How to handle rate limits? A: Reduce concurrency:
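One way to step the setting down is sketched below. The input key `concurrency` is an assumed name; the 1-20 range and the default of 10 come from the Performance Guide.

```python
def lower_concurrency(run_input: dict, factor: int = 2) -> dict:
    """Return a copy of the input with concurrency divided by `factor`, kept within 1-20."""
    current = run_input.get("concurrency", 10)  # documented default is 10
    reduced = max(1, min(20, current // factor))
    return {**run_input, "concurrency": reduced}


print(lower_concurrency({"mode": "batch"})["concurrency"])                    # 5
print(lower_concurrency({"mode": "batch", "concurrency": 1})["concurrency"])  # 1
```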

Q: Why is AI scoring not working? A: Check:

- LLM provider API key is valid

- `llm.enabled: true` and `scoring.enabled: true`

- Provider supports your region (OpenAI, Anthropic widely available)

Links

Lead enrichment made simple.