1import { Actor } from 'apify';

2import { ApifyClient } from 'apify-client';

3import escapeStringRegexp from 'escape-string-regexp';

4

5await Actor.init();

6

7const apifyClient = new ApifyClient();

8

9const { search } = await Actor.getInput();

10

11

12let actors = [];

13const fetchNextChunk = async (offset = 0) => {

14 const limit = 1000;

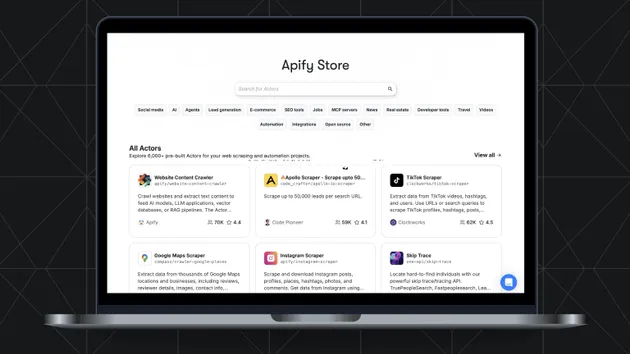

15 const value = await apifyClient.store({ search }).list({ offset, limit });

16

17 if (value.count === 0) {

18 return;

19 }

20

21 actors.push(...value.items);

22

23 if (value.total > offset + value.count) {

24 return fetchNextChunk(offset + value.count);

25 }

26};

27await fetchNextChunk();

28

29const usernames = {};

30

31

32const results = [];

33for (const actor of actors) {

34 results.push({

35 id: actor.id,

36 url: `https://apify.com/${actor.username}/${actor.name}`,

37 title: actor.title,

38 titleWithUrl: `[(${actor.title})](https://apify.com/${actor.username}/${actor.name})`,

39 pictureUrl: actor.pictureUrl,

40 description: actor.description,

41 categories: actor.categories,

42 authorPictureUrl: actor.userPictureUrl,

43 authorFullName: actor.userFullName,

44 usersTotal: actor.stats.totalUsers,

45 usersMonthly: actor.stats.totalUsers30Days,

46 pricingModel: actor.currentPricingInfo.pricingModel,

47 });

48

49 if (actor.stats.totalUsers > 35) {

50 usernames[actor.username] = true;

51 }

52}

53

54

55let regexAll = `^https:\\/\\/apify\\.com\\/(${Object.keys(usernames).map(key => escapeStringRegexp(key)).join('|')})(\\/|$)`;

56

57await Actor.setValue('gsc_regex_all_user_generated_content.txt', regexAll, { contentType: 'text/plain' });

58

59await Actor.pushData(results);

60

61await Actor.exit();
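The two non-obvious parts of the script, offset-based paging over the Store listing and the username alternation regex, can be exercised without the Apify platform. The sketch below is a hypothetical, dependency-free rewrite, not part of the Apify SDK or client: `collectAllPages` accepts any `listPage({ offset, limit })` function that resolves to `{ items, count, total }` (the fields the script reads from `list()`), `escapeForRegex` is a simplified stand-in for the `escape-string-regexp` package rather than its exact implementation, and the fake data source exists only for illustration.

```javascript
// Hypothetical helpers mirroring the script's logic; names are illustrative only.

// Iterative equivalent of fetchNextChunk: request pages of `limit` items and
// advance `offset` by the number of items actually returned, stopping on an
// empty page or once the reported total has been reached.
async function collectAllPages(listPage, limit = 1000) {
    const all = [];
    let offset = 0;
    while (true) {
        const page = await listPage({ offset, limit });
        if (page.count === 0) break;
        all.push(...page.items);
        offset += page.count;
        if (offset >= page.total) break;
    }
    return all;
}

// Simplified stand-in for escape-string-regexp: backslash-escape regex metacharacters.
function escapeForRegex(text) {
    return text.replace(/[|\\{}()[\]^$+*?.]/g, '\\$&');
}

// Same pattern shape as the script: an apify.com URL whose first path segment
// is exactly one of the given usernames.
function buildProfileRegex(usernames) {
    const alternation = usernames.map(escapeForRegex).join('|');
    return `^https:\\/\\/apify\\.com\\/(${alternation})(\\/|$)`;
}

// Fake paginated source with 2500 items, served in slices like the Store API.
const fakeItems = Array.from({ length: 2500 }, (_, i) => ({ id: i }));
const fakeListPage = async ({ offset, limit }) => {
    const items = fakeItems.slice(offset, offset + limit);
    return { items, count: items.length, total: fakeItems.length };
};

collectAllPages(fakeListPage).then((items) => {
    console.log(items.length); // 2500, fetched as pages of 1000, 1000 and 500
});

const pattern = new RegExp(buildProfileRegex(['alice', 'bob.dev']));
console.log(pattern.test('https://apify.com/alice'));          // true
console.log(pattern.test('https://apify.com/alice/my-actor')); // true
console.log(pattern.test('https://apify.com/alicebob'));       // false
console.log(pattern.test('https://apify.com/bobXdev'));        // false: the dot is escaped
```

Advancing `offset` by `count` rather than by `limit`, as the script does, avoids skipping items if a page comes back shorter than requested. Note also that with an empty username list the alternation group is empty, so the pattern would match only the bare `https://apify.com/` root.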