Justjoin Jobs Details Scraper

Pricing

$20.00/month + usage

Efficiently scrape detailed job listings from JustJoin.it, Poland's leading IT job board. Extract comprehensive data including salaries, tech stacks, company details, and remote work options. Perfect for market research, salary analysis, and recruitment intelligence in the Polish tech industry.

Developer

Stealth mode

Last modified: 3 months ago

JustJoin.it Jobs Details Scraper: Extract Complete Polish IT Job Market Data

Understanding JustJoin.it and Why This Data Matters

JustJoin.it has established itself as one of Poland's premier job boards specifically focused on the technology sector. Unlike general job platforms, JustJoin.it caters exclusively to IT professionals, startups, and tech companies, making it an invaluable resource for understanding the Polish and Central European tech job market.

The platform's significance extends beyond simple job listings. It provides a comprehensive view of the tech ecosystem, including salary ranges, required skill sets, company cultures, and emerging technology trends. For recruiters, market researchers, and business analysts, this data represents a goldmine of insights into hiring patterns, compensation trends, and skill demand in one of Europe's fastest-growing tech markets.

However, manually collecting this information from hundreds or thousands of job postings would be incredibly time-consuming and impractical. This is where the JustJoin.it Jobs Details Scraper becomes essential, transforming what would be weeks of manual work into an automated process that delivers structured, analysis-ready data.

What This Scraper Does and Who Benefits

The JustJoin.it Jobs Details Scraper extracts comprehensive information from individual job posting pages on JustJoin.it. Rather than collecting just basic information, this tool captures the complete dataset that JustJoin.it provides for each position, so each record reflects everything shown on the listing.

The scraper captures both standard job information and JustJoin.it-specific features. This includes detailed technical requirements, multiple employment type options (offering several contract types for one role is common in Poland), workplace flexibility indicators, and company branding information. The tool follows the platform's structure while delivering clean, organized data that's immediately usable for analysis or integration into your systems.

This scraper serves multiple professional audiences effectively. Recruitment agencies can use it to build comprehensive job databases and identify hiring trends. Market researchers gain insights into salary ranges, in-demand skills, and geographic distribution of tech jobs. Companies planning to enter the Polish market can analyze competition, typical compensation packages, and required skill sets. Data analysts and business intelligence teams can track market movements, emerging technologies, and hiring velocity across the tech sector.

Understanding Input Requirements and Configuration

The scraper accepts job detail page URLs from JustJoin.it. These are the specific pages that display complete information about individual job postings, not the search results or category pages.

Here's a practical example of properly formatted input:

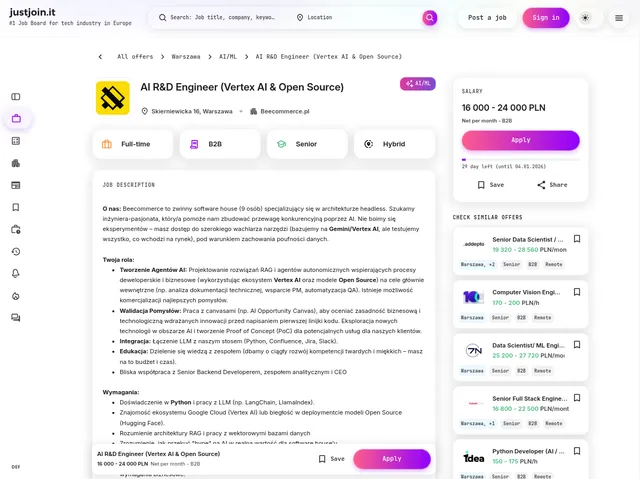

Example Screenshot:

The proxy configuration is particularly important for reliable data collection. Using residential proxies helps ensure that your scraping activity appears as normal user behavior, reducing the likelihood of being blocked. While you can choose different proxy countries, selecting one that aligns with your target market (Poland or nearby European countries) often yields better results and faster response times.

You can include multiple URLs in the array, allowing you to scrape dozens or hundreds of job postings in a single run. The scraper processes each URL sequentially, extracting the complete dataset from each job posting page.
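As a rough illustration of the input described above, the sketch below builds a run input from a list of URLs and drops anything that does not look like a job detail page. The top-level key names `urls` and `proxy`, and the `/job-offer/` path prefix, are assumptions rather than the scraper's documented schema, so check the actor's input tab for the real field names. The inner proxy keys follow the usual Apify proxy input convention.

```python
from urllib.parse import urlparse

# Assumption: individual job postings live under this path prefix.
JOB_DETAIL_PREFIX = "/job-offer/"


def is_job_detail_url(url: str) -> bool:
    """Return True if the URL looks like a single JustJoin.it job posting."""
    parsed = urlparse(url)
    return (
        parsed.scheme in ("http", "https")
        and parsed.netloc.lower() in ("justjoin.it", "www.justjoin.it")
        and parsed.path.startswith(JOB_DETAIL_PREFIX)
        and len(parsed.path) > len(JOB_DETAIL_PREFIX)
    )


def build_input(urls, proxy_country="PL"):
    """Build a run input, keeping only URLs that look like job detail pages."""
    return {
        "urls": [u for u in urls if is_job_detail_url(u)],  # hypothetical key name
        "proxy": {  # hypothetical key name
            "useApifyProxy": True,
            "apifyProxyGroups": ["RESIDENTIAL"],
            "apifyProxyCountry": proxy_country,
        },
    }
```

Filtering up front avoids spending a run on search results or category pages that the scraper is not built to parse.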

Comprehensive Output Structure and Data Fields Explained

The scraper returns data in JSON format, with each job posting represented as a complete object containing all available information. Understanding what each field represents and how you might use it is crucial for maximizing the value of this data.

Basic Job Information Fields:

The Slug serves as a unique identifier for each job posting, derived from the URL structure. This field is invaluable when tracking specific positions over time or building relational databases. The Title contains the exact job position name as it appears on the listing, which is essential for categorization and search functionality.

Experience Level indicates the seniority expected for the position (junior, mid, senior, or expert), helping you segment opportunities by career stage. The Category field identifies the technical domain (such as AI, backend, frontend, or DevOps), which is critical for matching candidates to appropriate roles or analyzing demand by specialization.

Company and Location Information:

Company Name and Company URL provide direct references to the hiring organization, enabling you to build employer profiles or track which companies are actively hiring. The Body field contains the full job description text, including responsibilities, requirements, and benefits. This rich text data can be analyzed for keywords, sentiment, or used to train machine learning models.

Location data is particularly detailed. City and Street provide the physical address, while Latitude and Longitude offer precise geocoding for mapping applications. This geographic data enables sophisticated analyses like identifying tech hubs, calculating commute distances, or visualizing job density across regions.
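To make the commute-distance idea concrete, here is a minimal sketch of the haversine great-circle distance between two coordinate pairs. It assumes the Latitude and Longitude values can be converted with `float()`; whether the scraper returns them as numbers or strings is not stated here.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two points given in degrees."""
    lat1, lon1, lat2, lon2 = (float(v) for v in (lat1, lon1, lat2, lon2))
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (
        math.sin(d_phi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))
```

This gives straight-line distance only; real commute times depend on roads and transit, so treat it as a first filter rather than a travel estimate.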

Company Characteristics:

Company Size categorizes the employer by number of employees, which is valuable for candidates who prefer startups versus established corporations, or for market segmentation. The Company Logo URL provides branding assets, useful when displaying job listings or building company profiles.

Legal and Compliance Fields:

Information Clause, Future Consent, and Custom Consent capture the data-processing notice and consent wording that employers attach to the posting, typically to meet GDPR requirements. Keep these fields alongside the listing if you process or redistribute the data, since European data protection regulations apply to it.

Employment and Work Arrangements:

Employment Types is particularly significant in the Polish market, which commonly offers multiple contract types (employment contract, B2B contract, contract of mandate). This array field shows all available options for each position. Workplace Type indicates whether the role is remote, office-based, or hybrid, reflecting the post-pandemic shift in work arrangements.

Required Skills and Nice To Have Skills are arrays containing the technical competencies needed. These fields support skill trend analysis: counting how often each technology appears shows which are in demand, and grouping those counts by seniority level or specialization shows how requirements vary.
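A skill-demand count over scraped records might look like the sketch below. The key name `requiredSkills` is an assumption about the output schema, as is the idea that each entry is either a plain string or an object with a `name` key. Adjust both to match the actual dataset.

```python
from collections import Counter


def count_skills(jobs, key="requiredSkills"):
    """Count how many postings mention each skill, ignoring case and padding."""
    counts = Counter()
    for job in jobs:
        for skill in job.get(key) or []:
            # Assumption: entries are strings or dicts carrying a "name" key.
            name = skill.get("name", "") if isinstance(skill, dict) else skill
            normalized = str(name).strip().lower()
            if normalized:
                counts[normalized] += 1
    return counts
```

Calling `count_skills(jobs).most_common(20)` then lists the twenty most requested technologies in the sample.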

Working Time specifies full-time or part-time status, while Remote Interview indicates whether the hiring process can be conducted remotely, which has become increasingly important for international candidates.

Application and Timing Information:

Apply URL provides the direct link for candidates to submit applications. Published At and Last Published At timestamps enable tracking of when positions were posted and updated, useful for measuring time-to-fill metrics or identifying stale listings.

Expired At indicates when the job posting will be removed, helping you understand recruitment timelines. Is Offer Active provides a boolean flag showing current availability status.
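The timing fields can be turned into a listing duration with a few lines of date arithmetic. This sketch assumes the timestamps are ISO 8601 strings stored under the hypothetical keys `publishedAt` and `expiredAt`; the real key names and formats may differ.

```python
from datetime import datetime, timezone


def parse_timestamp(value):
    """Parse an ISO 8601 string, treating a trailing 'Z' or a missing offset as UTC."""
    parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    return parsed if parsed.tzinfo else parsed.replace(tzinfo=timezone.utc)


def listing_days(job, now=None):
    """Days from publication to expiry, or to `now` when no expiry is set."""
    published = parse_timestamp(job["publishedAt"])  # hypothetical key
    if job.get("expiredAt"):  # hypothetical key
        end = parse_timestamp(job["expiredAt"])
    else:
        end = now or datetime.now(timezone.utc)
    return (end - published).total_seconds() / 86400
```

Listing duration is only a proxy for time-to-fill, since a posting can expire without the role being filled.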

Internationalization and Branding:

Open To Hire Ukrainians reflects the significant Ukrainian tech talent migration to Poland, showing which companies are specifically welcoming to this demographic. Multilocation indicates if the position can be filled in multiple cities, important for flexible candidates.

The Languages array lists the required language proficiencies, which matters in a market where English proficiency varies. Country Code confirms the job's geographic market.

Brand Story fields (Brand Story Slug, Brand Story Cover Photo URL, Brand Story Short Description) capture company culture and employer branding content that JustJoin.it provides, helping candidates understand company values beyond the job requirements.

Media Assets:

Cover Image, Video URL, and Banner URL provide rich media associated with the posting, which companies use to showcase their workplace culture, technology, or projects.

Technical Identifiers:

GUID provides a globally unique identifier for integration with external systems, while Offer Parent indicates if this posting is part of a larger hiring campaign.

Here's an example of how this data appears:

Step-by-Step Guide to Using the Scraper

Begin by creating an Apify account if you haven't already. Navigate to the JustJoin.it Jobs Details Scraper in the Apify Store. Before starting your first scrape, take time to identify the specific job postings you want to extract. You can browse JustJoin.it manually or use their search functionality to find relevant positions, then copy the URLs of individual job detail pages.

Configure your input JSON with the collected URLs and appropriate proxy settings. For most use cases, residential proxies with a Polish or European country code provide the best results. If you're scraping a large number of URLs, consider breaking them into smaller batches to monitor progress and catch any issues early.
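Splitting a long URL list into batches is straightforward. The helper below is a generic sketch; the default batch size of 50 is an arbitrary illustration, not a limit imposed by the scraper.

```python
def batches(urls, size=50):
    """Yield consecutive slices of `urls`, each with at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(urls), size):
        yield urls[start:start + size]
```

Each batch can then be submitted as its own run, so a failure affects only that batch.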

Start the scraper and monitor the run through the Apify console. The execution time will vary based on the number of URLs and current platform load, but typically processes 50-100 URLs within 10-15 minutes. Once complete, preview the results in the dataset tab to ensure data quality.
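Runs can also be started from code instead of the console. The sketch below takes a client object that is assumed to behave like `ApifyClient` from the `apify-client` Python package, using its `actor(...).call(run_input=...)` and `dataset(...).iterate_items()` methods. The actor ID and token in the usage comment are placeholders.

```python
def run_scraper(client, actor_id, run_input):
    """Start the actor, wait for it to finish, and return its dataset items."""
    run = client.actor(actor_id).call(run_input=run_input)
    if run is None:
        raise RuntimeError("Actor run did not return a result")
    dataset = client.dataset(run["defaultDatasetId"])
    return list(dataset.iterate_items())


# Usage (requires `pip install apify-client`; values below are placeholders):
# from apify_client import ApifyClient
# client = ApifyClient("<YOUR_APIFY_TOKEN>")
# items = run_scraper(client, "<username>/<actor-name>", run_input)
```

Passing the client in as an argument keeps the function easy to test with a stub and leaves token handling to the caller.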

Download your data in your preferred format. JSON is ideal for programmatic processing and database imports, while CSV works well for Excel analysis or quick reviews. If you plan to run regular scrapes, consider setting up scheduled runs to automatically collect fresh job postings daily or weekly.

For handling errors, the scraper includes built-in retry logic for temporary failures. If specific URLs consistently fail, verify that they're correctly formatted job detail pages and not search results or company profile pages. The activity log provides detailed information about any issues encountered during scraping.

Practical Applications and Business Value

The comprehensive nature of this dataset enables numerous valuable applications across different business contexts. Recruitment agencies can build proprietary job databases that update automatically, giving them faster access to opportunities than competitors relying on manual searches. By analyzing salary ranges across similar positions, recruiters can provide data-driven compensation advice to both clients and candidates.

Market researchers gain detailed visibility into the Polish tech sector's dynamics. Tracking which technologies appear most frequently in job requirements can reveal emerging trends before they are widely reported. Geographic analysis shows where tech talent is clustering, informing decisions about office locations or remote work policies.

Companies planning market entry or expansion can conduct thorough competitive intelligence. By analyzing what skills competitors are hiring for and at what salary ranges, businesses can benchmark their own compensation packages and identify talent gaps in their organizations. The employment type data is particularly valuable for understanding local labor market practices that differ significantly from other countries.

Data science teams can build predictive models using this structured data. Time series analysis of job posting volumes can indicate economic trends in specific tech sectors. Natural language processing on job descriptions can identify subtle shifts in role expectations or company culture emphasis. The geographic coordinates enable spatial analysis to understand urban tech ecosystems.

For salary benchmarking, the salary ranges listed inside the Employment Types field provide concrete market data. Unlike survey-based salary data, these are ranges that employers actually advertise, which gives a more direct view of current compensation levels. Cross-referencing salary with required skills, experience level, and company size enables more detailed compensation modeling.
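A simple benchmark is the median of range midpoints per contract type. The sketch assumes each entry in a hypothetical `employmentTypes` array carries `type`, `currency`, `from`, and `to` keys; these names are illustrative and should be checked against real output.

```python
from collections import defaultdict
from statistics import median


def median_salary_by_contract(jobs):
    """Median of salary-range midpoints, grouped by (contract type, currency)."""
    midpoints = defaultdict(list)
    for job in jobs:
        for entry in job.get("employmentTypes") or []:  # hypothetical key
            low, high = entry.get("from"), entry.get("to")
            if low is None or high is None:
                continue  # skip offers that do not disclose a range
            group = (entry.get("type"), entry.get("currency"))
            midpoints[group].append((low + high) / 2)
    return {group: median(values) for group, values in midpoints.items()}
```

Grouping by currency as well as contract type avoids mixing figures that are not directly comparable.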

Maximizing Value and Ensuring Sustainable Use

To extract maximum value from this scraper, establish a regular collection schedule rather than one-off scrapes. The job market changes continuously, and consistent data collection enables trend analysis and historical comparisons. Scraping weekly or every two weeks captures most new postings while avoiding unnecessary duplication.

Consider enriching the scraped data with additional sources. Combine JustJoin.it data with information from LinkedIn, company websites, or other job boards to build comprehensive employer profiles. Cross-referencing multiple sources also helps validate salary information and identify discrepancies.

Implement data quality checks in your processing pipeline. Verify that required fields are present, salary ranges are reasonable, and geographic coordinates are valid. Set up alerts for anomalies that might indicate scraping errors or platform changes.
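The checks described above could be sketched as a function that returns a list of problems per record. All key names (`slug`, `title`, `companyName`, `latitude`, `longitude`, `employmentTypes`, `from`, `to`) are assumptions about the output schema.

```python
REQUIRED_FIELDS = ("slug", "title", "companyName")  # hypothetical key names


def validate_job(job):
    """Return a list of human-readable problems found in one scraped record."""
    problems = [f"missing {name}" for name in REQUIRED_FIELDS if not job.get(name)]

    lat, lon = job.get("latitude"), job.get("longitude")
    if lat is not None and lon is not None:
        try:
            if not (-90 <= float(lat) <= 90 and -180 <= float(lon) <= 180):
                problems.append("coordinates out of range")
        except (TypeError, ValueError):
            problems.append("coordinates not numeric")

    for entry in job.get("employmentTypes") or []:
        low, high = entry.get("from"), entry.get("to")
        if low is not None and high is not None and (low < 0 or low > high):
            problems.append("salary range invalid")
    return problems
```

A sudden rise in the share of records with problems is a useful signal that the page structure has changed.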

Respect the platform by implementing reasonable rate limiting and using appropriate proxy configurations. While the scraper handles technical aspects of polite scraping, avoid overwhelming the platform with excessive simultaneous requests. This sustainable approach ensures long-term access to this valuable data source.

Store historical data systematically to enable longitudinal analysis. Track how individual job postings change over time, when they expire, and how quickly they're filled. This temporal data provides insights into hiring urgency, market competitiveness, and seasonal hiring patterns.
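One way to keep history is to store every scrape as a timestamped snapshot keyed by slug. The sketch uses SQLite from the Python standard library; the table layout and the `slug` key name are illustrative assumptions.

```python
import json
import sqlite3
from datetime import datetime, timezone


def save_snapshot(conn, jobs, scraped_at=None):
    """Store one row per job per scrape so changes can be compared later."""
    scraped_at = scraped_at or datetime.now(timezone.utc).isoformat()
    conn.execute(
        "CREATE TABLE IF NOT EXISTS snapshots ("
        "slug TEXT NOT NULL, scraped_at TEXT NOT NULL, payload TEXT NOT NULL, "
        "PRIMARY KEY (slug, scraped_at))"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO snapshots VALUES (?, ?, ?)",
        [(job["slug"], scraped_at, json.dumps(job, sort_keys=True)) for job in jobs],
    )
    conn.commit()


def history(conn, slug):
    """Return (scraped_at, record) pairs for one posting, oldest first."""
    rows = conn.execute(
        "SELECT scraped_at, payload FROM snapshots WHERE slug = ? ORDER BY scraped_at",
        (slug,),
    )
    return [(scraped_at, json.loads(payload)) for scraped_at, payload in rows]
```

Comparing consecutive entries from `history` shows when a salary range, status flag, or description changed.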

Conclusion

The JustJoin.it Jobs Details Scraper transforms one of Poland's premier tech job platforms into a structured, analyzable dataset. Whether you're conducting market research, supporting recruitment operations, or building competitive intelligence, this tool provides the comprehensive data you need to make informed decisions in the dynamic Polish and Central European tech market. Start extracting insights today and gain the data advantage your business needs.