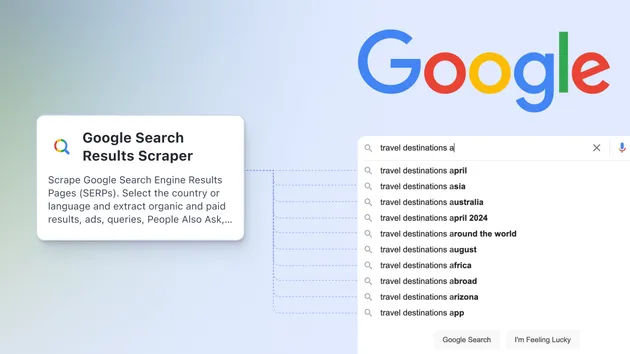

Google People Also Ask Scraper

Pricing

$10.00/month + usage

Scrapes the questions that appear in the `People also ask` section of a Google search results page for a given keyword, down to a maximum depth you choose.

Rating: 0.0 (0)

Developer: Iqbal R

Actor stats

- Bookmarked: 5

- Total users: 99

- Monthly active users: 4

- Issues response: 4.6 days

- Last modified: 3 months ago

Features

- Keyword Search: Allows users to specify a search keyword as input. The Actor performs a Google search with the given keyword and retrieves related questions.

- Language Support: Supports search results in different languages. The language can be specified via the Actor input, with the default being English.

- Depth Control: Lets you set the maximum depth of search result interactions, which controls how many questions are scraped.

Input Schema

- keyword (required): The search keyword to scrape questions for.

- max_depth: Maximum depth to scrape to. Defaults to 10.

- language: Language for the search results. Defaults to en (English).
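
As a rough illustration of the input fields above, the following Python sketch assembles an input object and applies the defaults this page mentions (10 for max_depth, en for language). The `ScraperInput` class and the `build_input` helper are hypothetical names, not part of the Actor, and the check that max_depth is at least 1 is an assumption, since the page does not state a minimum.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ScraperInput:
    """Hypothetical container mirroring the three documented input fields."""

    keyword: str
    max_depth: int = 10   # default taken from this page
    language: str = "en"  # default taken from this page

    def __post_init__(self):
        # keyword is the only field marked as required.
        if not self.keyword.strip():
            raise ValueError("keyword is required")
        # Assumption: a depth below 1 is not meaningful.
        if self.max_depth < 1:
            raise ValueError("max_depth must be at least 1")


def build_input(keyword, max_depth=10, language="en"):
    """Return the Actor input as a plain dict, ready to serialize as JSON."""
    return asdict(ScraperInput(keyword, max_depth, language))


if __name__ == "__main__":
    import json

    print(json.dumps(build_input("how to brew coffee", max_depth=3), indent=2))
```

The resulting dict can be pasted into the JSON input editor or sent as the request body when starting a run through the API.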

Dataset Schema

- Questions: List of questions from the "People also Ask" section.
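
The exact shape of a dataset item is not shown on this page. Assuming each item carries its questions under a `Questions` key, either as a list or as a single string, a small Python sketch for merging items into one de-duplicated list could look like this. `collect_questions` is a hypothetical helper, not something the Actor provides.

```python
from __future__ import annotations

from typing import Iterable


def collect_questions(items: Iterable[dict]) -> list[str]:
    """Merge the "Questions" field of every item into one ordered, unique list."""
    seen = set()
    result = []
    for item in items:
        value = item.get("Questions") or []
        # Assumption: the field holds a list of strings or one string.
        questions = [value] if isinstance(value, str) else value
        for question in questions:
            if not isinstance(question, str):
                continue  # skip anything that is not text
            text = question.strip()
            key = text.casefold()  # compare case-insensitively
            if text and key not in seen:
                seen.add(key)
                result.append(text)
    return result


if __name__ == "__main__":
    sample = [
        {"Questions": ["What is cold brew?", "Is cold brew stronger?"]},
        {"Questions": "what is cold brew?"},
    ]
    print(collect_questions(sample))
```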

How to Use

- Create an Apify Account

  - If you don't already have one, sign up for a free account at Apify.

- Access the Google People Also Ask Scraper

  - Navigate to the Google People Also Ask Scraper on the Apify platform. You can search for this actor directly in the Apify marketplace or use the direct link to open it in your Apify account.

- Configure Input Fields

  - Keyword: Enter the keyword for which you want to scrape related "People Also Ask" questions.

  - Maximum Depth: Define the maximum depth to which the scraper will crawl. The default value is 10, but you can adjust it based on how many levels of questions you want to retrieve.

  - Language: Choose the language of the search results from the dropdown list. The default is en (English), but you can select another language based on your needs.

- Run the Scraper on Apify Web Platform

  - After configuring the inputs, click the Run button on the Apify actor page.

  - Apify will start the scraping process, and it may take a few moments depending on the keyword and depth of the scrape.

- Access the Scraped Data

  - Once the scraping is complete, the results will be saved to your Apify dataset.

  - You can view the dataset by navigating to the Datasets tab in the Apify console, where you'll find a table of the scraped questions.

  - The questions will be shown under the "Questions" column.

- Export or Use the Data

  - You can download the dataset in various formats (JSON, CSV) directly from the Apify web interface.

  - Alternatively, you can use the Apify API to programmatically access the data for further use.

- Modify the Parameters (Optional)

  - You can modify the input parameters (keyword, max_depth, language) anytime in the Apify console.

  - After modifying the inputs, re-run the actor to scrape new data based on your updated settings.

By following these steps, you can easily configure and run the Google People Also Ask Scraper on the Apify web platform to collect valuable questions related to your search terms.
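
For programmatic use, the same steps can be sketched against the Apify REST API using only the Python standard library. This is a minimal sketch under stated assumptions: the Actor's API identifier is not given on this page, so `ACTOR_ID` is a placeholder in the `username~actor-name` form, the token is your own Apify API token, and `build_run_request` and `run_scraper` are hypothetical helper names. It uses the API's `run-sync-get-dataset-items` endpoint, which runs an Actor and returns the dataset items in the response.

```python
import json
import urllib.parse
import urllib.request

API_BASE = "https://api.apify.com/v2"
# Placeholder: replace with the Actor's real ID or "username~actor-name".
ACTOR_ID = "<username>~<actor-name>"


def build_run_request(actor_id, token, keyword, max_depth=10, language="en"):
    """Build (but do not send) a POST request that runs the Actor synchronously."""
    path = urllib.parse.quote(actor_id, safe="~")
    url = f"{API_BASE}/acts/{path}/run-sync-get-dataset-items"
    # Field names and defaults follow the input schema described on this page.
    payload = {"keyword": keyword, "max_depth": max_depth, "language": language}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
    )


def run_scraper(actor_id, token, keyword, **options):
    """Send the request and return the parsed dataset items (needs network)."""
    request = build_run_request(actor_id, token, keyword, **options)
    with urllib.request.urlopen(request, timeout=300) as response:
        return json.load(response)


# Example call (not executed here, since it needs a real token and Actor ID):
# items = run_scraper(ACTOR_ID, "YOUR_APIFY_TOKEN", "how to brew coffee", max_depth=3)
```

Keeping request construction separate from sending makes it possible to inspect the URL and body before spending any usage credits.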

Conclusion

The Google People Also Ask Scraper on Apify provides an easy and efficient way to collect related "People Also Ask" questions from Google search results. By simply providing a keyword, specifying the maximum depth for scraping, and selecting the desired language, users can gather valuable insights from Google’s "People Also Ask" feature.

With Apify’s no-code platform, you don’t need to worry about setting up or managing infrastructure. Everything is handled for you, allowing you to focus on analyzing the data. Whether you're conducting market research, enhancing SEO efforts, or gathering insights for content creation, this scraper is a powerful tool to add to your toolkit.

After the scraping is complete, you can quickly access and export the data in multiple formats such as JSON or CSV. This allows for seamless integration into your workflow or further processing.
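
As an illustration of the export step, the Apify API serves dataset items in a chosen format through the `format` query parameter of its dataset items endpoint. The sketch below only builds the download URL. The dataset ID is whatever your run produced, `dataset_items_url` is a hypothetical helper name, and the function is deliberately limited to the two formats this page mentions.

```python
from urllib.parse import quote, urlencode

API_BASE = "https://api.apify.com/v2"
# Only the formats named on this page; the API itself offers more.
FORMATS = ("json", "csv")


def dataset_items_url(dataset_id, fmt="json"):
    """Return the URL for downloading a dataset's items in the given format."""
    if fmt not in FORMATS:
        raise ValueError(f"unsupported format: {fmt!r}")
    query = urlencode({"format": fmt})
    return f"{API_BASE}/datasets/{quote(dataset_id, safe='')}/items?{query}"


if __name__ == "__main__":
    # "abc123" is a made-up dataset ID used purely for demonstration.
    print(dataset_items_url("abc123", "csv"))
```

Datasets that are not public need your API token on the request, for example in an `Authorization: Bearer` header.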

If you need more customization or want to integrate the scraper into your own workflows, you can easily modify the input parameters and run the actor again to get updated results.