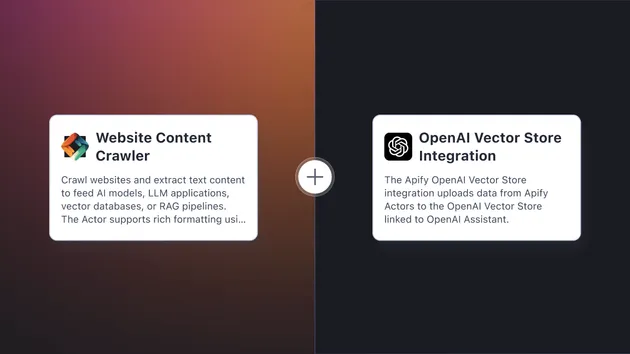

OpenAI Vector Store Integration

Pricing

Pay per usage

This integration uploads data from Apify Actors to the OpenAI Vector Store linked to an OpenAI Assistant.

Rating: 4.8 (5)

Developer: Jiří Spilka

Maintained by Apify

Actor stats

Bookmarked: 15

Total users: 215

Monthly active users: 14

Last modified: 2 months ago
