Turn your website into an AI chatbot

Automate content updates for customer support and beyond with Website Content Crawler. Convert your website, blog, or FAQ into a chatbot-ready format, and keep your data current with fresh web content, without worrying about scraping challenges or infrastructure.

Convert your website into usable data

Apify's Website Content Crawler transforms web content into Markdown files optimized for human readability and LLM processing. It removes unnecessary elements like headers, navigation bars, and cookie banners, leaving only the content that matters.
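As a rough illustration of this step, the sketch below starts a crawl and collects one Markdown record per page. It assumes the `apify-client` Python package is installed, that an API token is available in an `APIFY_TOKEN` environment variable, and that the Actor accepts the input fields `startUrls`, `maxCrawlPages`, and `saveMarkdown` and returns items with `url` and `markdown` keys. The helper names `build_run_input` and `crawl_to_markdown` are hypothetical, and the Actor's input schema should be checked before relying on these field names.

```python
import os


def build_run_input(start_url: str, max_pages: int = 50) -> dict:
    """Build the crawler input. Field names are assumptions, not confirmed by this page."""
    return {
        "startUrls": [{"url": start_url}],
        "maxCrawlPages": max_pages,
        "saveMarkdown": True,
    }


def crawl_to_markdown(start_url: str, max_pages: int = 50) -> list[dict]:
    """Run the crawler and return one {"url", "markdown"} record per crawled page."""
    # Imported lazily so build_run_input works even without the package installed.
    from apify_client import ApifyClient

    client = ApifyClient(os.environ["APIFY_TOKEN"])
    run = client.actor("apify/website-content-crawler").call(
        run_input=build_run_input(start_url, max_pages)
    )
    pages = []
    # Each dataset item is assumed to describe one crawled page.
    for item in client.dataset(run["defaultDatasetId"]).iterate_items():
        pages.append({"url": item.get("url"), "markdown": item.get("markdown") or ""})
    return pages
```

Calling `crawl_to_markdown("https://example.com")` would then return a list of page records that can be passed to a chunking or embedding step.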

Embed and store your data efficiently

Website Content Crawler integrates with tools like Pinecone and other vector databases to create and store embeddings. The Apify platform lets you automate regular scraping so your data stays accurate and up to date.

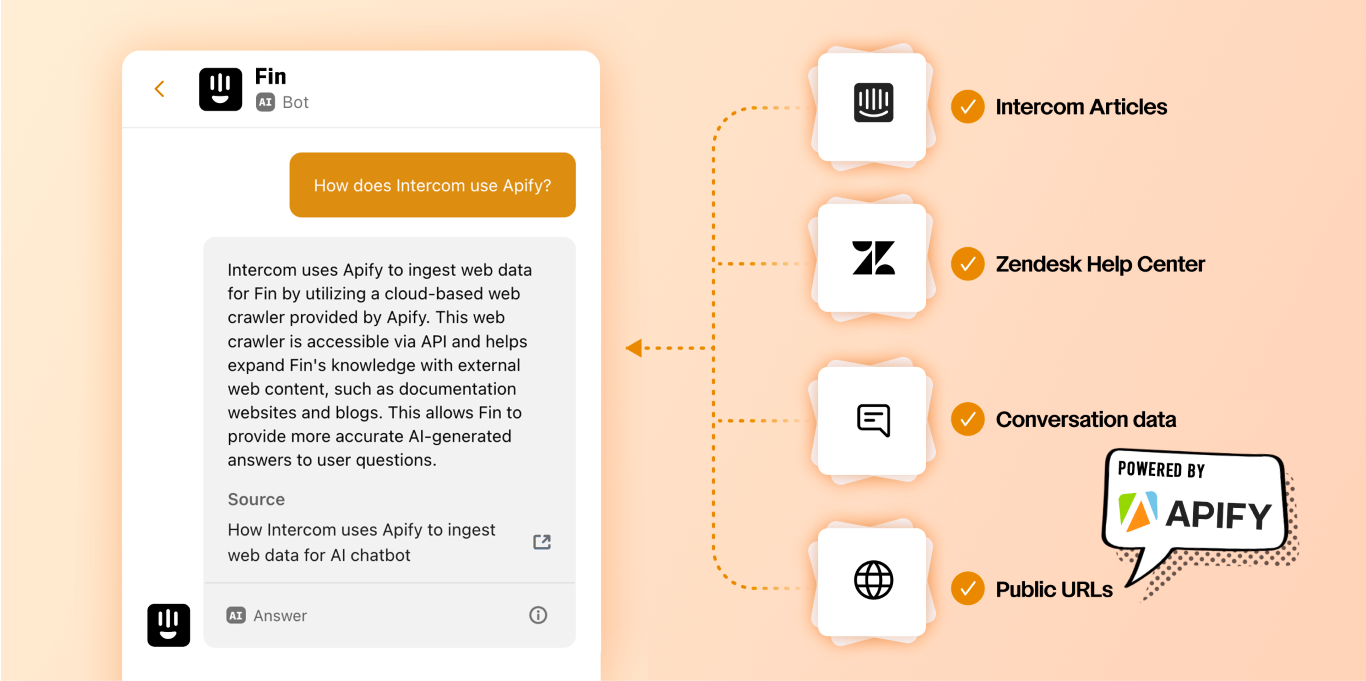
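Before text is embedded, it is usually split into smaller chunks. The following is a minimal sketch of that preparation step only, using the standard library. The function name `chunk_markdown`, the 800-character limit, and the record shape (`id`, `text`, `metadata`) are illustrative assumptions rather than anything the integration prescribes. Computing embeddings and writing them to a vector database are left out.

```python
import re


def chunk_markdown(url: str, markdown: str, max_chars: int = 800) -> list[dict]:
    """Group Markdown blocks into chunks of roughly max_chars characters."""
    # Split on blank lines so headings and paragraphs stay intact.
    blocks = [b.strip() for b in re.split(r"\n\s*\n", markdown) if b.strip()]
    chunks, current = [], ""
    for block in blocks:
        candidate = f"{current}\n\n{block}" if current else block
        if current and len(candidate) > max_chars:
            # Adding this block would overflow, so close the current chunk.
            chunks.append(current)
            current = block
        else:
            current = candidate
    if current:
        chunks.append(current)
    # Attach a stable id and the source URL so answers can cite their origin.
    return [
        {"id": f"{url}#chunk-{i}", "text": text, "metadata": {"url": url}}
        for i, text in enumerate(chunks)
    ]
```

A single block longer than `max_chars` is kept whole rather than cut mid-paragraph, which trades strict size limits for readable chunks.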

Integrate with RAG pipelines for smart solutions

Use the data in RAG pipelines to build customer support chatbots that answer questions directly from your site's content, agent Q&A systems that retrieve your data from vector databases, and up-to-date documentation hubs for developers working with specific libraries.

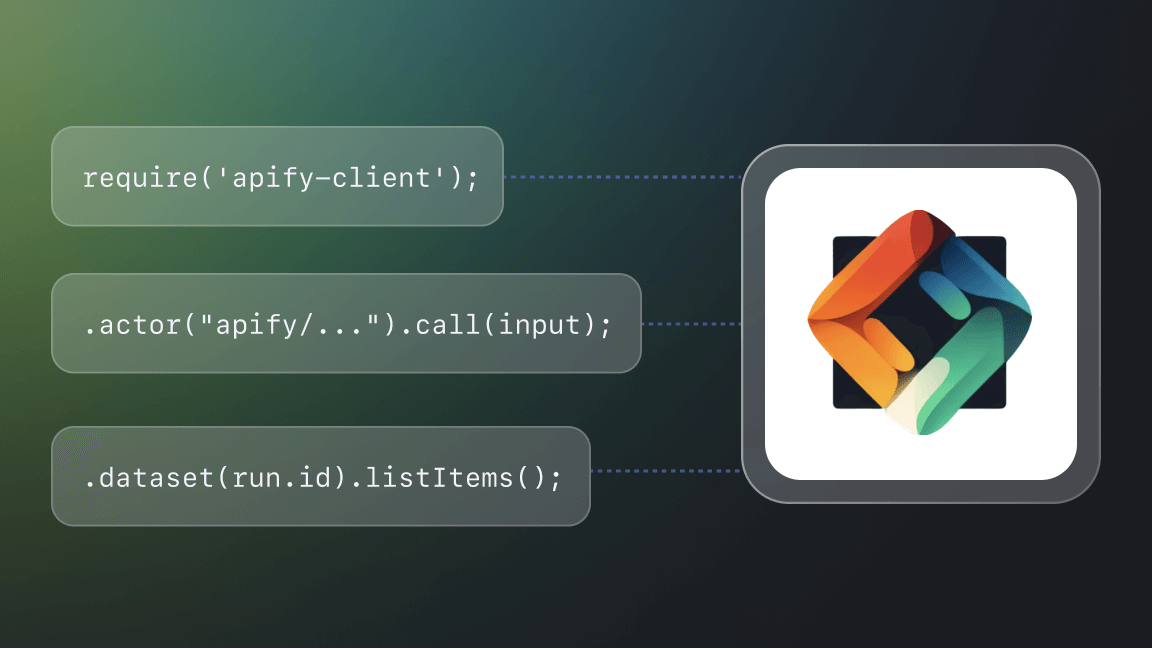
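To make the retrieval step of a RAG pipeline concrete, here is a toy sketch that ranks content chunks against a question and assembles a prompt. It deliberately swaps in word-count cosine similarity for real embeddings and an in-memory list for a vector database, so it runs with the standard library alone. The chunk shape (`url`, `text`) and the function names are assumptions made for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: list[dict], top_k: int = 2) -> list[dict]:
    """Return the top_k chunks most similar to the question."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, tokenize(c["text"])), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, chunks: list[dict]) -> str:
    """Assemble a grounded prompt that cites each chunk's source URL."""
    context = "\n\n".join(f"[{c['url']}]\n{c['text']}" for c in chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

In a production pipeline, `retrieve` would be replaced by a similarity query against the vector database, and the string from `build_prompt` would be sent to the LLM of your choice.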

Connect agents with Apify tools through MCP

Apify's MCP Server lets agents find, run, and fetch data from the right tool automatically. Agents can operate independently: scraping live data, reacting to real-world changes, and completing tasks without manual prompts.