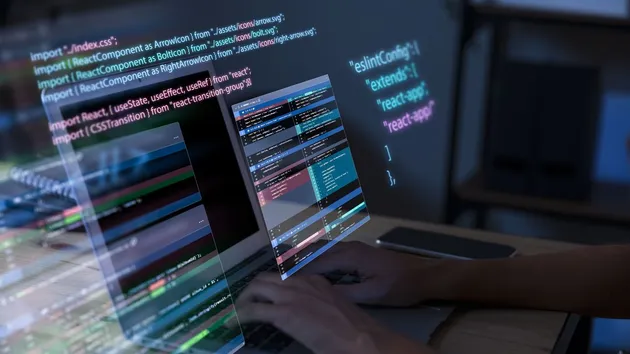

```javascript
const fs = require('fs');
const tmp = require('tmp');
const Apify = require('apify');

// Log the exit code whenever the process terminates. The process is already
// exiting with `code` here, so calling process.exit() again would be redundant.
process.on('exit', (code) => {
    console.log(`Exiting the process with code ${code}`);
});

(async function () {
    try {
        // Read the actor input (the algolia-webcrawler config) from the default key-value store.
        const input = await Apify.getValue('INPUT');
        console.log('Input fetched:');
        console.dir(input);

        // algolia-webcrawler needs at least these top-level fields.
        if (!input || !input.app || !input.cred || !input.index || !input.sitemaps) {
            console.error('The input must be a JSON object with the fields required by the algolia-webcrawler package (app, cred, index, sitemaps).');
            console.error('For details, see https://www.npmjs.com/package/algolia-webcrawler');
            process.exit(33);
        }

        // algolia-webcrawler reads its config from a file, so write the input to a temporary JSON file.
        const tmpobj = tmp.fileSync({ prefix: 'algolia-input-', postfix: '.json' });
        console.log(`Writing input JSON to file ${tmpobj.name}`);
        fs.writeFileSync(tmpobj.name, JSON.stringify(input, null, 2));

        // Emulate `node algolia-webcrawler --config <file>`: set the CLI arguments first,
        // then require the package, which is expected to parse process.argv and
        // start crawling as soon as it is loaded.
        console.log(`Emulating command: node algolia-webcrawler --config ${tmpobj.name}`);
        process.argv[2] = '--config';
        process.argv[3] = tmpobj.name;
        require('algolia-webcrawler');
    } catch (e) {
        console.error(e.stack || e);
        process.exit(34);
    }
})();
```
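The script's two preparation steps, checking the required fields and writing the config to a temporary JSON file, can also be expressed with Node built-ins only. The following is a minimal sketch under that assumption, not part of the original actor: the helper names `missingFields` and `writeConfigFile` are hypothetical, the field list is taken from the script's own check, and instead of the `tmp` package it creates a fresh directory with `fs.mkdtempSync` under `os.tmpdir()` (the sketch does not delete that directory afterwards). The example values are placeholders, not the real shape of algolia-webcrawler's config.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Top-level fields the actor checks for before handing the config to algolia-webcrawler.
const REQUIRED_FIELDS = ['app', 'cred', 'index', 'sitemaps'];

// Hypothetical helper: returns the names of required fields that are missing or falsy,
// mirroring the `!input.app || !input.cred || ...` check in the script.
function missingFields(input) {
    if (!input || typeof input !== 'object') return REQUIRED_FIELDS.slice();
    return REQUIRED_FIELDS.filter((field) => !input[field]);
}

// Hypothetical helper: writes the config as pretty-printed JSON into a new
// temporary directory (created with fs.mkdtempSync) and returns the file path.
// The directory is left in place; callers must clean it up if needed.
function writeConfigFile(input) {
    const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'algolia-input-'));
    const file = path.join(dir, 'config.json');
    fs.writeFileSync(file, JSON.stringify(input, null, 2));
    return file;
}

// Usage with placeholder values only.
console.log(missingFields({ app: 'demo' })); // [ 'cred', 'index', 'sitemaps' ]
const configPath = writeConfigFile({ app: 'demo', cred: 'placeholder', index: 'placeholder', sitemaps: ['placeholder'] });
console.log(`Config written to ${configPath}`);
```

Returning the list of missing fields rather than a boolean lets the error message name exactly what is absent from the input.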