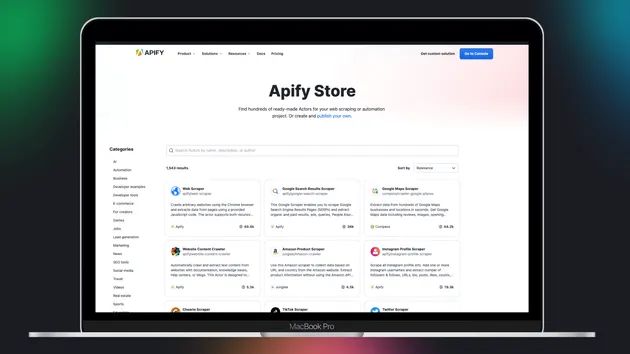

OpenAI Vector Store Integration

Pricing: Pay per usage

The Apify OpenAI Vector Store Integration uploads data from Apify Actors to an OpenAI Vector Store linked to an OpenAI Assistant.

Rating: 4.8 (5 ratings)

Total users: 176
Monthly users: 30
Runs succeeded: 90%
Issues response: 16 hours
Last modified: 4 months ago

My vector store ID is unique to each run

Status: Closed

Is there some way to pass the vector store ID into the run at the start?

Hi, thank you for using the OpenAI Vector Store Integration!

I'm not sure I fully understand your question, but I'll try to propose a use case.

If you need to scrape data from different websites (e.g., using Website Content Crawler), you can set up a task. In this task, you can connect Website Content Crawler (with a specific startURL) and OpenAI Vector Store Integration (with a specific vectorStoreId).

This way, you can update different vector stores with different data from the web.
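To make the task-per-vector-store setup concrete, here is a minimal Python sketch (standard library only) that starts the right saved task through the Apify API endpoint `POST /v2/actor-tasks/{taskId}/runs` and sends only `startUrls` in the body, so the rest of the input comes from the saved task. It assumes each task already has the OpenAI Vector Store Integration attached with its own vectorStoreId; the task names and the `TASKS_BY_VECTOR_STORE` mapping are hypothetical placeholders.

```python
import json
import urllib.request

APIFY_API = "https://api.apify.com/v2"

# Hypothetical mapping: one saved task per vector store. Each task is assumed to
# pair Website Content Crawler with the OpenAI Vector Store Integration
# (configured with that vectorStoreId). Replace with your own task IDs.
TASKS_BY_VECTOR_STORE = {
    "vs_products": "my-user~crawl-to-products-store",
    "vs_docs": "my-user~crawl-to-docs-store",
}


def build_task_run_request(vector_store_id, start_url, token):
    """Build (but do not send) the request that runs the task for one vector store."""
    task_id = TASKS_BY_VECTOR_STORE[vector_store_id]  # raises KeyError if no task exists
    # Only startUrls is sent; the remaining input comes from the saved task.
    body = json.dumps({"startUrls": [{"url": start_url}]}).encode("utf-8")
    return urllib.request.Request(
        f"{APIFY_API}/actor-tasks/{task_id}/runs",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
    )


def run_task(vector_store_id, start_url, token):
    """Send the request and return the run object from the API response."""
    request = build_task_run_request(vector_store_id, start_url, token)
    with urllib.request.urlopen(request) as response:
        return json.load(response)["data"]
```

For example, `run_task("vs_docs", "https://example.com", token)` would start the crawler through that task; once the crawler run succeeds, the integration attached to the task uploads the results to its configured vector store.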

Does this approach make sense? If you're working on a different use case, please let me know—I'd be happy to help!

Jiri

drippingfist

Hi Jiri

Thanks for your response! I start Website Content Crawler via an HTTPS request in which I set all the options for the run, including the startURL. When I try to add the OpenAI Vector Store Integration, it requires a vector store ID, and I don't want to enter it manually each time. I'm now implementing a workaround: I first run Website Content Crawler and save the datasetId and keyValueStoreId to a database; then, when the crawler has finished, I start the OpenAI Vector Store Integration with these values added to the debugging section of the request body.
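The two-step workaround can be sketched in Python against the Apify REST API using only the standard library. vectorStoreId, datasetId and keyValueStoreId are the fields discussed in this thread; the integration's Actor ID (`jiri.spilka~openai-vector-store-integration`) and the `openaiApiKey` field name are assumptions, so check them against the Actor's page and input schema. Waiting uses the `waitForFinish` parameter, which holds each request open for at most 60 seconds.

```python
import json
import urllib.request

APIFY_API = "https://api.apify.com/v2"
CRAWLER_ACTOR = "apify~website-content-crawler"
# Assumption: Store ID of the OpenAI Vector Store Integration ("/" replaced by "~").
VECTOR_STORE_ACTOR = "jiri.spilka~openai-vector-store-integration"
TERMINAL_STATUSES = {"SUCCEEDED", "FAILED", "TIMED-OUT", "ABORTED"}


def api_call(method, path, token, body=None):
    """Call the Apify API and return the "data" field of the JSON response."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    request = urllib.request.Request(
        f"{APIFY_API}{path}",
        data=data,
        method=method,
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["data"]


def build_vector_store_input(run, vector_store_id, openai_api_key):
    """Map a finished crawler run onto the integration's input."""
    return {
        "openaiApiKey": openai_api_key,  # assumed field name
        "vectorStoreId": vector_store_id,  # chosen per run by your own code
        "datasetId": run["defaultDatasetId"],
        "keyValueStoreId": run["defaultKeyValueStoreId"],
    }


def crawl_then_upload(start_url, vector_store_id, token, openai_api_key, call=api_call):
    # 1. Start Website Content Crawler with run-specific startUrls.
    run = call("POST", f"/acts/{CRAWLER_ACTOR}/runs", token, {"startUrls": [{"url": start_url}]})
    # 2. Wait until the run ends; each waitForFinish request blocks for at most 60 s.
    while run["status"] not in TERMINAL_STATUSES:
        run = call("GET", f"/actor-runs/{run['id']}?waitForFinish=60", token)
    if run["status"] != "SUCCEEDED":
        raise RuntimeError(f"Crawler run {run['id']} ended with status {run['status']}")
    # 3. Start the integration with this run's storages and the chosen vector store.
    return call(
        "POST",
        f"/acts/{VECTOR_STORE_ACTOR}/runs",
        token,
        build_vector_store_input(run, vector_store_id, openai_api_key),
    )
```

The `call` parameter exists so the flow can be exercised with a stub instead of live HTTP calls; in production you would leave it at the default.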

But is there a way to pass the vectorStoreId into the Website Content Crawler request so that the OpenAI Vector Store Integration can use it when it's triggered as an integrated component of my task?

Hope that makes sense.

Hi, thank you for the detailed explanation.

Unfortunately, it's not possible to pass the vectorStoreId directly into the Website Content Crawler request. This feature is not supported, and there are no plans to add support for it in the near future.

That said, there are a few alternative approaches you can consider:

- Separate calls – As you mentioned, you can first run Website Content Crawler, retrieve the datasetId, and then call OpenAI Vector Store with both datasetId and vectorStoreId. This works but somewhat undermines the goal of having seamless integrations.
- Using tasks – Another option is to create a Task that combines Website Content Crawler and OpenAI Vector Store with a specific vectorStoreId. If you have three unique vectorStoreIds, you would need to create a separate task for each one. You can then call the appropriate task with startURLs as usual, and it will save the data into the corresponding vectorStoreId. However, this approach is only feasible if the number of vector stores remains manageable.
- Using webhooks – A more advanced option is to leverage Webhooks, though this setup is even more complex.
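For the webhooks route, one possible shape is to attach an ad-hoc webhook (the `webhooks` query parameter, a base64-encoded JSON array) to each Website Content Crawler run and bake that run's vectorStoreId into the webhook's `payloadTemplate`. Apify replaces `{{resource}}` with the finished run object, so your endpoint receives the vectorStoreId together with the run's `defaultDatasetId` and `defaultKeyValueStoreId`, and can then start the integration with them. This is a sketch under assumptions: the handler URL is a hypothetical endpoint you host, and the `openaiApiKey` field name is assumed.

```python
import base64
import json
import urllib.parse

APIFY_API = "https://api.apify.com/v2"
CRAWLER_ACTOR = "apify~website-content-crawler"


def build_crawler_run_url(vector_store_id, handler_url):
    """URL that starts the crawler with an ad-hoc webhook carrying vectorStoreId."""
    # {{resource}} is filled in by Apify with the run object; vectorStoreId is a
    # literal baked into the template, so it travels with this run only.
    payload_template = (
        '{"vectorStoreId": ' + json.dumps(vector_store_id) + ', "resource": {{resource}}}'
    )
    webhooks = [{
        "eventTypes": ["ACTOR.RUN.SUCCEEDED"],
        "requestUrl": handler_url,  # hypothetical endpoint you host
        "payloadTemplate": payload_template,
    }]
    encoded = base64.b64encode(json.dumps(webhooks).encode("utf-8")).decode("ascii")
    query = urllib.parse.urlencode({"webhooks": encoded})
    return f"{APIFY_API}/acts/{CRAWLER_ACTOR}/runs?{query}"


def handle_webhook(payload, openai_api_key):
    """In your webhook handler: turn the delivered payload into the integration's input."""
    run = payload["resource"]
    return {
        "openaiApiKey": openai_api_key,  # assumed field name
        "vectorStoreId": payload["vectorStoreId"],
        "datasetId": run["defaultDatasetId"],
        "keyValueStoreId": run["defaultKeyValueStoreId"],
    }
```

You would POST the usual crawler input (for example `{"startUrls": [{"url": ...}]}`) to the returned URL; the handler then starts the OpenAI Vector Store Integration with the input built by `handle_webhook`.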

Unfortunately, I don't have an immediate out-of-the-box solution for this. If possible, I’d recommend trying the second approach first, or the first one if that works for your needs.

If you'd like, I can provide a code snippet for calling it. However, it seems like you already figured it out; let me know if you need any help.

Jiri

I'll go ahead and close this issue now. Please feel free to ask questions or raise a new issue.

Jiri