Pinecone GPT Chatbot

Pricing

Pay per usage

Pinecone GPT Chatbot combines OpenAI's GPT models with a Pinecone vector database. Its interactive chatbot interface answers user queries using the documents stored in your Pinecone index, which gives you semantic search, efficient workflows, and answers grounded in your own knowledge base.

Rating

4.9 (4)

Developer

Tri⟁angle

Actor stats

- Bookmarked: 9
- Total users: 77
- Monthly active users: 1
- Last modified: 2 months ago

Pinecone GPT Chatbot is an Apify Actor that connects OpenAI's GPT models to a Pinecone vector database. It runs an interactive chatbot application that answers your queries using the documents stored in your Pinecone index.

Why Use Pinecone GPT Chatbot?

- Seamless Integration: Pinecone GPT Chatbot connects OpenAI's GPT models with your Pinecone index, so the GPT model can draw on the latest information stored there.

- Rich Responses: Pinecone GPT Chatbot searches your Pinecone documents, combines the relevant knowledge, and presents the resulting insights.

- Efficient Workflows: Pair Pinecone GPT Chatbot with the WCC Pinecone Integration Actor to streamline your data pipeline. WCC Pinecone Integration gathers data with Website Content Crawler and stores the extracted data in the specified index of the Pinecone vector database. You can then query that index with Pinecone GPT Chatbot.

Usage

Input

The Actor takes the following input fields in JSON format:

| Input field | Description |
|---|---|
| 🔑 OpenAI API key | API key for connecting to OpenAI. Available at: https://platform.openai.com/api-keys |
| 🔑 Pinecone API key | API key for connecting to an existing Pinecone index. Available at: https://app.pinecone.io/ |
| 🔖 Pinecone index name | The name of the Pinecone index where the relevant vectors are stored. |
| 🤖 GPT model | GPT-4 Turbo or GPT-3.5 Turbo. |
| 🌡️ Temperature | The temperature for the GPT model, which controls the randomness of its output. Accepts values from 0 to 1. |
| 📊 Top K results | The number of top results to fetch from the Pinecone index and use as context for the GPT model. |

Example Input

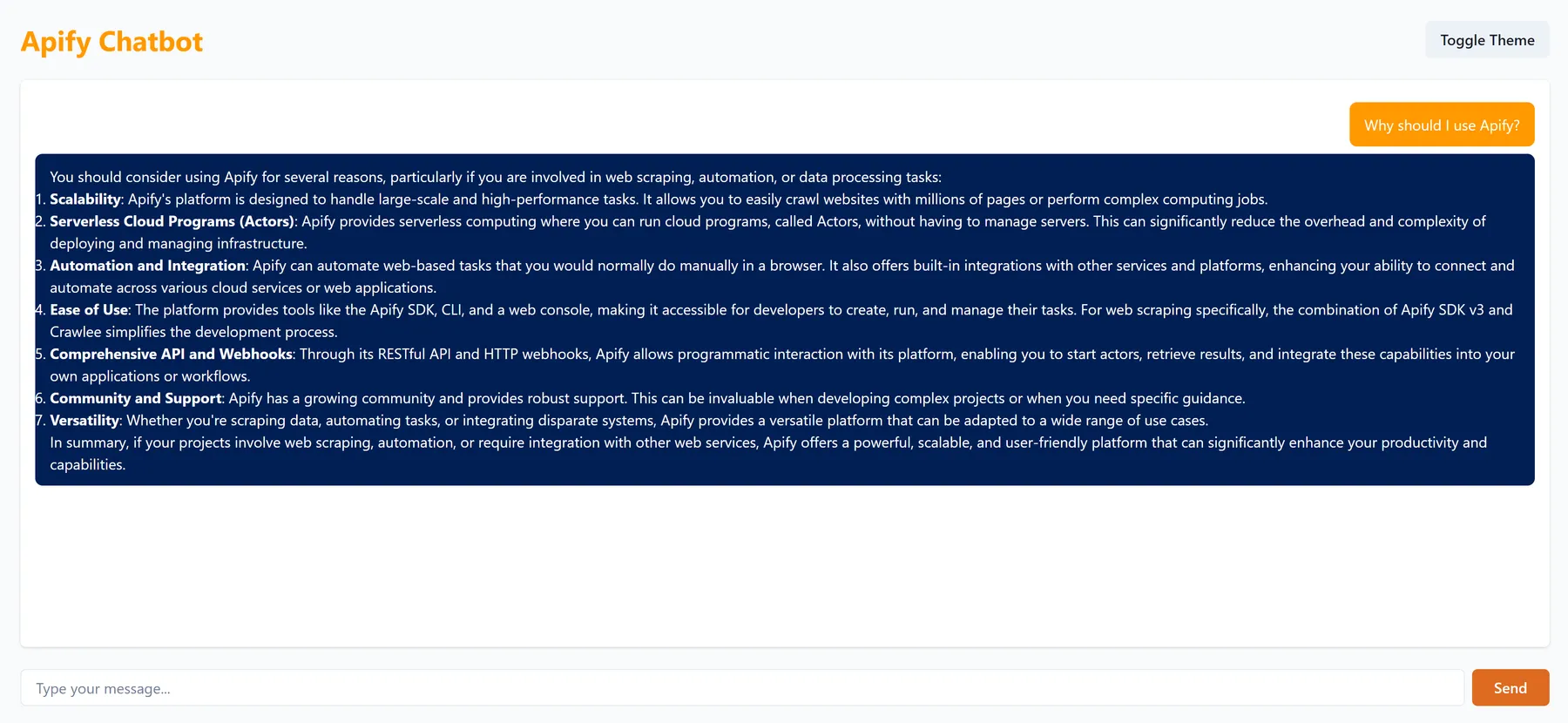
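For readers who prefer text to a screenshot, the input could be assembled roughly as follows. This is only a sketch: the key names (`openaiApiKey`, `pineconeApiKey`, `pineconeIndexName`, `model`, `temperature`, `topK`), the model identifier strings, and the default values are assumptions made for illustration, since the table above lists display labels only. Check the Actor's input schema for the real names.

```python
import json

# Hypothetical identifiers for the two models named in the input table.
ALLOWED_MODELS = {"gpt-4-turbo", "gpt-3.5-turbo"}


def build_input(openai_key, pinecone_key, index_name,
                model="gpt-3.5-turbo", temperature=0.0, top_k=5):
    """Check the documented constraints and return an input dict.

    All key names in the returned dict are assumed, not taken from
    the Actor's actual input schema.
    """
    if model not in ALLOWED_MODELS:
        raise ValueError(f"unsupported model: {model!r}")
    if not 0 <= temperature <= 1:
        raise ValueError("temperature must be between 0 and 1")
    if top_k < 1:
        raise ValueError("top_k must be at least 1")
    return {
        "openaiApiKey": openai_key,
        "pineconeApiKey": pinecone_key,
        "pineconeIndexName": index_name,
        "model": model,
        "temperature": temperature,
        "topK": top_k,
    }


if __name__ == "__main__":
    # Placeholder credentials; replace with your own keys and index name.
    actor_input = build_input("<OPENAI_API_KEY>", "<PINECONE_API_KEY>", "my-index")
    print(json.dumps(actor_input, indent=2))
```

Validating the temperature range and the Top K value before starting a run catches the two constraints that the table states explicitly.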

How It Works

- The Actor deploys an interactive chatbot application to a dedicated server. You can find the link to the application in the Actor's log, e.g.: `Chatbot application is running on https://mckifnthonjq.runs.apify.net`. You can then interact with the application by providing questions as prompts.

- The Actor connects to an existing Pinecone index using the provided Pinecone API key and index name.

- It retrieves relevant vectors from the Pinecone index based on your queries.

- The Actor integrates the vector data with the selected GPT model (GPT-4 Turbo or GPT-3.5 Turbo).

- The GPT model uses the conversation history and context vectors to generate responses to your queries.

- Conversation history, along with source documents used for answering questions, is stored in the Actor's dataset.
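The retrieval and prompting steps above can be illustrated with a small, self-contained sketch. It is not the Actor's implementation: an in-memory list of `(vector, document)` pairs stands in for the Pinecone index, cosine similarity stands in for Pinecone's own query, and the system prompt wording and the `pageContent` key are assumptions. Only the role/content message layout follows the OpenAI chat format.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k_documents(query_vector, index, k):
    """Return the k documents whose vectors are most similar to the query.

    `index` is a list of (vector, document) pairs, a toy stand-in for
    a Pinecone index; a real run would query Pinecone instead.
    """
    ranked = sorted(index, key=lambda pair: cosine(query_vector, pair[0]),
                    reverse=True)
    return [doc for _, doc in ranked[:k]]


def build_messages(history, documents, question):
    """Combine retrieved context, earlier turns, and the new question.

    `history` is a list of (question, answer) pairs from the current run.
    """
    context = "\n\n".join(doc["pageContent"] for doc in documents)
    messages = [{
        "role": "system",
        # Hypothetical prompt wording, for illustration only.
        "content": "Answer using only the context below.\n\n" + context,
    }]
    for past_question, past_answer in history:
        messages.append({"role": "user", "content": past_question})
        messages.append({"role": "assistant", "content": past_answer})
    messages.append({"role": "user", "content": question})
    return messages
```

In this sketch, a larger `k` (the Top K results input) passes more context to the model at the cost of a longer prompt, and starting with an empty `history` mirrors a new Actor run.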

NOTE: When the Actor run times out or is manually aborted, the chatbot application stops working. Each Actor run starts a new conversation with no context from the previous conversations.

Output

The Actor stores the conversation history in a dataset. It also includes the source documents that were used for answering your questions, so that you can easily verify the GPT model's answers. Each dataset item contains the following fields:

- question: Your query.
- answer: The generated response from the GPT model.
- sourceDocuments: An array of objects containing the relevant page content and the corresponding page URL.
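As a sketch of how these items might be used to check answers, the helper below collects the distinct source URLs cited for each question. The top-level field names come from the list above; the key `url` inside each source document is an assumption, since the exact shape of those objects is not documented here.

```python
def sources_by_question(items):
    """Map each question to the distinct source URLs cited for its answer.

    `items` is a list of dataset items (dicts). The `url` key inside
    each source document is a hypothetical name.
    """
    result = {}
    for item in items:
        urls = []
        for doc in item.get("sourceDocuments", []):
            url = doc.get("url")
            # Keep first-seen order and skip duplicates or missing URLs.
            if url and url not in urls:
                urls.append(url)
        result[item["question"]] = urls
    return result
```

Opening the listed URLs next to each answer is one simple way to confirm that the response is supported by the retrieved pages.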

Example Output