PostgreSQL Database Extractor

Pricing: Pay per event


Extract an entire PostgreSQL database into Apify storage using a connection string or individual parameters. Supports table filtering, flexible output formats, and automated export of all rows and metadata for seamless integration with other Actors or workflows.


Rating: 5.0 (1 rating)

Developer: ParseForge

Actor stats: 0 bookmarked, 3 total users, 1 monthly active user, last modified 14 days ago


🚀 Extract entire PostgreSQL databases into structured data formats with ease. Perfect for database migrations, backups, data analysis, and integration with other systems.

This tool connects to your PostgreSQL database and extracts all tables (or specific ones you choose) into Apify's storage system. You can export your data as JSON, CSV, Excel, or other formats for easy analysis and integration.

Target Audience: Database administrators, data analysts, developers, and businesses needing database extraction and migration tools

Primary Use Cases: Database backups, data migration, data analysis, system integration, data warehousing

What Does PostgreSQL Database Extractor Do?

This tool connects to your PostgreSQL database and extracts all tables and their data into Apify storage. It delivers:

- Complete table extraction - All tables from your database

- Flexible filtering - Choose specific tables or exclude certain ones

- Row limits - Control how many rows to extract per table

- Dataset storage - Each table is saved to its own dataset

- Connection flexibility - Use connection strings or individual parameters

- SSL support - Secure connections for cloud databases

- And more
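For cloud databases, PostgreSQL's libpq connection URIs request encryption through an `sslmode` query parameter (for example `sslmode=require`). As a minimal sketch, assuming you pass a URI-style connection string and that the Actor honors `sslmode` inside it (the input form's own SSL option may be the intended switch instead), the Python helper below sets that parameter using only the standard library. The host `db.example.com` is a placeholder.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode


def with_sslmode(conn_str: str, mode: str = "require") -> str:
    """Return conn_str with its sslmode query parameter set to `mode`.

    Assumes a URI-style string (postgresql://user:password@host:port/database).
    Any existing sslmode value is replaced; other query parameters are kept.
    """
    parts = urlsplit(conn_str)
    # Keep every existing parameter except sslmode, then append the new value.
    query = [(k, v) for k, v in parse_qsl(parts.query) if k != "sslmode"]
    query.append(("sslmode", mode))
    return urlunsplit(parts._replace(query=urlencode(query)))


print(with_sslmode("postgresql://user:password@db.example.com:5432/shop"))
# postgresql://user:password@db.example.com:5432/shop?sslmode=require
```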

Business Value: Quickly extract and export your entire database for backups, migrations, or analysis without writing custom scripts or using complex tools.

How to use the PostgreSQL Database Extractor - Full Demo


Watch this 3-minute demo to see how easy it is to get started!

Input

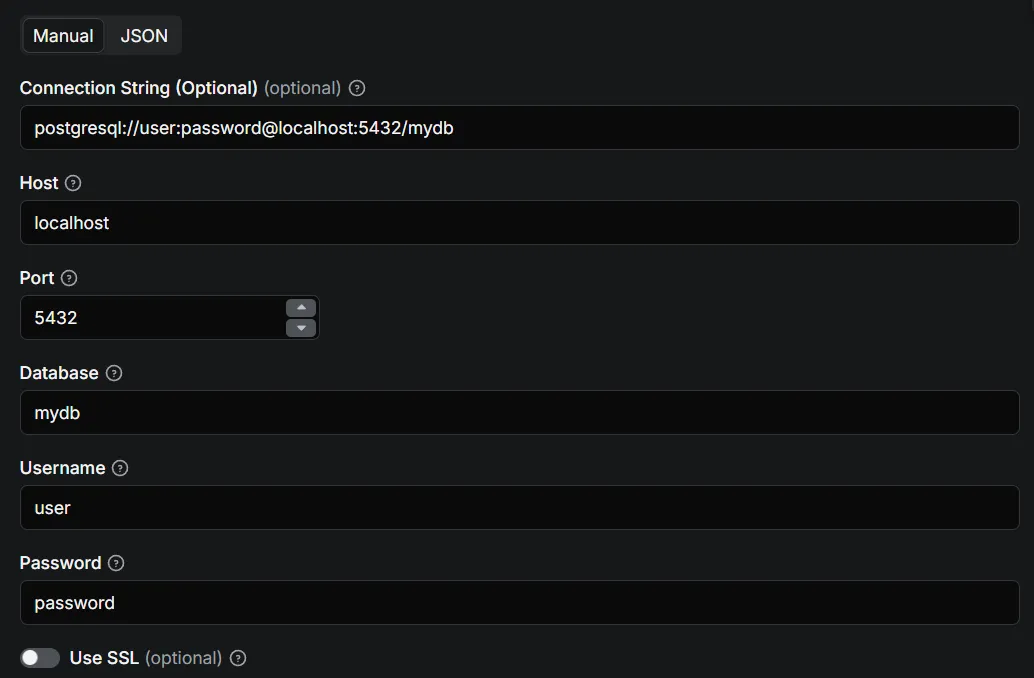

To start extracting your PostgreSQL database, simply fill in the input form. You can configure the extraction with the following options:

- Connection String - Use a full PostgreSQL connection string (e.g., postgresql://user:password@host:port/database). If provided, other connection parameters will be ignored.

- Individual Parameters - Provide host, port, database, username, and password separately

- Tables Filter - Specify which tables to extract (leave empty for all tables)

- Exclude Tables - List tables to skip during extraction

- Max Rows Per Table - Limit the number of rows extracted from each table (optional)
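The three filtering options above can be pictured as a selection step followed by one query per table. The Python sketch below is an illustration only: it assumes that filters match bare table names (not `schema.table`), that an empty include list means "all tables", that exclusions are applied after inclusions, and that the row limit becomes a SQL `LIMIT`. None of these details are confirmed by the Actor's documentation.

```python
from typing import Iterable, List, Optional, Tuple

Table = Tuple[str, str]  # (schema, table_name)


def select_tables(all_tables: Iterable[Table],
                  include: Optional[List[str]] = None,
                  exclude: Optional[List[str]] = None) -> List[Table]:
    """Apply hypothetical include/exclude rules to the discovered tables."""
    include_set = set(include or [])
    exclude_set = set(exclude or [])
    selected = []
    for schema, name in all_tables:
        if include_set and name not in include_set:
            continue  # a non-empty include list acts as an allow-list
        if name in exclude_set:
            continue  # exclusions win over inclusions (assumption)
        selected.append((schema, name))
    return selected


def quote_ident(identifier: str) -> str:
    """Double-quote a PostgreSQL identifier, doubling any embedded quotes."""
    return '"' + identifier.replace('"', '""') + '"'


def build_select(schema: str, name: str, max_rows: Optional[int] = None) -> str:
    """Build the per-table query; max_rows maps to LIMIT (assumption)."""
    sql = f"SELECT * FROM {quote_ident(schema)}.{quote_ident(name)}"
    if max_rows is not None:
        sql += f" LIMIT {int(max_rows)}"
    return sql


tables = [("public", "users"), ("public", "orders"), ("public", "audit_log")]
print(select_tables(tables, exclude=["audit_log"]))
# [('public', 'users'), ('public', 'orders')]
print(build_select("public", "users", max_rows=100))
# SELECT * FROM "public"."users" LIMIT 100
```

In real code, a driver's own identifier quoting (for example psycopg2's `sql.Identifier`) is preferable to a hand-written `quote_ident`.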

Here's what the filled-out input schema looks like:

And here it is written in JSON:

Pro Tip: Use connection strings for quick setup, or individual parameters for more control. Enable SSL for cloud database connections.
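To see how a connection string relates to the individual parameters, the standard-library Python sketch below splits a `postgresql://` URI into host, port, database, username, and password. The dictionary keys mirror the form fields listed above but are illustrative names, not necessarily the Actor's input field names; query parameters such as `sslmode` are collected under a hypothetical `options` key.

```python
from urllib.parse import urlsplit, unquote, parse_qsl


def split_connection_string(conn_str: str) -> dict:
    """Break a postgresql:// URI into individual connection parameters."""
    parts = urlsplit(conn_str)
    if parts.scheme not in ("postgresql", "postgres"):
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")
    return {
        "host": parts.hostname,
        "port": parts.port or 5432,  # 5432 is PostgreSQL's default port
        "database": unquote(parts.path.lstrip("/")),
        # urlsplit leaves user info percent-encoded, so decode it here.
        "username": unquote(parts.username) if parts.username is not None else None,
        "password": unquote(parts.password) if parts.password is not None else None,
        "options": dict(parse_qsl(parts.query)),
    }


print(split_connection_string("postgresql://app:s3cr%40t@db.example.com:6543/shop?sslmode=require"))
```

Note that special characters in a password (such as `@`) must be percent-encoded inside a connection string, which is one reason the individual parameters can be easier to get right.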

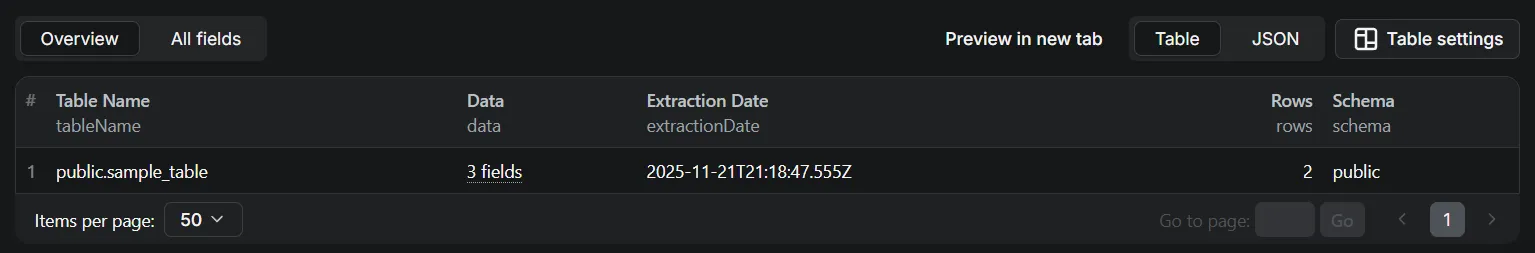

Output

After the Actor finishes its run, you'll get a dataset with the extracted database tables. Each table is exported as a separate record with all its data. You can download those results in Excel, HTML, XML, JSON, or CSV format.

Here's an example of extracted PostgreSQL data you'll get:

Each table gets its own separate dataset named {schema}_{tableName}. For example, if you have a table users in the public schema, it will create a dataset named public_users containing all rows from that table.
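The `{schema}_{tableName}` rule is easy to reproduce when you want to locate a table's dataset from your own code. One consequence worth checking, shown in the Python sketch below, is that the rule can map two different tables to the same name when schema or table names contain underscores: schema `sales_eu` with table `orders` and schema `sales` with table `eu_orders` both become `sales_eu_orders`. How the Actor handles such a clash is not documented here, so the sketch only detects it.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def dataset_name(schema: str, table: str) -> str:
    """Dataset name for a table, following the {schema}_{tableName} rule."""
    return f"{schema}_{table}"


def find_name_collisions(tables: Iterable[Tuple[str, str]]) -> Dict[str, List[Tuple[str, str]]]:
    """Return dataset names that more than one (schema, table) pair maps to."""
    by_name: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
    for schema, table in tables:
        by_name[dataset_name(schema, table)].append((schema, table))
    return {name: pairs for name, pairs in by_name.items() if len(pairs) > 1}


print(dataset_name("public", "users"))  # public_users
print(find_name_collisions([("sales_eu", "orders"), ("sales", "eu_orders"), ("public", "users")]))
# {'sales_eu_orders': [('sales_eu', 'orders'), ('sales', 'eu_orders')]}
```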

Example dataset structure for a users table:

What You Get: Each table from your database gets its own separate dataset with all its rows, making it easy to work with individual tables independently

Download Options: CSV, Excel, or JSON formats for easy integration with your business tools

Why Choose the PostgreSQL Database Extractor?

- Complete Extraction: Extract entire databases or specific tables with one click

- Dataset Storage: Each table is saved to its own dataset for easy access

- Secure Connections: SSL support for cloud databases and production environments

- Table Filtering: Include or exclude specific tables based on your needs

- Row Limits: Control data volume with per-table row limits

- Multiple Formats: Export to JSON, CSV, Excel, and more

- No Coding Required: Simple form-based interface, no SQL knowledge needed

Time Savings: Extract entire databases in minutes instead of hours of manual work

Efficiency: Automated extraction eliminates the need for custom scripts or database tools

How to Use

- Sign Up: Create a free account with $5 credit (takes 2 minutes)

- Find the Extractor: Visit the PostgreSQL Database Extractor page

- Set Connection: Enter your PostgreSQL connection details (connection string or individual parameters)

- Configure Options: Choose tables to extract, row limits, and storage type

- Run It: Click "Start" and let it extract your database

- Download Data: Get your results in the "Dataset" tab as CSV, Excel, or JSON

Total Time: 5-10 minutes for most databases

No Technical Skills Required: Everything is point-and-click

Business Use Cases

Database Administrators:

- Create regular database backups

- Extract data for migration projects

- Export data for compliance and auditing

- Prepare data for system upgrades

Data Analysts:

- Extract data for analysis and reporting

- Export data to Excel or CSV for analysis

- Create data snapshots for comparison

- Prepare data for visualization tools

Developers:

- Extract databases for development environments

- Export data for testing and QA

- Migrate data between systems

- Create data dumps for version control

Business Teams:

- Export customer data for CRM integration

- Extract transaction data for accounting

- Create reports from database tables

- Backup critical business data

Using PostgreSQL Database Extractor with the Apify API

For advanced users who want to automate this process, you can control the extractor programmatically with the Apify API. This allows you to schedule regular database extractions and integrate with your existing business tools.

- Node.js: Install the apify-client NPM package

- Python: Use the apify-client PyPI package

- See the Apify API reference for full details
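As a starting point for automation, here is a minimal Python sketch using the apify-client package. Several names in it are hypothetical and labeled as such: `ACTOR_ID` is a placeholder slug (copy the real ID from the Actor's API tab), the `run_input` keys are guesses at the input schema, and the `APIFY_TOKEN` and `PG_CONNECTION_STRING` environment variable names were chosen for this example.

```python
"""Sketch: start the extractor via the Apify API, then list your named datasets.

ASSUMPTIONS (hypothetical, check the Actor's API tab before use):
- ACTOR_ID is a placeholder slug, not a confirmed Actor ID.
- The run_input keys are guesses at the input schema field names.
"""
import os
from typing import Iterable, Optional

ACTOR_ID = "parseforge/postgresql-database-extractor"  # hypothetical slug


def build_run_input(connection_string: str,
                    tables: Optional[Iterable[str]] = None,
                    exclude: Optional[Iterable[str]] = None,
                    max_rows: Optional[int] = None) -> dict:
    """Assemble the run input; every key name here is a hypothetical guess."""
    run_input = {"connectionString": connection_string}
    if tables:
        run_input["tables"] = list(tables)
    if exclude:
        run_input["excludeTables"] = list(exclude)
    if max_rows is not None:
        run_input["maxRowsPerTable"] = max_rows
    return run_input


def run_extraction() -> None:
    """Start a run, wait for it to finish, and print your named datasets."""
    # Imported here so build_run_input() works without apify-client installed.
    from apify_client import ApifyClient

    client = ApifyClient(os.environ["APIFY_TOKEN"])
    run_input = build_run_input(os.environ["PG_CONNECTION_STRING"],
                                tables=["users"], max_rows=1000)
    # call() starts the Actor and waits until the run finishes.
    run = client.actor(ACTOR_ID).call(run_input=run_input)
    if run is None:
        raise RuntimeError("the run did not return any details")
    print("Run status:", run["status"])

    # Per-table datasets are named {schema}_{tableName}, e.g. public_users.
    for ds in client.datasets().list().items:
        print(ds.get("name"), ds.get("itemCount"))
```

Call `run_extraction()` once both environment variables are set. Keeping the input construction in a separate function makes it easy to reuse the same input for scheduled runs.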

Frequently Asked Questions

Q: How does it work?

A: PostgreSQL Database Extractor connects to your PostgreSQL database using standard connection protocols, queries the database schema to discover all tables, and extracts the data row by row into Apify storage.
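The answer above describes two stages: discover the tables from the schema, then read each table's rows. As an illustration only (the Actor's own queries are not published here), the Python sketch below shows a typical PostgreSQL catalog query for listing user tables and a generic DB-API 2.0 helper that streams rows in batches with `fetchmany()`. The demo uses SQLite only so that it is self-contained. With psycopg2, you would pass a named (server-side) cursor such as `conn.cursor(name="extract")`, because the default client-side cursor loads the whole result set into memory on `execute()`.

```python
import sqlite3
from typing import Any, Iterator, Sequence

# A typical PostgreSQL catalog query for discovering user tables
# (illustrative; not taken from the Actor's source).
DISCOVER_TABLES_SQL = """
SELECT table_schema, table_name
FROM information_schema.tables
WHERE table_type = 'BASE TABLE'
  AND table_schema NOT IN ('pg_catalog', 'information_schema')
ORDER BY table_schema, table_name
"""


def iter_rows(cursor: Any, query: str, batch_size: int = 1000) -> Iterator[Sequence[Any]]:
    """Yield rows one at a time while fetching them from the driver in batches."""
    cursor.execute(query)
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:
            break
        yield from batch


# Demo against an in-memory SQLite database, since any DB-API cursor works.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER, name TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [(i, f"user{i}") for i in range(5)])
rows = list(iter_rows(conn.cursor(), "SELECT id, name FROM users ORDER BY id", batch_size=2))
print(len(rows))  # 5
```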

Q: Is my database secure?

A: When SSL is enabled, the connection between the extractor and your database is encrypted. Your database credentials are stored securely and never exposed. The extractor only reads data and never modifies your database.

Q: Can I extract specific tables only?

A: Yes, you can specify which tables to extract or exclude certain tables from extraction. This gives you full control over what data is exported.

Q: What if my database is very large?

A: You can set row limits per table to control the volume of data extracted. The extractor handles large databases efficiently and provides progress updates.

Q: Can I schedule regular extractions?

A: Yes, using the Apify API or scheduler, you can set up automated extractions to run daily, weekly, or on any schedule you need.

Q: What formats can I download?

A: You can download your extracted data as JSON, CSV, Excel, HTML, or XML formats, making it easy to use in any business tool.
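Besides the "Dataset" tab, Apify datasets can be downloaded through the API's dataset items endpoint, where a `format` query parameter selects the file type (`json`, `csv`, `xlsx` for Excel, `html`, `xml`, among others). The Python sketch below only builds the URL. `DATASET_ID` is a placeholder, and for private datasets you also need to authenticate with your API token, for example with an `Authorization: Bearer` header.

```python
API_BASE = "https://api.apify.com/v2"
# Format values accepted by the dataset items endpoint for the file types above.
FORMATS = {"json", "csv", "xlsx", "html", "xml"}


def dataset_download_url(dataset_id: str, fmt: str = "json") -> str:
    """Build a download URL for a dataset's items in the requested format."""
    if fmt not in FORMATS:
        raise ValueError(f"unsupported format: {fmt!r}")
    return f"{API_BASE}/datasets/{dataset_id}/items?format={fmt}"


print(dataset_download_url("DATASET_ID", "csv"))
# https://api.apify.com/v2/datasets/DATASET_ID/items?format=csv
```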

Q: Is my data private?

A: Yes, your database data is stored securely in your Apify account and is never shared with third parties. You have full control over your data.

Integrate PostgreSQL Database Extractor with any app and automate your workflow

Last but not least, PostgreSQL Database Extractor can be connected with almost any cloud service or web app thanks to integrations on the Apify platform.

These include:

Alternatively, you can use webhooks to carry out an action whenever an event occurs, e.g. get a notification whenever PostgreSQL Database Extractor successfully finishes a run.

🔗 Recommended Actors

Looking for more data collection tools? Check out these related actors:

| Actor | Description | Link |
|---|---|---|
| PDF to JSON Parser | Convert PDF documents to structured JSON using AI OCR | https://apify.com/parseforge/pdf-to-json-parser |
| ID to JSON Parser | Extract structured data from ID documents using AI | https://apify.com/parseforge/id-to-json-parser |
| HTML to JSON Smart Parser | Intelligently parse HTML documents into structured JSON | https://apify.com/parseforge/html-to-json-smart-parser |
| Hubspot Marketplace Scraper | Extract business app data from HubSpot marketplace | https://apify.com/parseforge/hubspot-marketplace-scraper |
| PR Newswire Scraper | Extract press release and news content | https://apify.com/parseforge/pr-newswire-scraper |

Pro Tip: 💡 Browse our complete collection of data collection actors to find the perfect tool for your business needs.

Need Help? Our support team is here to help you get the most out of this tool.

Contact us to request a new scraper, propose a custom data project, or report a technical issue with this actor at https://tally.so/r/BzdKgA

⚠️ Disclaimer: This Actor is an independent tool and is not affiliated with, endorsed by, or sponsored by PostgreSQL or any of its subsidiaries. All trademarks mentioned are the property of their respective owners.